Adaptive Background Matting Using Background Matching


Mar 14, 2022

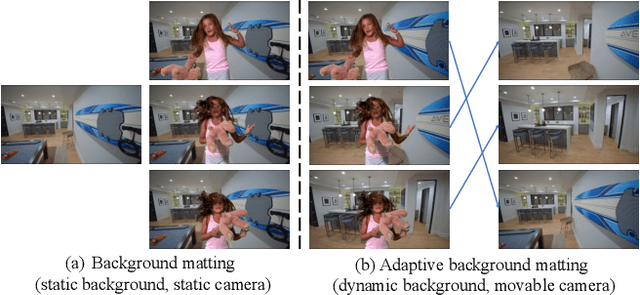

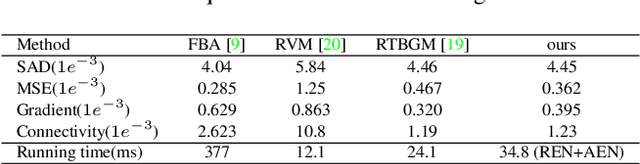

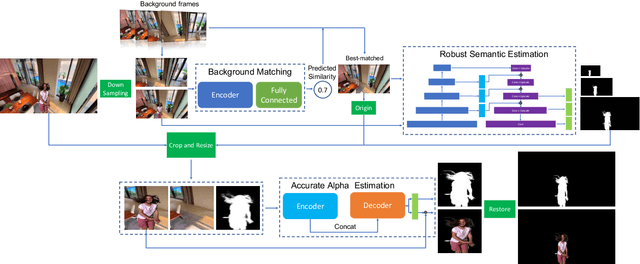

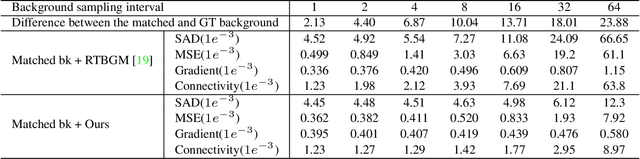

Because matting is a difficult problem, many methods rely on some form of assistance to obtain a high-quality alpha matte. Green-screen matting methods rely on physical equipment. Trimap-based methods take manual interaction as external input. Background-based methods require a pre-captured, static background. These methods are not flexible or convenient enough for wide use. Trimap-free methods are flexible but unstable in complicated video applications. To be both stable and flexible in real applications, we propose an adaptive background matting method. The user first captures a video freely, moving the camera, and afterwards captures a background video that roughly covers the previously captured regions. We use this dynamic background video instead of a static background for accurate matting. The proposed method is convenient to use in any scene because a static camera and a static background are no longer required. To achieve this, we use a background matching network to find the best-matched background, frame by frame, from the dynamic background video. A robust semantic estimation network then estimates a coarse alpha matte. Finally, we crop and zoom the target region according to the coarse alpha matte and estimate the final, accurate alpha matte. In experiments, the proposed method performs comparably to state-of-the-art matting methods.
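The abstract does not say how the target region is chosen from the coarse alpha matte. As an illustration only, one plausible reading is a padded bounding box around the pixels whose coarse alpha exceeds a small threshold. The sketch below assumes that reading; the function names `crop_box_from_alpha` and `crop`, the `threshold` and `margin` parameters, and their default values are hypothetical and do not come from the paper. The zoom (resize) step and the refinement network are left out.

```python
def crop_box_from_alpha(alpha, threshold=0.05, margin=1):
    """Return (top, left, bottom, right) around the foreground, or None.

    `alpha` is a 2D list of floats in [0, 1] (the coarse alpha matte).
    `bottom` and `right` are exclusive. `threshold` and `margin` are
    illustrative assumptions, not values taken from the paper.
    """
    height = len(alpha)
    width = len(alpha[0]) if height else 0

    # Rows and columns that contain at least one foreground-like pixel.
    rows = [y for y in range(height)
            if any(a > threshold for a in alpha[y])]
    cols = [x for x in range(width)
            if any(alpha[y][x] > threshold for y in range(height))]
    if not rows or not cols:
        return None  # no foreground found in the coarse matte

    # Pad the tight box by `margin` pixels and clamp to the image bounds.
    top = max(rows[0] - margin, 0)
    left = max(cols[0] - margin, 0)
    bottom = min(rows[-1] + 1 + margin, height)
    right = min(cols[-1] + 1 + margin, width)
    return top, left, bottom, right


def crop(image, box):
    """Crop a 2D list `image` to `box` = (top, left, bottom, right)."""
    top, left, bottom, right = box
    return [row[left:right] for row in image[top:bottom]]


if __name__ == "__main__":
    coarse_alpha = [
        [0.0, 0.0, 0.0, 0.0, 0.0, 0.0],
        [0.0, 0.0, 0.0, 0.0, 0.0, 0.0],
        [0.0, 0.0, 0.4, 0.9, 0.0, 0.0],
        [0.0, 0.0, 0.8, 1.0, 0.0, 0.0],
        [0.0, 0.0, 0.0, 0.0, 0.0, 0.0],
    ]
    box = crop_box_from_alpha(coarse_alpha)
    print(box)
    print(crop(coarse_alpha, box))
```

In a full pipeline, the cropped region would then be resized to the input resolution of the refinement stage, and the refined matte pasted back into the full frame at the same box.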
