Adaptive ABAC Policy Learning: A Reinforcement Learning Approach

May 18, 2021

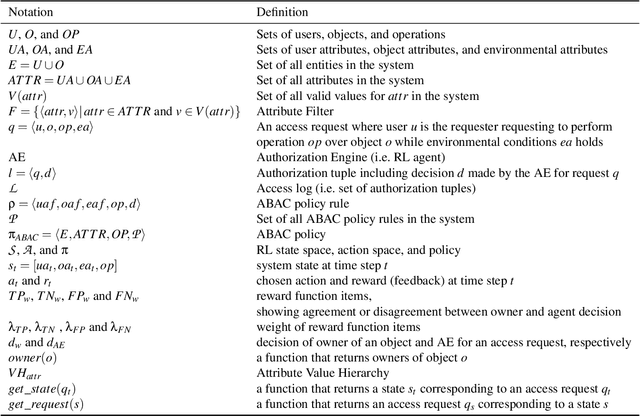

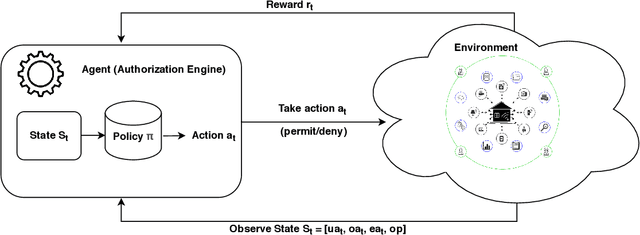

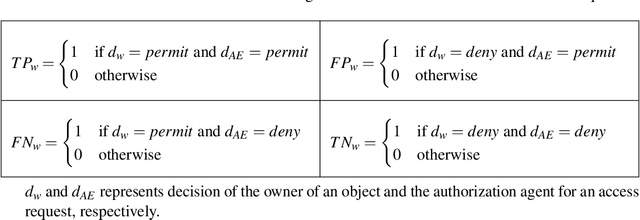

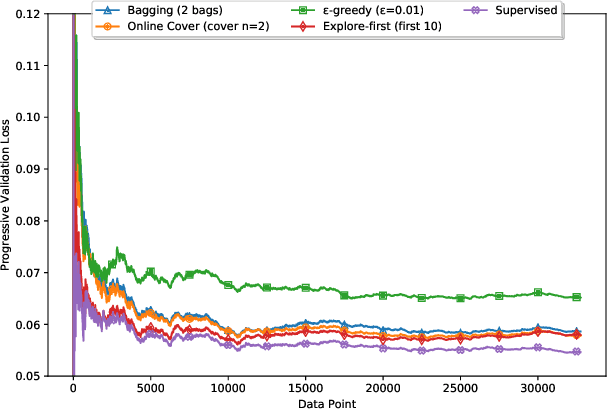

With rapid advances in computing systems, there is an increasing demand for more effective and efficient access control (AC) approaches. Recently, Attribute-Based Access Control (ABAC) approaches have shown promise in fulfilling the AC needs of such emerging complex computing environments. An ABAC model grants access to a requester based on the attributes of entities in a system and an authorization policy; however, its generality and flexibility come at a higher cost. Further, the increasing complexity of organizational systems and the need for federated access to their resources make the task of AC enforcement and management much more challenging. In this paper, we propose an adaptive ABAC policy learning approach to automate the authorization management task. We model ABAC policy learning as a reinforcement learning problem. In particular, we propose a contextual bandit system in which an authorization engine adapts an ABAC model through a feedback control loop: it interacts with users and administrators of the system and uses their feedback to help the model make authorization decisions. We propose four methods for initializing the learning model and a planning approach based on an attribute value hierarchy to accelerate the learning process. We use a home IoT environment as a running example for developing the adaptive ABAC policy learning model. We evaluate our proposed approach on real and synthetic data, considering both complete and sparse datasets. Our experimental results show that the proposed approach achieves performance comparable to that of supervised learning approaches in many scenarios and even outperforms them in several situations.
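To make the feedback loop concrete, the following is a minimal sketch of a contextual bandit used for authorization decisions. It is not the authors' model: it assumes a tabular epsilon-greedy learner, a context made of three attribute values (role, device, operation), and a reward of +1 or -1 standing in for user/administrator feedback. The class `BanditAuthorizer`, the function `simulate`, and the home IoT rules in `truth` are all hypothetical names and data chosen for illustration. The initialization methods and the attribute-value-hierarchy planning mentioned in the abstract are not modeled here.

```python
import random
from collections import defaultdict

ACTIONS = ("permit", "deny")


class BanditAuthorizer:
    """Tabular epsilon-greedy contextual bandit (illustrative assumption,
    not the model described in the paper)."""

    def __init__(self, epsilon=0.1, seed=0):
        self.epsilon = epsilon
        self.rng = random.Random(seed)
        self.value = defaultdict(float)  # (context, action) -> mean reward
        self.count = defaultdict(int)    # (context, action) -> times tried

    def best(self, context):
        # Greedy decision: the action with the highest estimated reward.
        return max(ACTIONS, key=lambda a: self.value[(context, a)])

    def decide(self, context):
        # Explore with probability epsilon, otherwise exploit.
        if self.rng.random() < self.epsilon:
            return self.rng.choice(ACTIONS)
        return self.best(context)

    def feedback(self, context, action, reward):
        # Incremental update of the running mean reward.
        key = (context, action)
        self.count[key] += 1
        self.value[key] += (reward - self.value[key]) / self.count[key]


def simulate(rounds=2000, seed=0):
    """Run the feedback loop against a hypothetical home IoT policy."""
    rng = random.Random(seed)
    # Hypothetical ground truth: (role, device, operation) -> decision.
    truth = {
        ("parent", "door_lock", "unlock"): "permit",
        ("child", "door_lock", "unlock"): "deny",
        ("child", "light", "turn_on"): "permit",
        ("guest", "camera", "view"): "deny",
    }
    engine = BanditAuthorizer(epsilon=0.1, seed=seed)
    contexts = list(truth)
    for _ in range(rounds):
        ctx = rng.choice(contexts)
        action = engine.decide(ctx)
        # Simulated feedback: +1 if the decision matches the intended policy.
        reward = 1.0 if action == truth[ctx] else -1.0
        engine.feedback(ctx, action, reward)
    return engine, truth


if __name__ == "__main__":
    engine, truth = simulate()
    for ctx in truth:
        print(ctx, "->", engine.best(ctx))
```

A tabular learner like this cannot generalize across unseen attribute combinations, which is one reason a practical system would likely need a richer model and the kind of initialization and hierarchy-based planning the abstract refers to.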
