Adaptation to Misspecified Kernel Regularity in Kernelised Bandits


Apr 26, 2023

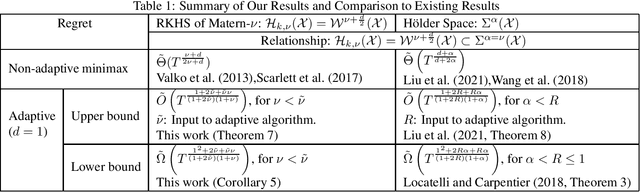

In kernelised bandit problems, that is, continuum-armed bandit problems where the underlying function resides in a reproducing kernel Hilbert space (RKHS), an important open problem is how well learning algorithms can adapt when the regularity of the associated kernel function is unknown. In this work, we study adaptivity to the regularity of translation-invariant kernels, which is characterized by the decay rate of the Fourier transform of the kernel, in the bandit setting. We derive an adaptivity lower bound, proving that it is impossible to simultaneously achieve optimal cumulative regret in a pair of RKHSs with different regularities. To verify the tightness of this lower bound, we show that an existing bandit model selection algorithm, applied with minimax non-adaptive kernelised bandit algorithms, matches the lower bound in its dependence on $T$, the total number of steps, up to log factors. By filling in the regret bounds for adaptivity between RKHSs, we connect the statistical difficulty of adaptivity in continuum-armed bandits across three fundamental types of function spaces: RKHS, Sobolev space, and Hölder space.
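To make "regularity characterized by the decay rate of the Fourier transform" concrete, the sketch below uses the Matérn kernel as an illustration. The Matérn family is an assumption chosen for the example and is not named in the abstract. Its spectral density in dimension $d$ is proportional to $(2\nu/\ell^2 + \|\omega\|^2)^{-(\nu + d/2)}$, so the tail decays polynomially with exponent $2\nu + d$. The function names `matern_spectral_density` and `tail_decay_exponent` are hypothetical helpers, and normalising constants are dropped because they do not affect the decay rate.

```python
import math


def matern_spectral_density(omega, nu, lengthscale=1.0, d=1):
    """Unnormalised Matern spectral density at frequency magnitude `omega`.

    Illustrative assumption: the Matern family stands in for a generic
    translation-invariant kernel; constants are omitted on purpose.
    """
    return (2.0 * nu / lengthscale**2 + omega**2) ** (-(nu + d / 2.0))


def tail_decay_exponent(nu, d=1, w1=1e3, w2=1e4):
    """Estimate the polynomial decay exponent from the log-log slope
    of the spectral density between two large frequencies."""
    s1 = matern_spectral_density(w1, nu, d=d)
    s2 = matern_spectral_density(w2, nu, d=d)
    return -(math.log(s2) - math.log(s1)) / (math.log(w2) - math.log(w1))


if __name__ == "__main__":
    # A smoother kernel (larger nu) has a faster-decaying spectrum.
    for nu in (0.5, 1.5, 2.5):
        print(f"nu={nu}: estimated exponent {tail_decay_exponent(nu):.4f}, "
              f"expected {2 * nu + 1:.1f}")
```

In this picture, two kernels with different smoothness parameters correspond to the "pair of RKHSs with different regularities" in the abstract: a learner that does not know $\nu$ does not know how quickly the spectrum decays.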
