Accelerated Algorithms for Monotone Inclusions and Constrained Nonconvex-Nonconcave Min-Max Optimization

Paper and Code

Jun 10, 2022

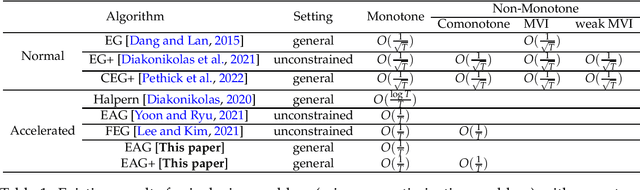

We study monotone inclusions and monotone variational inequalities, as well as their generalizations to non-monotone settings. We first show that the Extra Anchored Gradient (EAG) algorithm, originally proposed by Yoon and Ryu [2021] for unconstrained convex-concave min-max optimization, can be applied to solve the more general problem of Lipschitz monotone inclusion. More specifically, we prove that the EAG solves Lipschitz monotone inclusion problems with an \emph{accelerated convergence rate} of $O(\frac{1}{T})$, which is \emph{optimal among all first-order methods} [Diakonikolas, 2020, Yoon and Ryu, 2021]. Our second result is a new algorithm, called Extra Anchored Gradient Plus (EAG+), which not only achieves the accelerated $O(\frac{1}{T})$ convergence rate for all monotone inclusion problems, but also exhibits the same accelerated rate for a family of general (non-monotone) inclusion problems that concern negative comonotone operators. As a special case of our second result, EAG+ enjoys the $O(\frac{1}{T})$ convergence rate for solving a non-trivial class of nonconvex-nonconcave min-max optimization problems. Our analyses are based on simple potential function arguments, which might be useful for analysing other accelerated algorithms.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge