AAPMT: AGI Assessment Through Prompt and Metric Transformer

Mar 28, 2024

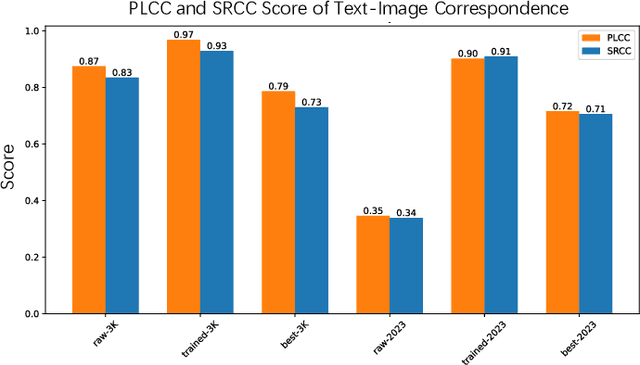

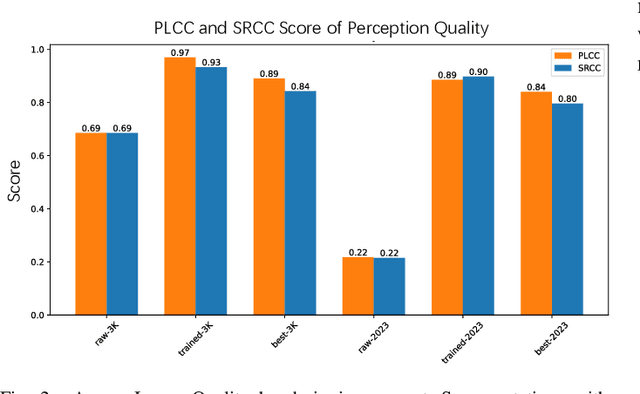

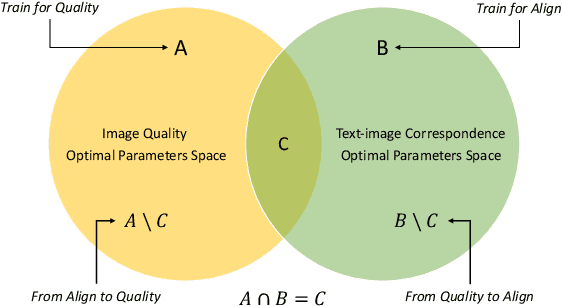

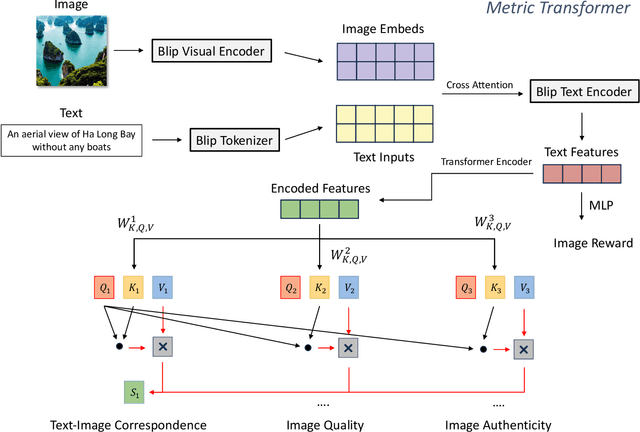

The emergence of text-to-image models marks a significant milestone in the evolution of AI-generated images (AGIs) and has expanded their use in domains such as design and entertainment. Despite these breakthroughs, the quality of AGIs often remains suboptimal, which highlights the need for effective evaluation methods. Such methods must assess the quality of an image relative to its textual description, and their scores must accurately mirror human perception. Substantial progress has been made in this area, with techniques such as BLIP and DBCNN contributing significantly. However, recent studies, including AGIQA-3K, show that current methods still fall notably short of state-of-the-art (SOTA) standards. This gap calls for a more sophisticated and precise evaluation metric. In response, we aim to develop a model that rates images on metrics such as perceptual quality, authenticity, and text-image correspondence in a way that aligns more closely with human perception. In this paper, we introduce a range of effective methods, including prompt designs and the Metric Transformer, a novel structure inspired by the complex interrelationships among the various AGI quality metrics. The code is available at https://github.com/huskydoge/CS3324-Digital-Image-Processing/tree/main/Assignment1
