A Unit-Consistent Tensor Completion with Applications in Recommender Systems


Apr 04, 2022

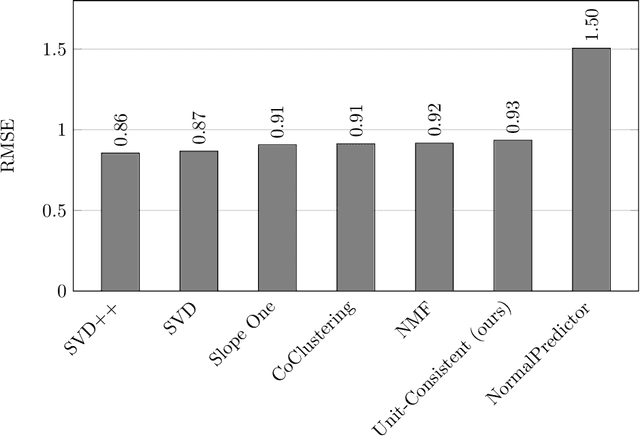

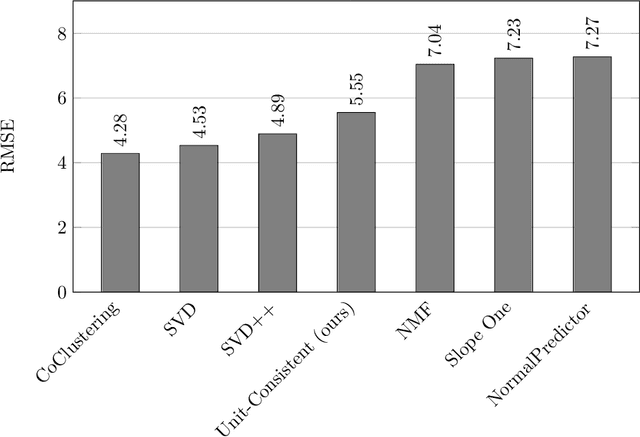

In this paper, we introduce a new consistency-based approach for defining and solving nonnegative/positive matrix and tensor completion problems. The novelty of the framework is that, instead of artificially making the problem well-posed by recasting it as an application-arbitrary optimization problem (e.g., minimizing a bulk structural measure such as rank or norm), we show that a single property/constraint, preservation of unit-scale consistency, guarantees existence of a solution and, under relatively weak support assumptions, uniqueness. The framework and solution algorithms also generalize directly to tensors of arbitrary dimension while maintaining computational complexity that is linear in problem size for fixed dimension d. In the context of recommender system (RS) applications, we prove that two reasonable properties that should be expected to hold for any solution to the RS problem are sufficient to permit uniqueness guarantees to be established within our framework. This is remarkable because it obviates the need for heuristic-based statistical or AI methods, despite what appear to be distinctly human/subjective variables at the heart of the problem. Key theoretical contributions include a general unit-consistent tensor-completion framework with proofs of its properties, together with algorithms of optimal runtime complexity, e.g., O(1) term-completion with preprocessing complexity that is linear in the number of known terms of the matrix/tensor.
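The abstract does not spell out the algorithm, so the following is only a minimal sketch of what unit-scale consistency means for a positive matrix, under an assumed construction: find row and column scalings that bring the known entries of every row and column to product 1, treat each missing entry as 1 in that scaled space, and undo the scaling. The function names `fit_log_scalings` and `complete_entry`, the fixed number of sweeps, and the toy data are all hypothetical. The iterative fitting loop is an illustrative stand-in and is not claimed to match the linear-time preprocessing described in the paper.

```python
import math

def fit_log_scalings(known, n_rows, n_cols, sweeps=500):
    """Fit log-scalings u (rows) and v (columns) such that the known entries of
    exp(u[i]) * a[i][j] * exp(v[j]) have product 1 along every row and column.

    `known` maps (i, j) -> positive value. Each sweep costs O(len(known)).
    Assumption: alternating averaging in the log domain, run for a fixed
    number of sweeps (hypothetical choice, not taken from the paper).
    """
    logs = {ij: math.log(a) for ij, a in known.items()}
    rows = [[] for _ in range(n_rows)]
    cols = [[] for _ in range(n_cols)]
    for (i, j) in logs:
        rows[i].append(j)
        cols[j].append(i)
    u = [0.0] * n_rows
    v = [0.0] * n_cols
    for _ in range(sweeps):
        # Make the log-sum of known entries in each row zero, given v.
        for i, js in enumerate(rows):
            if js:
                u[i] = -sum(logs[i, j] + v[j] for j in js) / len(js)
        # Make the log-sum of known entries in each column zero, given u.
        for j, idx in enumerate(cols):
            if idx:
                v[j] = -sum(logs[i, j] + u[i] for i in idx) / len(idx)
    return u, v

def complete_entry(u, v, i, j):
    """O(1) lookup: a missing entry equals 1 in scaled space, so unscale it."""
    return math.exp(-(u[i] + v[j]))

# Toy 3x3 positive matrix with entries (0, 2) and (2, 0) unknown.
known = {(0, 0): 2.0, (0, 1): 4.0,
         (1, 0): 1.0, (1, 1): 3.0, (1, 2): 5.0,
         (2, 1): 6.0, (2, 2): 7.0}
u, v = fit_log_scalings(known, 3, 3)

# Change the units of every row and column, then complete again.
r = [10.0, 0.5, 3.0]
c = [2.0, 7.0, 0.1]
scaled = {(i, j): r[i] * a * c[j] for (i, j), a in known.items()}
u2, v2 = fit_log_scalings(scaled, 3, 3)

for (i, j) in [(0, 2), (2, 0)]:
    before = complete_entry(u, v, i, j)
    after = complete_entry(u2, v2, i, j)
    # Unit consistency: the completed value rescales exactly like the data.
    print((i, j), math.isclose(after, r[i] * c[j] * before, rel_tol=1e-6))
```

In this sketch, changing the units of a row or column (multiplying it by a positive constant) changes each completed value by the same factor, which is the consistency property the abstract refers to. Once `u` and `v` are fitted, each missing term is a constant-time lookup, in the spirit of the O(1) term-completion mentioned above.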
