A Two stage Adaptive Knowledge Transfer Evolutionary Multi-tasking Based on Population Distribution for Multi/Many-Objective Optimization


Jan 03, 2020

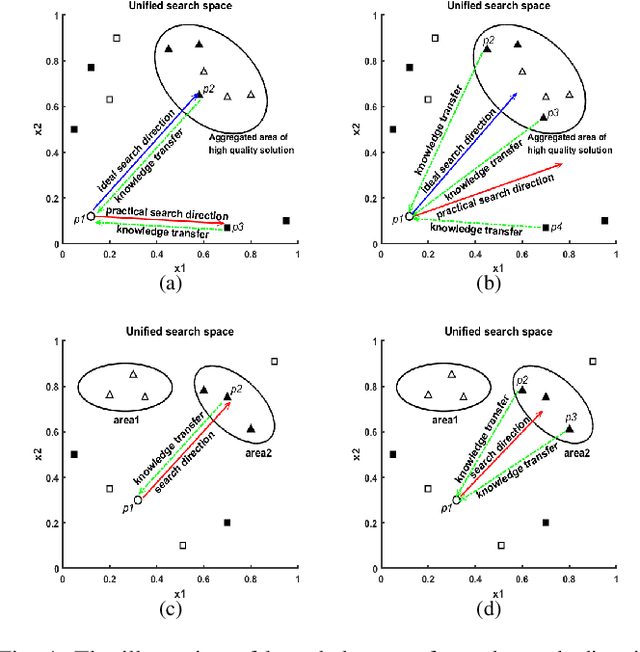

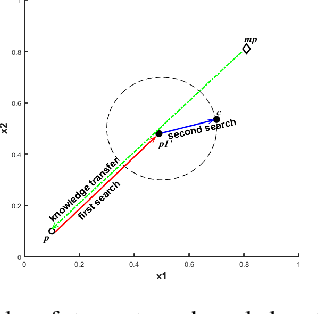

Multi-tasking optimization can usually achieve better performance than traditional single-task optimization through knowledge transfer between tasks. However, current multi-tasking optimization algorithms have several deficiencies. For problems with high inter-task similarity, knowledge that could accelerate convergence is not fully exploited. For problems with low similarity, the probability of negative transfer is high, which may degrade optimization performance. In addition, some previously proposed knowledge transfer methods do not fully consider how to handle a population that has fallen into a local optimum. To address these issues, a two-stage adaptive knowledge transfer evolutionary multi-tasking optimization algorithm based on population distribution, denoted EMT-PD, is proposed. EMT-PD accelerates and improves the convergence of tasks using knowledge extracted from a probability model that reflects the search trend of the whole population. In the first transfer stage, an adaptive weight adjusts the step size of each individual's search, which reduces the impact of negative transfer. In the second transfer stage, the individual's search range is further adjusted dynamically, which increases population diversity and helps the population escape local optima. Experimental results on multi-tasking multi-objective optimization test suites show that EMT-PD outperforms six state-of-the-art optimization algorithms. To further investigate the effectiveness of EMT-PD on many-objective optimization problems, a multi-tasking many-objective test suite is designed, and the experimental results on it also demonstrate that EMT-PD is highly competitive.
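The abstract describes the two transfer stages only at a high level. What follows is a minimal illustrative sketch of distribution-based transfer. It makes several assumptions: each population is summarized by a per-dimension Gaussian model, stage 1 shifts an individual along the difference between the source and target population means with a step scaled by an adaptive weight, and stage 2 adds noise scaled by the source model's spread to widen the search range. All function names (`gaussian_model`, `stage1_transfer`, `stage2_transfer`, `update_weight`, `transfer_offspring`) are hypothetical. So are the success-rate weight update and the unified [0, 1] search space. These are not EMT-PD's published update rules.

```python
import numpy as np

# Hypothetical sketch of two-stage, distribution-based knowledge transfer.
# NOT the EMT-PD update rules; decision variables are assumed to lie in [0, 1]^D.

def gaussian_model(pop):
    """Per-dimension Gaussian model (mean, std) of a population; rows are individuals."""
    return pop.mean(axis=0), pop.std(axis=0) + 1e-12


def stage1_transfer(x, mu_src, mu_tgt, weight):
    """Stage 1 (assumed form): shift x along the difference between the source and
    target population means; the adaptive weight scales the step size."""
    return x + weight * (mu_src - mu_tgt)


def stage2_transfer(x, sigma_src, scale, rng):
    """Stage 2 (assumed form): add Gaussian noise whose spread is the source model's
    std times a dynamically adjusted scale, widening the search range."""
    return x + scale * sigma_src * rng.standard_normal(x.shape)


def update_weight(weight, n_success, n_trials, lr=0.1):
    """Move the weight toward the success rate of recently transferred offspring
    (an assumed adaptation rule); unchanged if no transfers were attempted."""
    if n_trials == 0:
        return weight
    return (1.0 - lr) * weight + lr * (n_success / n_trials)


def transfer_offspring(x, pop_src, pop_tgt, weight, scale, rng, stage):
    """Produce one transferred offspring for the target task, clipped to [0, 1]."""
    mu_src, sigma_src = gaussian_model(pop_src)
    mu_tgt, _ = gaussian_model(pop_tgt)
    child = stage1_transfer(x, mu_src, mu_tgt, weight)
    if stage == 2:
        child = stage2_transfer(child, sigma_src, scale, rng)
    return np.clip(child, 0.0, 1.0)


rng = np.random.default_rng(0)
pop_src = rng.random((20, 5))  # toy population of the source task
pop_tgt = rng.random((20, 5))  # toy population of the target task
x = pop_tgt[0]
child1 = transfer_offspring(x, pop_src, pop_tgt, weight=0.5, scale=1.0, rng=rng, stage=1)
child2 = transfer_offspring(x, pop_src, pop_tgt, weight=0.5, scale=1.5, rng=rng, stage=2)
```

In this sketch, a small weight keeps offspring close to their parents when transfers rarely succeed, which is one plausible way to damp negative transfer. The enlarged noise in stage 2 trades precision for diversity.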
