A Trilogy of AI Safety Frameworks: Paths from Facts and Knowledge Gaps to Reliable Predictions and New Knowledge

Oct 09, 2024

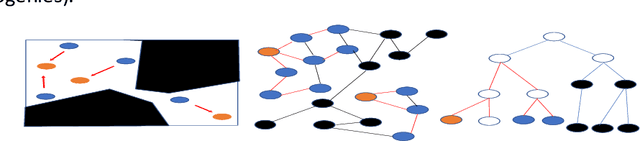

AI safety has become a vital front-line concern for many scientists within and outside the AI community. The anticipated risks, both immediate and long-term, range from existential threats to humanity to deep fakes and bias in machine learning systems [1-5]. In this paper, we reduce the full scope and immense complexity of AI safety concerns to a trilogy of important but tractable opportunities for advances that have the short-term potential to improve AI safety and reliability without reducing AI innovation in critical domains. We discuss this vision through several case studies that have already produced proofs of concept in critical ML applications in biomedical science.
