A Test for Shared Patterns in Cross-modal Brain Activation Analysis


Oct 08, 2019

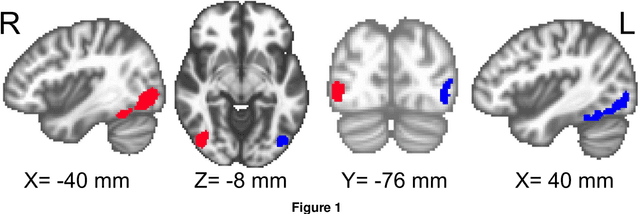

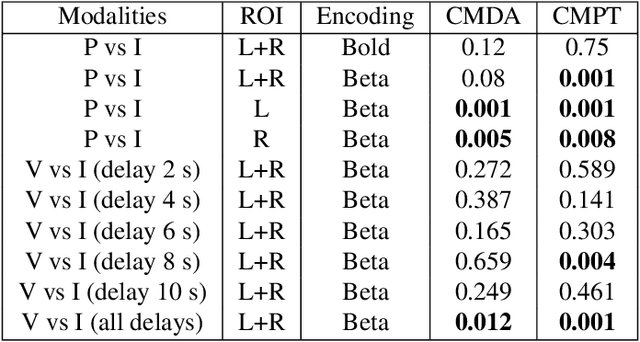

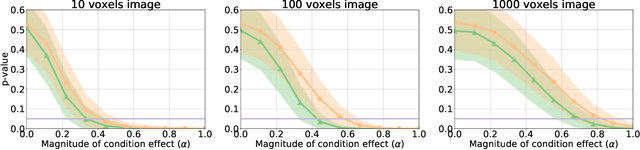

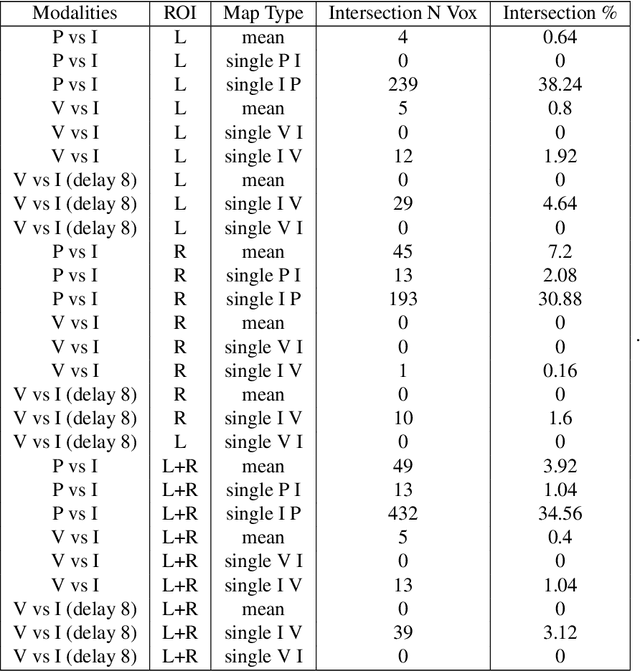

Determining the extent to which different cognitive modalities (understood here as the set of cognitive processes underlying the processing of a stimulus by the brain) rely on overlapping neural representations is a fundamental issue in cognitive neuroscience. Over the last decade, the identification of shared activity patterns has mostly been framed as a supervised learning problem. For instance, a classifier is trained to discriminate categories (e.g. faces vs. houses) in modality I (e.g. perception) and tested on the same categories in modality II (e.g. imagery). This type of analysis is often referred to as cross-modal decoding. In this paper we take a different approach and instead formulate the problem of assessing shared patterns across modalities within the framework of statistical hypothesis testing. We propose both an appropriate test statistic and a scheme based on permutation testing to compute the significance of this test while making only minimal distributional assumptions. We call this test the cross-modal permutation test (CMPT). We also provide empirical evidence on synthetic datasets that our approach has greater statistical power than the cross-modal decoding method while maintaining a low Type I error rate (the rate of rejecting a true null hypothesis). We compare both approaches on an fMRI dataset with three different cognitive modalities (perception, imagery, visual search). Finally, we show how CMPT can be combined with Searchlight analysis to explore the spatial distribution of shared activity patterns.
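To make the baseline concrete, the following is a minimal sketch of cross-modal decoding with a label-permutation significance test on synthetic data. It is not the CMPT statistic, which the abstract does not specify. The nearest-centroid classifier, the function names, the synthetic data generator, and the choice to permute the test-modality labels are all assumptions made for illustration only.

```python
import numpy as np


def nearest_centroid_fit(X, y):
    """Store one mean pattern (centroid) per class label."""
    classes = np.unique(y)
    centroids = np.stack([X[y == c].mean(axis=0) for c in classes])
    return classes, centroids


def nearest_centroid_predict(model, X):
    """Assign each sample to the class with the closest centroid."""
    classes, centroids = model
    dists = ((X[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
    return classes[dists.argmin(axis=1)]


def cross_modal_accuracy(X_a, y_a, X_b, y_b):
    """Train on modality I (X_a, y_a), test on modality II (X_b, y_b)."""
    model = nearest_centroid_fit(X_a, y_a)
    return float((nearest_centroid_predict(model, X_b) == y_b).mean())


def permutation_p_value(X_a, y_a, X_b, y_b, n_perm=500, seed=0):
    """Compare observed accuracy with accuracies under shuffled test labels.

    Assumption: shuffling the modality II labels is used as the null
    (no shared category information across modalities).
    """
    rng = np.random.default_rng(seed)
    observed = cross_modal_accuracy(X_a, y_a, X_b, y_b)
    null = np.array([
        cross_modal_accuracy(X_a, y_a, X_b, rng.permutation(y_b))
        for _ in range(n_perm)
    ])
    # The +1 terms count the observed labelling as one permutation,
    # so the p-value can never be exactly zero.
    p = (1 + np.sum(null >= observed)) / (1 + n_perm)
    return observed, float(p)


def make_synthetic(n_per_class=40, n_voxels=20, shared=True, seed=0):
    """Hypothetical two-class data for two modalities.

    With shared=True both modalities carry the same class pattern;
    with shared=False modality II carries no class information.
    """
    rng = np.random.default_rng(seed)
    y = np.repeat([0, 1], n_per_class)
    pattern_a = rng.normal(size=n_voxels)
    pattern_b = pattern_a if shared else np.zeros(n_voxels)
    sign = np.where(y == 1, 1.0, -1.0)[:, None]
    X_a = sign * pattern_a + rng.normal(size=(y.size, n_voxels))
    X_b = sign * pattern_b + rng.normal(size=(y.size, n_voxels))
    return X_a, y, X_b, y


if __name__ == "__main__":
    X_a, y_a, X_b, y_b = make_synthetic(shared=True)
    acc, p = permutation_p_value(X_a, y_a, X_b, y_b)
    print(f"cross-modal accuracy = {acc:.2f}, permutation p = {p:.4f}")
```

Calling `make_synthetic(shared=False)` instead gives a case where the null hypothesis holds by construction, which is one way to check how often such a test rejects a true null.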
