A study of first-passage time minimization via Q-learning in heated gridworlds


Oct 05, 2021

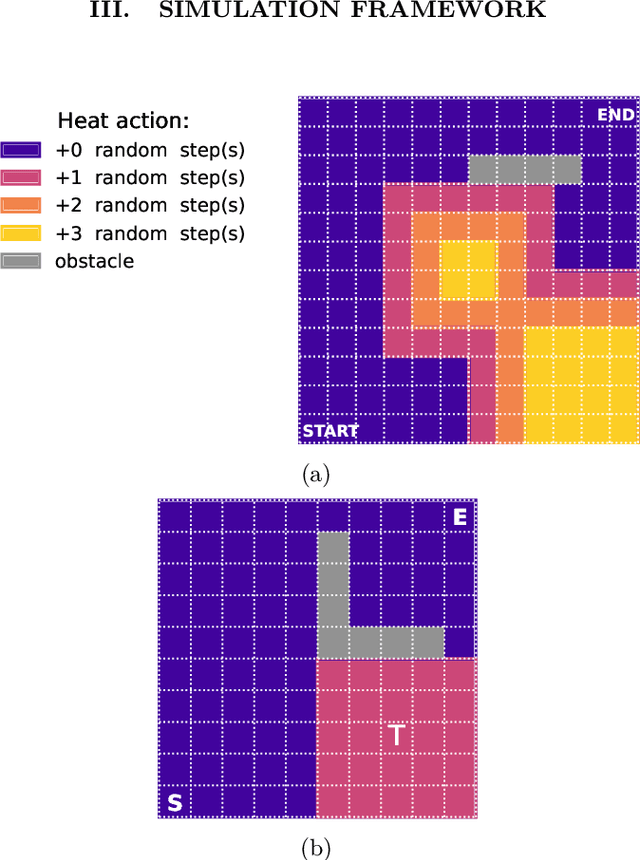

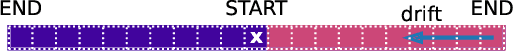

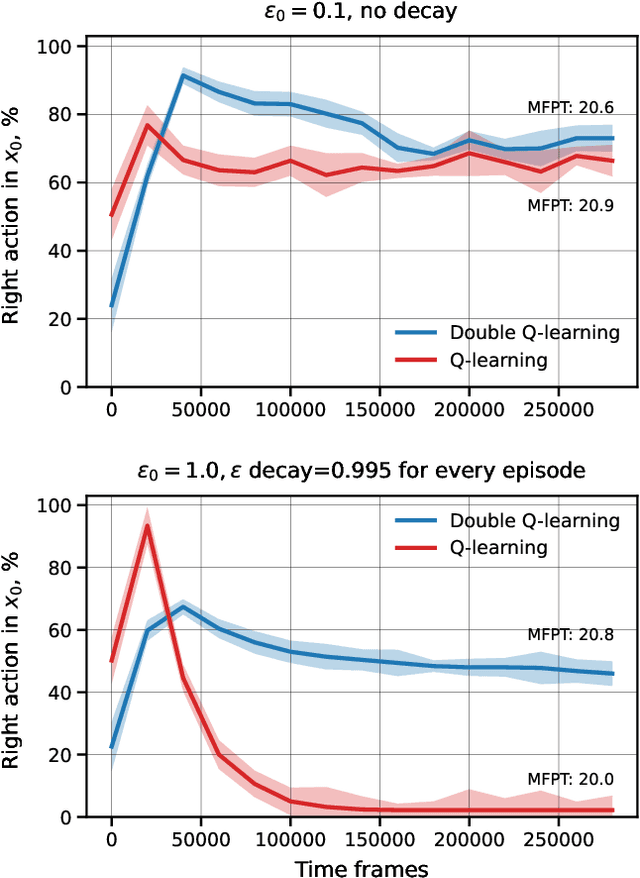

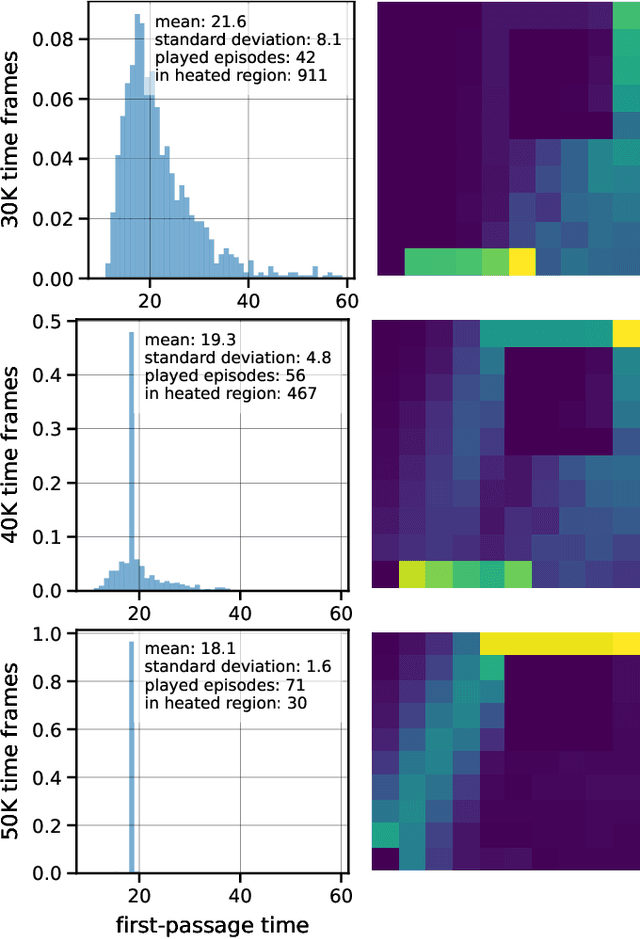

Optimization of first-passage times is required in applications ranging from nanobot navigation to market trading. In such settings, noise levels are often unevenly distributed across the environment. We extensively study how a learning agent fares in one- and two-dimensional heated gridworlds with an uneven temperature distribution. The results show certain bias effects in agents trained via simple tabular Q-learning, SARSA, Expected SARSA, and Double Q-learning. While a high learning rate prevents exploration of regions with higher temperature, a sufficiently low learning rate increases the presence of agents in such regions. The discovered peculiarities and biases of temporal-difference-based reinforcement learning methods should be taken into account in real-world physical applications and agent design.
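To make the setting concrete, here is a minimal sketch of tabular Q-learning on a one-dimensional gridworld with a per-cell temperature. It is an illustration under stated assumptions, not the authors' implementation. The noise model is an assumption: the temperature of a cell is treated as the probability that the chosen step is replaced by a uniformly random one. The reward of -1 per step (so that maximizing return minimizes first-passage time), the function name `train_q_learning`, and all parameter names and default values are likewise hypothetical.

```python
import random


def train_q_learning(temps, start, goal, alpha=0.1, gamma=1.0,
                     epsilon=0.1, episodes=2000, max_steps=500, seed=0):
    """Tabular Q-learning on a 1-D grid; returns Q as a list of [left, right]."""
    rng = random.Random(seed)
    n = len(temps)
    actions = (-1, +1)  # index 0 = step left, index 1 = step right
    q = [[0.0, 0.0] for _ in range(n)]

    for _ in range(episodes):
        s = start
        for _ in range(max_steps):
            if s == goal:
                break
            # Epsilon-greedy action selection (ties go to "right").
            if rng.random() < epsilon:
                a = rng.randrange(2)
            else:
                a = 0 if q[s][0] > q[s][1] else 1

            # ASSUMED noise model: with probability temps[s], the intended
            # step is replaced by a uniformly random "thermal" step.
            step = actions[a]
            if rng.random() < temps[s]:
                step = rng.choice(actions)

            # Walls at both ends of the grid.
            s_next = min(max(s + step, 0), n - 1)

            # ASSUMED reward: -1 per step, so shorter passages score higher.
            reward = -1.0
            if s_next == goal:
                target = reward
            else:
                target = reward + gamma * max(q[s_next])

            # Standard Q-learning temporal-difference update.
            q[s][a] += alpha * (target - q[s][a])
            s = s_next
    return q


if __name__ == "__main__":
    # A hot cell in the middle of an otherwise cold corridor.
    temperatures = [0.0, 0.0, 0.8, 0.0, 0.0, 0.0]
    q_table = train_q_learning(temperatures, start=1, goal=5)
    for state, (q_left, q_right) in enumerate(q_table):
        print(state, round(q_left, 2), round(q_right, 2))
```

Varying `alpha` and the entries of `temperatures` in this sketch is one way to probe how the learning rate interacts with noisy regions, though the toy model is not guaranteed to reproduce the effects reported in the paper.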
