A Singular Value Perspective on Model Robustness

Paper and Code

Dec 07, 2020

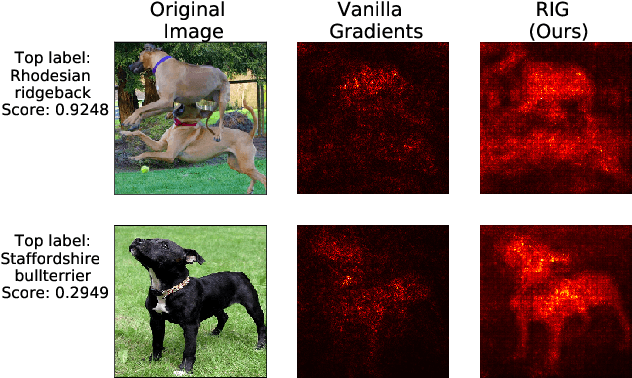

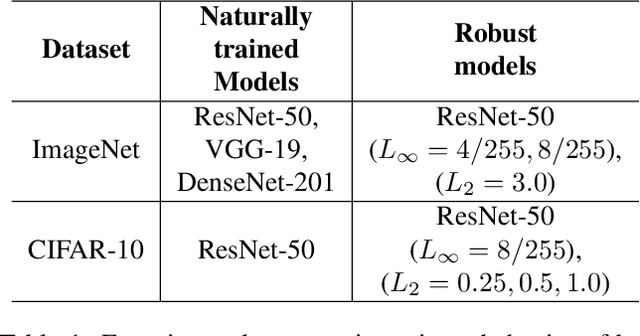

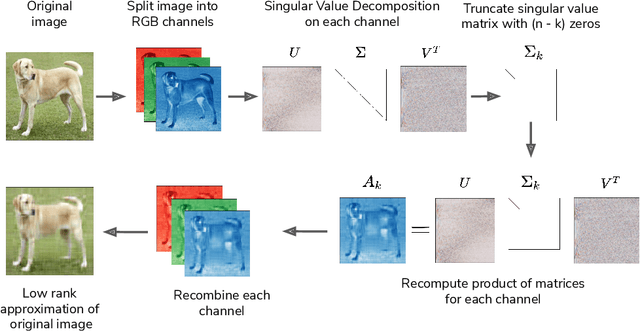

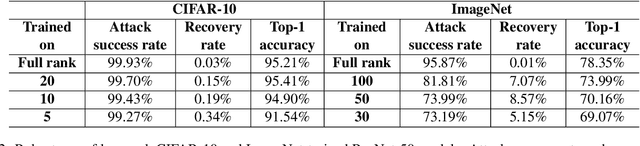

Convolutional Neural Networks (CNNs) have made significant progress on several computer vision benchmarks, but they exhibit numerous non-human biases, such as vulnerability to adversarial samples. Their lack of explainability makes these biases difficult to identify and rectify, and understanding their generalization behavior remains an open problem. In this work, we explore the relationship between the generalization behavior of CNNs and the Singular Value Decomposition (SVD) of images. We show that naturally trained and adversarially robust CNNs exploit highly different features for the same dataset. We demonstrate that these features can be disentangled by SVD for networks trained on ImageNet and CIFAR-10. Finally, we propose Rank Integrated Gradients (RIG), the first rank-based feature attribution method, to understand the dependence of CNNs on image rank.
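To make "image rank" concrete, the following is a minimal sketch of truncating an image to rank k with the SVD. It is not the authors' code. The function name `low_rank_approx`, the random 32x32 array standing in for one grayscale image channel, and the choice k = 8 are all assumptions made for illustration.

```python
import numpy as np


def low_rank_approx(image, k):
    """Return the rank-k truncated SVD reconstruction of a 2-D array.

    Hypothetical helper for illustration; `image` is assumed to be a
    single channel (height x width) of floats.
    """
    # Thin SVD: image = u @ diag(s) @ vt, singular values sorted descending.
    u, s, vt = np.linalg.svd(image, full_matrices=False)
    # Clamp k to the valid range [0, number of singular values].
    k = max(0, min(k, s.size))
    # Keep only the k largest singular values and their vectors.
    return (u[:, :k] * s[:k]) @ vt[:k, :]


# Random data as a stand-in for one image channel (an assumption).
rng = np.random.default_rng(0)
image = rng.random((32, 32))

approx = low_rank_approx(image, 8)
singular_values = np.linalg.svd(image, compute_uv=False)

# Frobenius error of the truncation; by the Eckart-Young theorem it
# equals the norm of the discarded singular values.
error = np.linalg.norm(image - approx)
discarded = np.sqrt(np.sum(singular_values[8:] ** 2))
```

For a colour image, the same truncation could be applied to each channel separately. Feeding a network reconstructions at increasing k is one simple way to probe how its predictions depend on image rank.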
