A Sensitivity Analysis of Pathfinder: A Follow-up Study


Mar 20, 2013

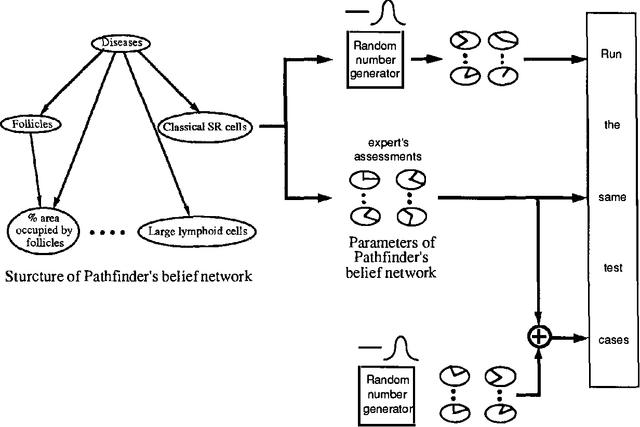

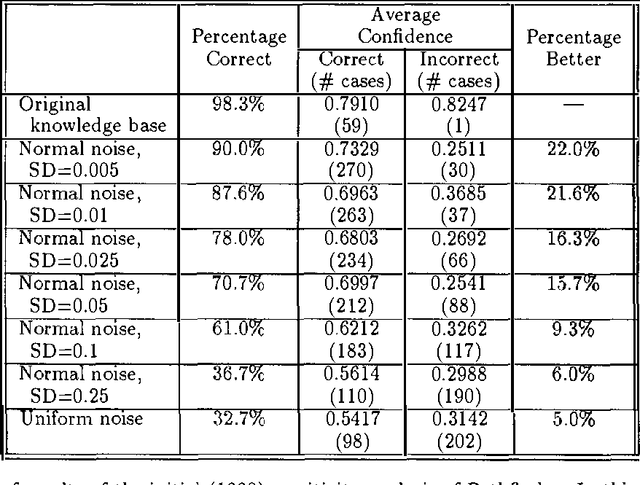

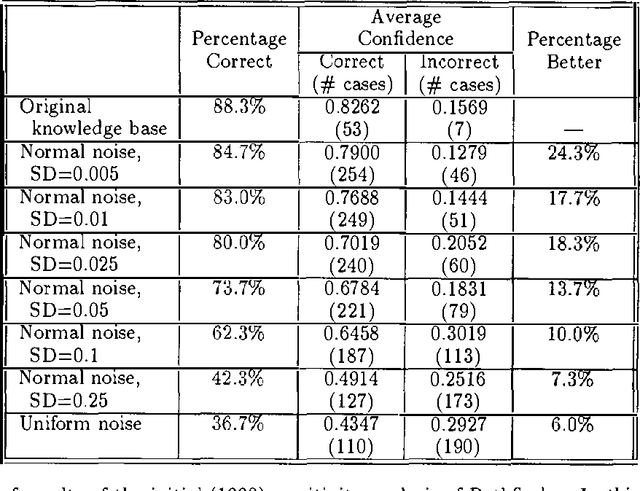

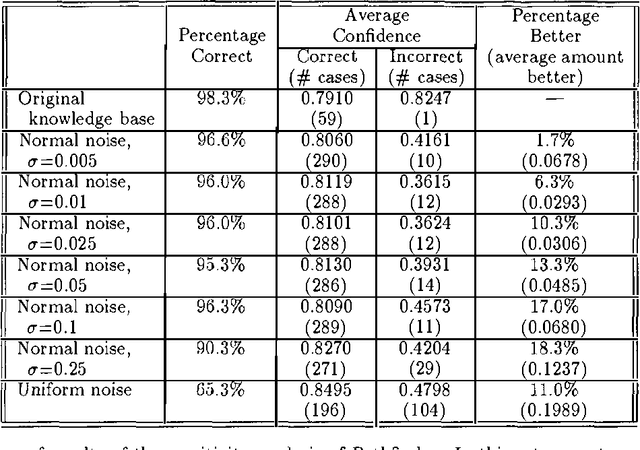

At last year's Uncertainty in AI Conference, we reported the results of a sensitivity analysis study of Pathfinder. Our findings were quite unexpected: slight variations to Pathfinder's parameters appeared to lead to substantial degradations in system performance. A careful look at our first analysis, together with the valuable feedback provided by the participants of last year's conference, led us to conduct a follow-up study. The follow-up differs from the initial study in two ways: (i) the probabilities 0.0 and 1.0 were left unchanged, and (ii) the variations applied to probabilities close to either end (0.0 or 1.0) were smaller than those applied to probabilities close to the middle (0.5). The results of the follow-up study look more reasonable: slight variations to Pathfinder's parameters now have little effect on its performance. Taken together, these two sets of results suggest a viable extension of a common decision-analytic sensitivity analysis to the larger, more complex settings generally encountered in artificial intelligence.
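The abstract does not say how the variations were generated. As a minimal sketch of one perturbation scheme that satisfies both stated constraints, the Python below adds noise in log-odds space. This choice is an assumption made for illustration, not the authors' confirmed method, and the names `shift_log_odds`, `perturb`, and `sigma` are hypothetical.

```python
import math
import random


def shift_log_odds(p, delta):
    """Shift probability p by delta in log-odds space.

    Assumption for illustration: the study's actual noise model is not
    given in the abstract. Probabilities of exactly 0.0 or 1.0 are
    returned unchanged, matching constraint (i).
    """
    if p <= 0.0 or p >= 1.0:
        return p
    logit = math.log(p / (1.0 - p))
    # The logistic function maps the shifted log-odds back into (0, 1).
    return 1.0 / (1.0 + math.exp(-(logit + delta)))


def perturb(p, sigma, rng):
    """Apply zero-mean Gaussian noise (std. dev. sigma) in log-odds space."""
    return shift_log_odds(p, rng.gauss(0.0, sigma))


if __name__ == "__main__":
    rng = random.Random(0)  # fixed seed so repeated runs are comparable
    for p in (0.0, 0.01, 0.5, 0.99, 1.0):
        print(p, perturb(p, 0.5, rng))
```

Because the logistic function is steepest at 0.5 and flattens toward 0.0 and 1.0, the same log-odds shift moves a probability near the middle further than one near either end, which is the behavior described in constraint (ii).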
