A Scalable Continuous Unbounded Optimisation Benchmark Suite from Neural Network Regression

Sep 12, 2021

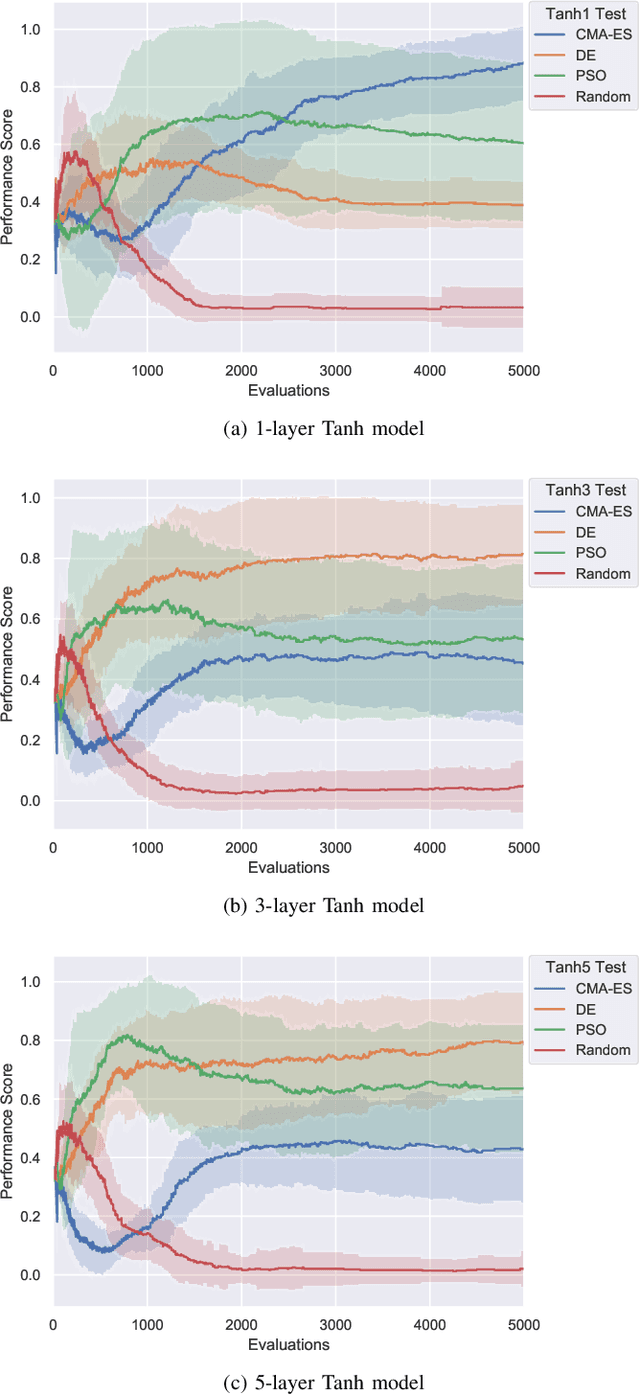

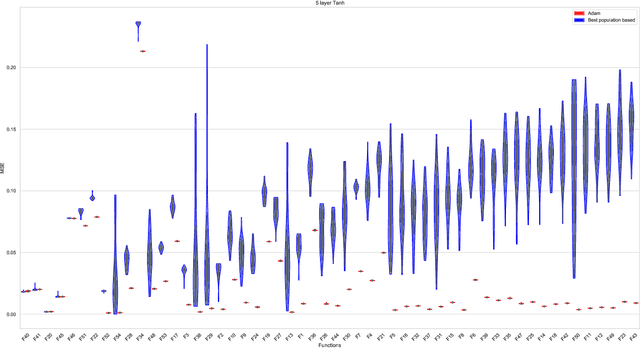

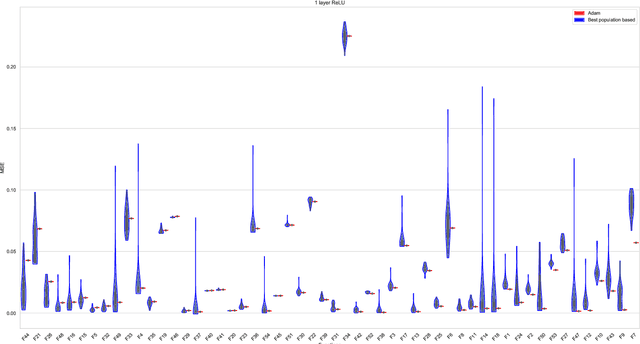

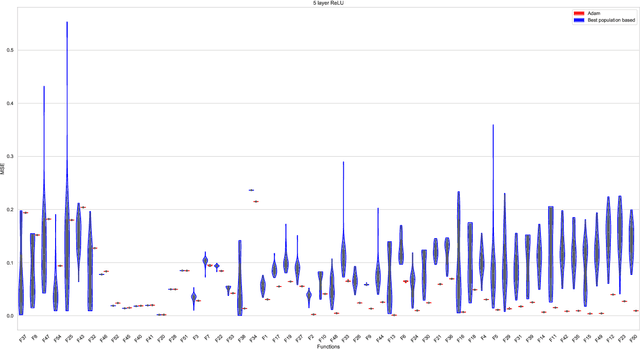

Designing optimisation algorithms that perform well in general requires experimenting with, and benchmarking on, a range of problems with diverse characteristics. Training neural networks is an optimisation task that has gained prominence with the recent successes of deep learning. Although evolutionary algorithms have been used to train neural networks, gradient descent variants are by far the most common choice because of their reliably good performance on large-scale machine learning tasks. In this paper we contribute CORNN (Continuous Optimisation of Regression tasks using Neural Networks), a large suite that can easily be used to benchmark the performance of any continuous black-box algorithm on neural network training problems. By combining different base regression functions with different neural network architectures, problem instances of varying dimension and difficulty can be created. We demonstrate the use of the CORNN Suite by comparing the performance of three evolutionary and swarm-based algorithms on a set of over 300 problem instances. The results provide evidence of performance complementarity between the algorithms, with the exception of random search. As a baseline, we also contrast the performance of the best population-based algorithm with that of a gradient-based approach (Adam). The suite is shared as a public web repository to facilitate easy integration with existing benchmarking platforms.
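
The idea of building a problem instance from a base regression function and a network architecture can be sketched as follows. The optimiser sees only a flat, unbounded vector of network weights and the resulting training error. This is a minimal illustration, not the CORNN suite's code or API: the base function `sin(x1) * cos(x2)`, uniform input sampling on [-2, 2], tanh hidden layers with a linear output, mean squared error as the loss, the `[2, 5, 5, 1]` architecture, and the Gaussian random search baseline are all hypothetical choices made for this example.

```python
import math
import random
from typing import Callable, List, Sequence, Tuple

Point = Tuple[float, float]


def base_function(x1: float, x2: float) -> float:
    """Hypothetical base regression function (not one of the suite's own)."""
    return math.sin(x1) * math.cos(x2)


def make_dataset(f: Callable[[float, float], float], n: int, low: float,
                 high: float, seed: int) -> List[Tuple[Point, float]]:
    """Sample n inputs uniformly from [low, high]^2 and label them with f."""
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        x = (rng.uniform(low, high), rng.uniform(low, high))
        data.append((x, f(*x)))
    return data


def weight_count(layers: Sequence[int]) -> int:
    """Problem dimension: one weight per connection plus one bias per neuron."""
    return sum(n_in * n_out + n_out for n_in, n_out in zip(layers[:-1], layers[1:]))


def forward(weights: Sequence[float], layers: Sequence[int], x: Sequence[float]) -> float:
    """Feed-forward pass with tanh hidden layers and a linear output neuron."""
    idx = 0
    activ = list(x)
    last = len(layers) - 2  # index of the transition into the output layer
    for k, (n_in, n_out) in enumerate(zip(layers[:-1], layers[1:])):
        nxt = []
        for _ in range(n_out):
            # Each neuron reads n_in weights followed by one bias from the flat vector.
            s = sum(w * a for w, a in zip(weights[idx:idx + n_in], activ))
            s += weights[idx + n_in]
            idx += n_in + 1
            nxt.append(s if k == last else math.tanh(s))
        activ = nxt
    return activ[0]


def make_objective(data: List[Tuple[Point, float]],
                   layers: Sequence[int]) -> Callable[[Sequence[float]], float]:
    """Black-box objective: mean squared error for a given flat weight vector."""
    dim = weight_count(layers)

    def mse(weights: Sequence[float]) -> float:
        if len(weights) != dim:
            raise ValueError(f"expected {dim} weights, got {len(weights)}")
        return sum((forward(weights, layers, x) - y) ** 2 for x, y in data) / len(data)

    return mse


def random_search(objective: Callable[[Sequence[float]], float], dim: int,
                  evals: int, seed: int, scale: float = 1.0):
    """Baseline: sample weights from N(0, scale^2), since the domain is unbounded."""
    rng = random.Random(seed)
    best_w, best_f = None, math.inf
    for _ in range(evals):
        w = [rng.gauss(0.0, scale) for _ in range(dim)]
        fw = objective(w)
        if fw < best_f:
            best_w, best_f = w, fw
    return best_w, best_f


if __name__ == "__main__":
    layers = [2, 5, 5, 1]  # hypothetical: 2 inputs, two hidden layers of 5, 1 output
    data = make_dataset(base_function, n=100, low=-2.0, high=2.0, seed=0)
    objective = make_objective(data, layers)
    dim = weight_count(layers)
    print("problem dimension:", dim)  # 51 for this architecture
    _, best = random_search(objective, dim, evals=200, seed=1)
    print(f"best MSE found by random search: {best:.4f}")
```

In this sketch, changing `layers` or `base_function` yields a different instance: the dimension grows with the number of connections and biases, while the choice of target function changes the shape of the error landscape. Any continuous black-box optimiser could be substituted for `random_search`, because it interacts with the problem only through `objective`.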
