A Review of Intelligent Practices for Irrigation Prediction


Dec 07, 2016

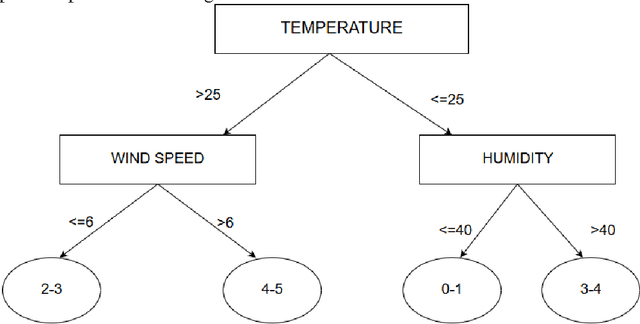

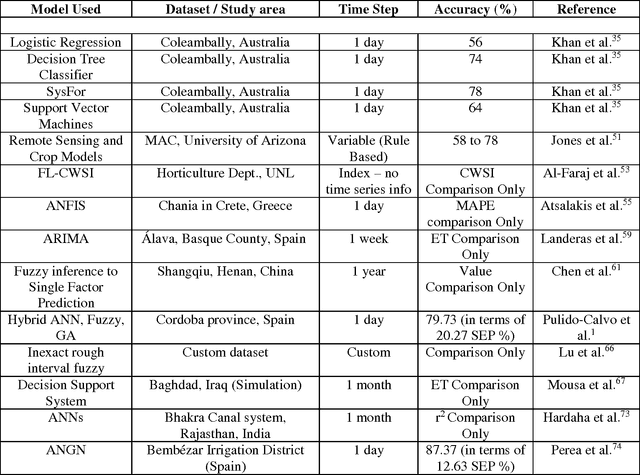

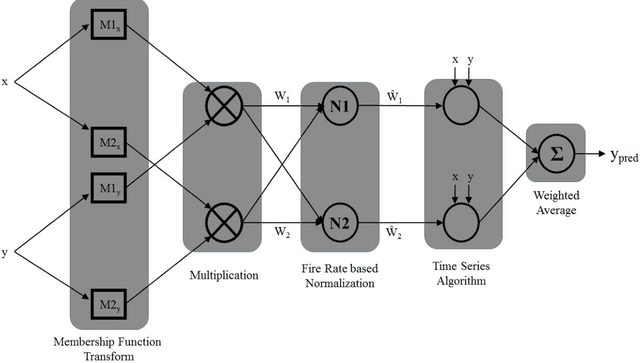

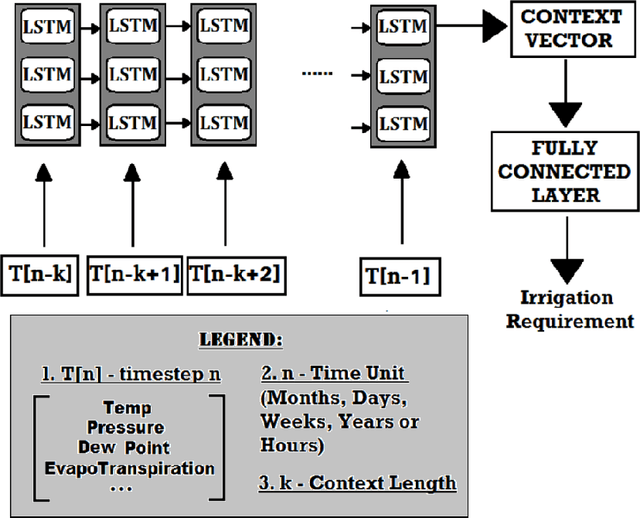

Population growth and increasing droughts are placing unprecedented strain on the continued availability of water resources. Since irrigation is a major consumer of fresh water, wastage in this sector can have serious consequences. To address this issue, irrigation water management and prediction techniques need to be employed effectively and must account for the variability present in the environment. The techniques surveyed in this paper fall into two categories: computational and statistical. Computational methods rely on scientific correlations between physical parameters, whereas statistical methods use prediction algorithms that can automate irrigation water prediction. These algorithms capture relationships between parameters such as temperature, pressure and evapotranspiration, and encode them as precomputed numerical values that are specific to the conditions and the area represented in their training data. We focus on reviewing the computational methods used to determine evapotranspiration and their implications. We compare the efficiency of the data mining and machine learning methods applied to irrigation prediction, such as Logistic Regression, Decision Tree Classifier, SysFor, Support Vector Machine (SVM), Fuzzy Logic techniques, Artificial Neural Networks (ANNs) and various hybrids of Genetic Algorithms (GA). We also recommend a possible technique for this task, based on its superior results in other time series analysis tasks.
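As an illustration of what a "computational" evapotranspiration method looks like, the sketch below computes daily reference evapotranspiration (ET0) with the FAO-56 Penman-Monteith equation. The abstract does not say which equations the review covers. Penman-Monteith is assumed here only because it is a widely used standard that combines the temperature, pressure and humidity parameters mentioned above. The function names and parameter names are hypothetical, and the input values are those of the Brussels summer-day worked example in FAO-56, not data from this paper.

```python
import math


def sat_vapour_pressure(t_c: float) -> float:
    """Saturation vapour pressure e°(T) in kPa for an air temperature in °C."""
    return 0.6108 * math.exp(17.27 * t_c / (t_c + 237.3))


def reference_et0(t_max: float, t_min: float, rh_max: float, rh_min: float,
                  u2: float, rn: float, elevation_m: float, g: float = 0.0) -> float:
    """Daily FAO-56 Penman-Monteith reference evapotranspiration in mm/day.

    Hypothetical helper: temperatures in °C, relative humidity in percent,
    u2 = wind speed at 2 m (m/s), rn and g = net radiation and soil heat
    flux in MJ m^-2 day^-1 (g is commonly taken as 0 for daily steps).
    """
    t_mean = (t_max + t_min) / 2.0
    # Slope of the saturation vapour pressure curve at the mean temperature (kPa/°C).
    delta = 4098.0 * sat_vapour_pressure(t_mean) / (t_mean + 237.3) ** 2
    # Atmospheric pressure estimated from elevation (kPa), then psychrometric constant (kPa/°C).
    pressure = 101.3 * ((293.0 - 0.0065 * elevation_m) / 293.0) ** 5.26
    gamma = 0.665e-3 * pressure
    # Mean saturation vapour pressure and actual vapour pressure from RH extremes (kPa).
    es = (sat_vapour_pressure(t_max) + sat_vapour_pressure(t_min)) / 2.0
    ea = (sat_vapour_pressure(t_min) * rh_max / 100.0
          + sat_vapour_pressure(t_max) * rh_min / 100.0) / 2.0
    # Radiation term plus aerodynamic (vapour pressure deficit) term.
    numerator = (0.408 * delta * (rn - g)
                 + gamma * (900.0 / (t_mean + 273.0)) * u2 * (es - ea))
    denominator = delta + gamma * (1.0 + 0.34 * u2)
    return numerator / denominator


if __name__ == "__main__":
    et0 = reference_et0(t_max=21.5, t_min=12.3, rh_max=84, rh_min=63,
                        u2=2.078, rn=13.28, elevation_m=100)
    print(f"ET0 = {et0:.1f} mm/day")  # prints "ET0 = 3.9 mm/day"
```

A statistical method from the list above would instead learn a mapping from such inputs, or from the ET0 they produce, to an irrigation decision or water quantity. That mapping is only valid for the conditions and area covered by its training data.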
