A non-cooperative meta-modeling game for automated third-party calibrating, validating, and falsifying constitutive laws with parallelized adversarial attacks


Apr 13, 2020

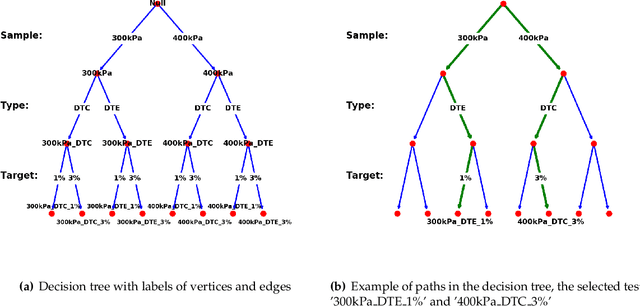

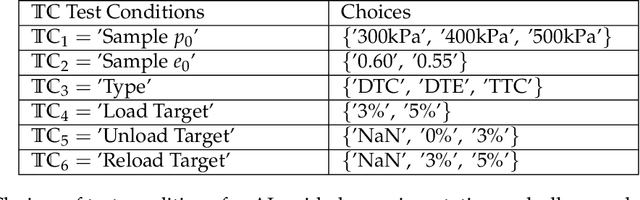

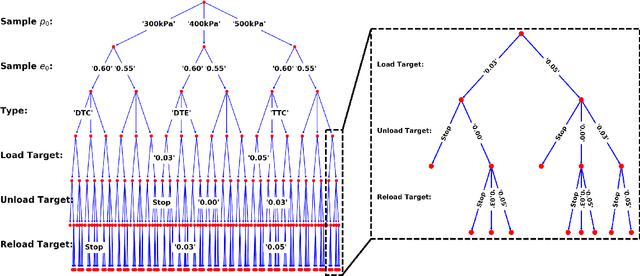

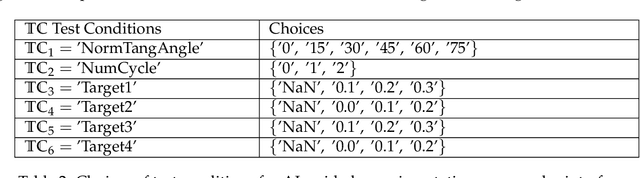

The evaluation of constitutive models, especially for high-risk and high-regret engineering applications, requires efficient and rigorous third-party calibration, validation and falsification. While there are numerous efforts to develop paradigms and standard procedures to validate models, difficulties may arise from the sequential, manual and often biased nature of the commonly adopted calibration and validation processes. These difficulties slow data collection, hamper progress towards discovering new physics, increase expenses and may lead to misinterpretations of the credibility and application ranges of proposed models. This work introduces concepts from game theory and machine learning to overcome many of these difficulties. We introduce an automated meta-modeling game in which two competing AI agents systematically generate experimental data, one to calibrate a given constitutive model and the other to explore its weaknesses, in order to improve experiment design and model robustness through competition. The two agents automatically search for the Nash equilibrium of the meta-modeling game in an adversarial reinforcement learning framework without human intervention. By capturing all possible design options of the laboratory experiments in a single decision tree, we recast the design of experiments as a game of combinatorial moves that can be resolved through deep reinforcement learning by the two competing players. Our adversarial framework emulates idealized scientific collaborations and competitions among researchers to achieve a better understanding of the application range of the learned material laws and to prevent misinterpretations caused by conventional AI-based third-party validation.
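To make the two-player idea concrete, the following is a minimal toy sketch and not the authors' method: it replaces the deep reinforcement learning and decision-tree search described above with an exhaustive search over a tiny payoff table. Every name and number in it (`E`, `B`, `STRAINS`, the quadratic "true" response, the one-parameter linear model) is a hypothetical assumption chosen for illustration. A protagonist picks one strain level at which to calibrate the model, and an adversary picks the strain level at which the calibrated model is tested, trying to maximize the prediction error.

```python
# Toy illustration only: the material response, constants, and candidate
# experiments below are hypothetical and are not taken from the paper.
E, B = 200.0, 1000.0                # assumed parameters of the "true" response
STRAINS = [0.01, 0.02, 0.03, 0.05]  # assumed menu of admissible experiments


def true_stress(eps):
    """Hypothetical ground-truth response with mild softening."""
    return E * eps - B * eps ** 2


def calibrate(eps_cal):
    """Fit the one-parameter linear model sigma = k * eps to a single test."""
    return true_stress(eps_cal) / eps_cal


def prediction_error(eps_cal, eps_test):
    """Absolute stress error of the calibrated model at the attacked strain."""
    k = calibrate(eps_cal)
    return abs(k * eps_test - true_stress(eps_test))


# Payoff table: rows are protagonist (calibration) moves,
# columns are adversary (attack) moves.
payoff = [[prediction_error(c, t) for t in STRAINS] for c in STRAINS]

# The protagonist minimizes the worst-case error the adversary can cause.
worst_case = [max(row) for row in payoff]
best_c = min(range(len(STRAINS)), key=lambda i: worst_case[i])

# The adversary's best response to that calibration choice.
attack_t = max(range(len(STRAINS)), key=lambda j: payoff[best_c][j])

print("calibrate at strain", STRAINS[best_c])
print("adversary attacks at strain", STRAINS[attack_t])
print("worst-case error", worst_case[best_c])
```

In this toy setting the adversary can always find a test strain where the linear model is wrong unless it attacks the calibration point itself, so the worst-case error stays positive. That gap is a crude stand-in for the application range the abstract refers to. The real framework searches a far larger combinatorial space of experiment designs, which is why it relies on reinforcement learning instead of enumeration.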
