A new soft computing method for integration of expert's knowledge in reinforcement learning problems

Paper and Code

Jun 13, 2021

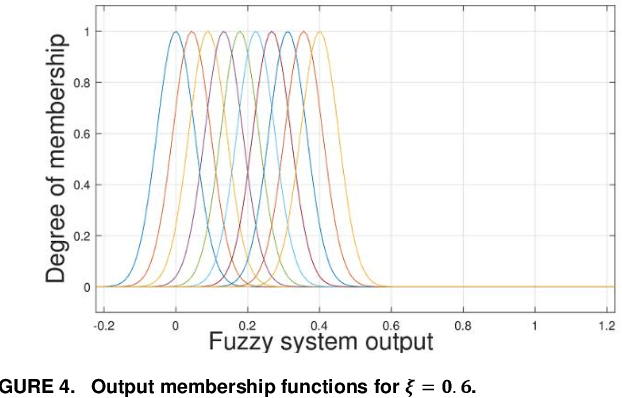

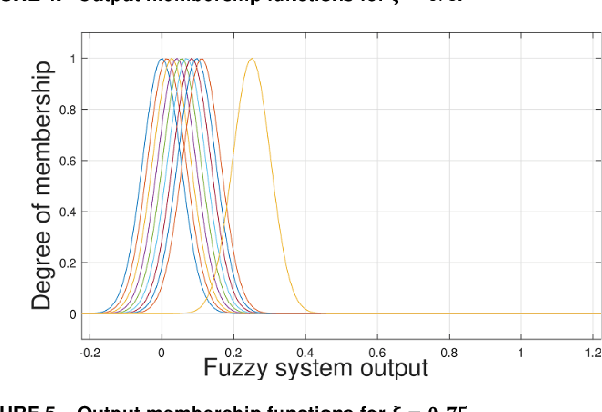

This paper proposes a novel fuzzy action selection method to leverage human knowledge in reinforcement learning problems. Based on the most recent estimates of the action-state values, the proposed fuzzy nonlinear mapping assigns each member of the action set a probability of being chosen in the next step. A user-tunable parameter is introduced to control the action selection policy, which determines how greedily the agent behaves throughout the learning process. This parameter plays a role similar to that of the temperature parameter in the softmax action selection policy, but its tuning can be more knowledge-oriented, since it carries human knowledge into the learning agent through modifications of the fuzzy rule base. Simulation results indicate that incorporating fuzzy logic into reinforcement learning in the proposed manner improves the learning algorithm's convergence rate and yields superior performance.
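For context, the softmax action selection policy that the abstract uses as its point of comparison can be sketched as below. This is a minimal illustration of that baseline only, not the paper's fuzzy mapping, whose rule base is not described here. The function names `softmax_probs` and `select_action` and the example values are assumptions chosen for illustration.

```python
import math
import random


def softmax_probs(q_values, temperature):
    """Map action-value estimates to selection probabilities (Boltzmann/softmax).

    Illustrative baseline only; the paper's fuzzy mapping is not reproduced here.
    """
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    # Subtract the maximum before exponentiating for numerical stability.
    q_max = max(q_values)
    exps = [math.exp((q - q_max) / temperature) for q in q_values]
    total = sum(exps)
    return [e / total for e in exps]


def select_action(q_values, temperature, rng=random):
    """Sample an action index according to the softmax probabilities."""
    probs = softmax_probs(q_values, temperature)
    return rng.choices(range(len(q_values)), weights=probs, k=1)[0]


if __name__ == "__main__":
    q = [1.0, 2.0, 3.0]  # hypothetical action-value estimates
    print(softmax_probs(q, temperature=0.1))    # low temperature: close to greedy
    print(softmax_probs(q, temperature=100.0))  # high temperature: close to uniform
    print(select_action(q, temperature=1.0))
```

A low temperature concentrates probability on the action with the highest estimate, while a high temperature spreads it almost evenly. This is the greedy-versus-exploratory trade-off that the abstract's tunable parameter is said to control.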
