A Neurodynamic Model of Saliency Prediction in V1


Dec 04, 2018

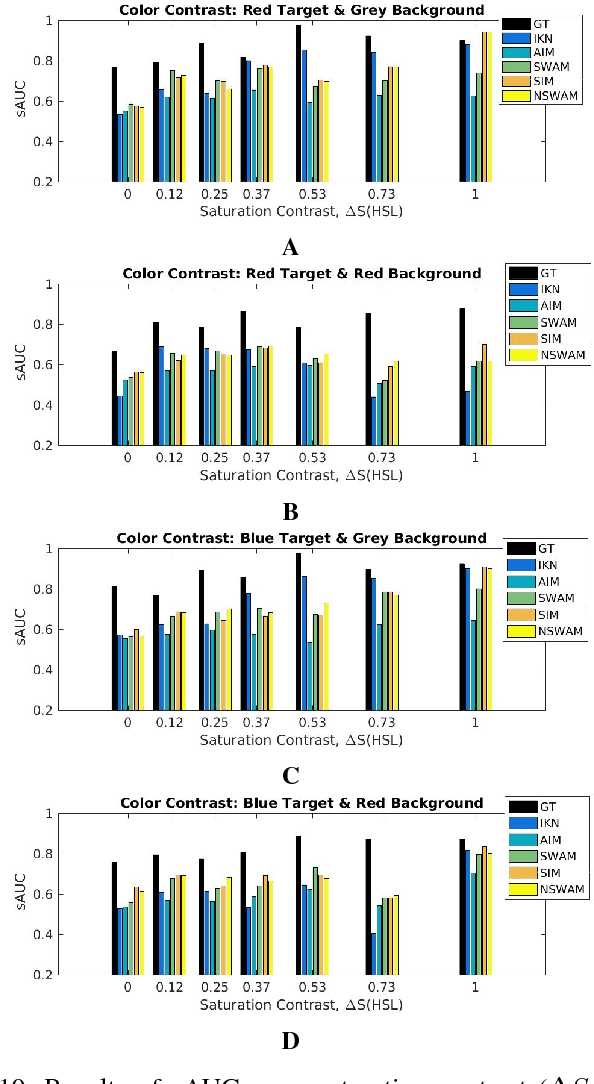

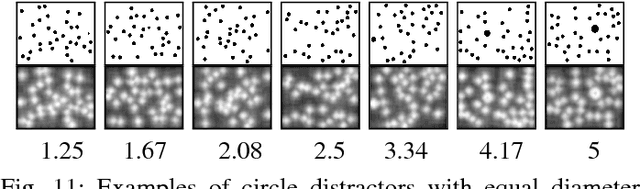

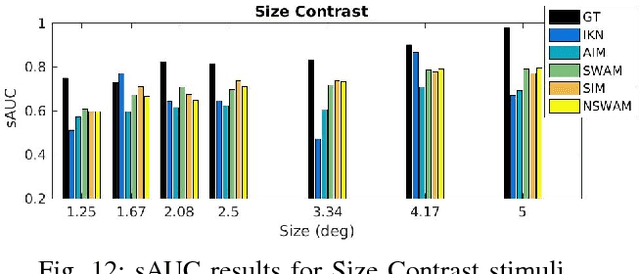

Computations in the primary visual cortex (area V1 or striate cortex) have long been hypothesized to be responsible, among several other visual processing mechanisms, for bottom-up visual attention (also called saliency). To validate this hypothesis, images from eye-tracking datasets are processed with a biologically plausible model of V1 that also reproduces other visual phenomena such as brightness induction, chromatic induction, and visual discomfort. Following Li's neurodynamic model, we model V1's lateral connections as a network of firing-rate neurons sensitive to visual features such as brightness, color, orientation, and scale. Saliency maps are then generated from the model output, which represents the neuronal activity of V1 projections toward brain areas involved in eye-movement control. Our predictions are supported by eye-tracking experiments, and the results show an improvement over previous models as well as consistency with human psychophysics. We propose a unified computational architecture of the primary visual cortex that models several visual processes without any training or optimization, keeping the same parametrization throughout.
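To make the firing-rate idea more concrete, here is a toy sketch in Python. It is not the authors' implementation. It assumes a heavily simplified version of Li's model: a 1-D ring of locations, two orientation channels, one excitatory unit (`x`) and one inhibitory interneuron (`y`) per channel and location, and iso-orientation suppression from same-channel neighbors. The function names (`simulate`, `saliency_map`), the rectifying nonlinearities, and every parameter value are hypothetical choices made for illustration. Brightness, color, and scale channels, as well as excitatory collinear connections, are omitted. The readout follows Li's V1 saliency hypothesis, in which the saliency of a location is the highest firing rate among the cells at that location.

```python
"""Toy sketch of a Li-style V1 firing-rate network with iso-orientation suppression.

Hypothetical simplification: 1-D ring of locations, discrete orientation
channels, excitatory (x) and inhibitory (y) units, forward Euler integration.
"""


def g_x(x, threshold=1.0):
    # Excitatory firing rate: zero below threshold, linear above, saturating at 1.
    return min(max(x - threshold, 0.0), 1.0)


def g_y(y):
    # Inhibitory interneuron rate: simple rectification (an assumption).
    return max(y, 0.0)


def simulate(inputs, n_channels, radius=2, w_inh=0.5, dt=0.1, steps=500):
    """Integrate the network; inputs[i][k] is the drive to channel k at location i."""
    n = len(inputs)
    x = [[0.0] * n_channels for _ in range(n)]
    y = [[0.0] * n_channels for _ in range(n)]
    for _ in range(steps):
        rates = [[g_x(v) for v in row] for row in x]
        new_x, new_y = [], []
        for i in range(n):
            row_x, row_y = [], []
            for k in range(n_channels):
                # Sum the rates of same-channel cells within `radius` on the ring:
                # these iso-feature lateral inputs excite the local interneuron.
                lateral = sum(
                    rates[(i + d) % n][k]
                    for d in range(-radius, radius + 1)
                    if d != 0
                )
                # Excitatory cell: leak, inhibition from its interneuron, feedforward drive.
                dx = -x[i][k] - g_y(y[i][k]) + inputs[i][k]
                # Interneuron: leak, input from its own cell and from lateral neighbors.
                dy = -y[i][k] + rates[i][k] + w_inh * lateral
                row_x.append(x[i][k] + dt * dx)
                row_y.append(y[i][k] + dt * dy)
            new_x.append(row_x)
            new_y.append(row_y)
        x, y = new_x, new_y
    return [[g_x(v) for v in row] for row in x]


def saliency_map(rates):
    # Li's V1 saliency hypothesis: saliency at a location is the
    # maximum firing rate over all cells sharing that location.
    return [max(row) for row in rates]


if __name__ == "__main__":
    n_loc, n_ch, odd_one = 12, 2, 6
    # Every location holds a bar driving channel 0, except one odd bar in channel 1.
    drive = [[2.0, 0.0] for _ in range(n_loc)]
    drive[odd_one] = [0.0, 2.0]
    sal = saliency_map(simulate(drive, n_ch))
    print([round(s, 3) for s in sal])
    print("most salient location:", sal.index(max(sal)))
```

Because the odd bar receives no iso-orientation suppression from its neighbors, its excitatory unit settles at a higher rate than the homogeneous background, so the max-over-channels readout marks it as the most salient location.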
