A Multi-Task Learning Approach for Human Action Detection and Ergonomics Risk Assessment


Aug 07, 2020

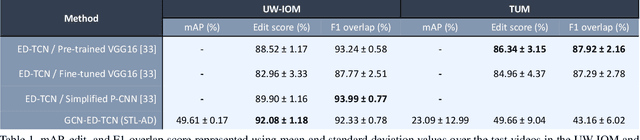

We propose a new approach to Human Action Evaluation (HAE) in long videos using graph-based multi-task modeling. Previous works in activity assessment either compute a metric directly from a detected skeleton or use scene information to regress the activity score. These approaches are insufficient for accurate activity assessment because they compute only an average score over a clip and do not consider the correlation between joints and body dynamics. Moreover, they are highly scene-dependent, which makes their generalizability questionable. We propose a novel multi-task framework for HAE that uses a Graph Convolutional Network (GCN) backbone to embed the interconnections between human joints in the features. In this framework, we solve the Human Action Detection (HAD) problem as an auxiliary task to improve activity assessment. The HAD head uses an Encoder-Decoder Temporal Convolutional Network to detect activities in long videos, and the HAE head uses a Long Short-Term Memory (LSTM)-based architecture. We evaluate our method on the UW-IOM and TUM Kitchen datasets and discuss the success and failure cases on these two datasets.
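To make the idea of embedding joint interconnections concrete, the following is a minimal sketch of a single graph convolution step over a skeleton, assuming the standard symmetric-normalized propagation rule ReLU(D^-1/2 (A + I) D^-1/2 X W). The abstract does not specify the backbone's exact layer, so the 5-joint toy skeleton, the weight values, and the names `EDGES`, `normalized_adjacency`, and `gcn_layer` are all hypothetical and chosen only for illustration.

```python
import math

# Hypothetical 5-joint toy skeleton (NOT the paper's joint layout):
# 0 = pelvis, 1 = spine, 2 = head, 3 = left hand, 4 = right hand
EDGES = [(0, 1), (1, 2), (1, 3), (1, 4)]
NUM_JOINTS = 5


def normalized_adjacency(edges, n):
    """Build D^-1/2 (A + I) D^-1/2 as a nested list of floats."""
    # Start from the identity so every joint keeps its own features.
    a = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    for i, j in edges:
        a[i][j] = a[j][i] = 1.0  # bones are undirected
    deg = [sum(row) for row in a]
    return [[a[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(n)]
            for i in range(n)]


def matmul(x, y):
    """Plain nested-list matrix product."""
    return [[sum(x[i][k] * y[k][j] for k in range(len(y)))
             for j in range(len(y[0]))]
            for i in range(len(x))]


def gcn_layer(a_hat, features, weight):
    """One graph convolution: ReLU(a_hat @ features @ weight)."""
    out = matmul(matmul(a_hat, features), weight)
    return [[max(0.0, v) for v in row] for row in out]


# One frame of made-up 2D joint coordinates (x, y), one row per joint.
frame = [[0.0, 0.0], [0.0, 0.5], [0.0, 1.0], [-0.4, 0.5], [0.4, 0.5]]
# Arbitrary 2 -> 3 projection; in a trained network this would be learned.
weight = [[1.0, 0.0, 0.5], [0.0, 1.0, -0.5]]

a_hat = normalized_adjacency(EDGES, NUM_JOINTS)
embedded = gcn_layer(a_hat, frame, weight)  # 5 joints x 3 features
```

Each row of `embedded` mixes a joint's own coordinates with those of the joints it is connected to, which is the sense in which a graph convolution encodes skeleton structure. In a multi-task setup like the one described, per-frame embeddings of this kind would be shared by the detection and assessment heads.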
