A Methodology to Identify Cognition Gaps in Visual Recognition Applications Based on Convolutional Neural Networks


Oct 05, 2021

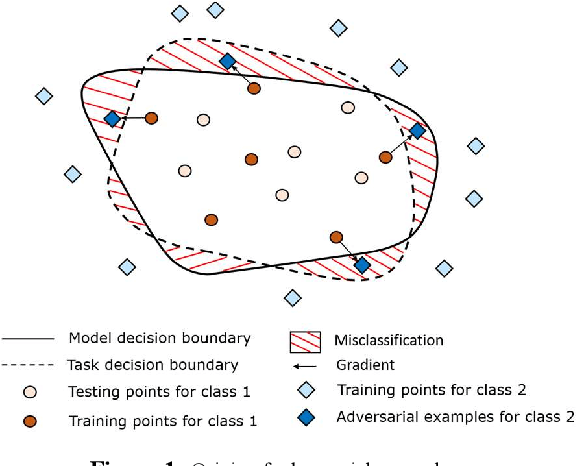

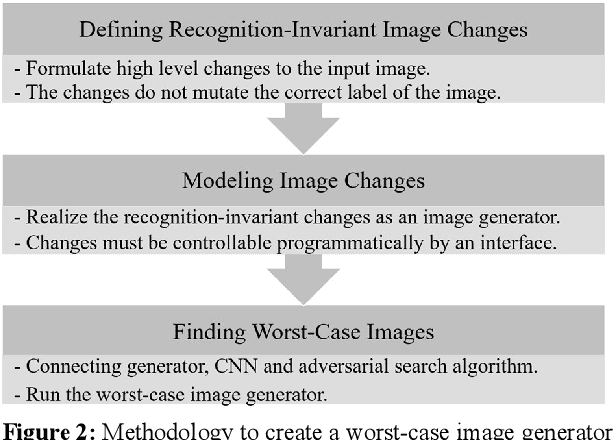

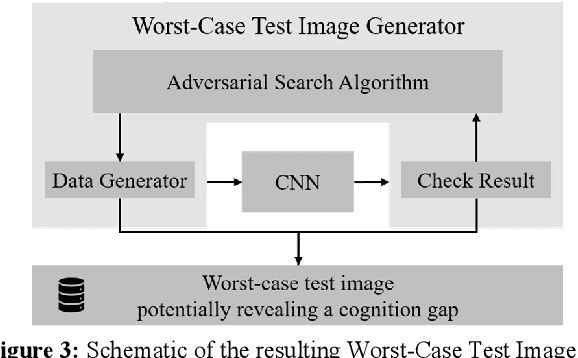

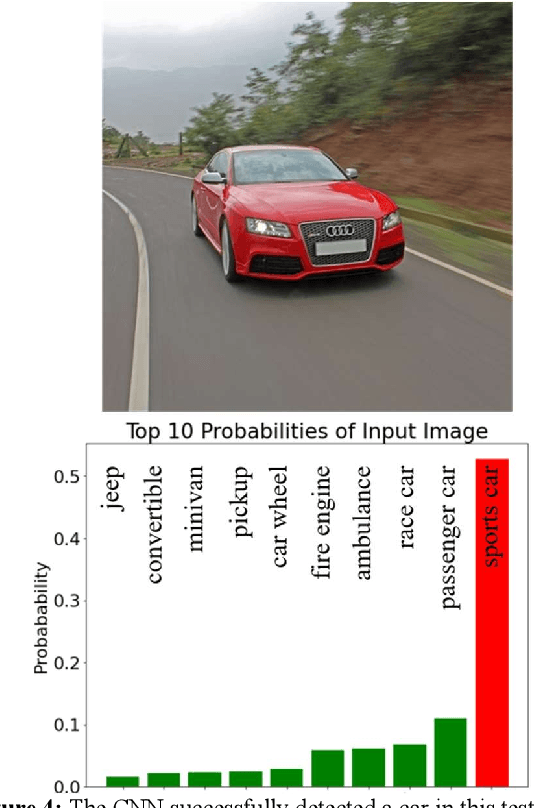

Developing consistently well-performing visual recognition applications based on convolutional neural networks (CNNs), e.g. for autonomous driving, is very challenging. One obstacle during development is the opaqueness of their cognitive behaviour. A considerable body of literature describes irrational behaviour of trained CNNs, showcasing gaps in their cognition. In this paper, a methodology is presented that creates worst-case images using image augmentation techniques. If the CNN's cognitive performance on such images is weak while the augmentation techniques are supposedly harmless, a potential gap in the cognition has been found. The presented worst-case image generator uses adversarial search approaches to efficiently identify the most challenging image. The methodology is evaluated with the well-known AlexNet CNN using images depicting a typical driving scenario.
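To make the idea of a worst-case image generator concrete, the following is a minimal sketch, not the paper's implementation. The abstract does not name the search algorithm or the augmentations, so this sketch substitutes a simple greedy random local search over two assumed augmentation parameters (brightness and contrast). The functions `augment`, `toy_confidence`, and `worst_case_search` are hypothetical names, and `toy_confidence` is a stand-in for a real CNN such as AlexNet.

```python
import numpy as np

def augment(image, brightness, contrast):
    """Apply a brightness shift and a contrast scaling; pixels stay in [0, 1]."""
    mean = image.mean()
    out = (image - mean) * contrast + mean + brightness
    return np.clip(out, 0.0, 1.0)

def toy_confidence(image):
    """Hypothetical stand-in for a CNN's confidence in the correct class.

    Assumption for illustration only: confidence drops as the mean
    intensity moves away from 0.5.
    """
    return float(np.exp(-8.0 * (image.mean() - 0.5) ** 2))

def worst_case_search(image, score_fn, bounds, steps=200, step_size=0.05, seed=0):
    """Greedy random local search for the augmentation parameters that
    minimize score_fn, restricted to the 'supposedly harmless' bounds."""
    rng = np.random.default_rng(seed)
    low = np.array([b[0] for b in bounds])
    high = np.array([b[1] for b in bounds])
    params = (low + high) / 2.0  # start at the centre of the allowed range
    best = score_fn(augment(image, *params))
    for _ in range(steps):
        # Propose a nearby parameter set and keep it only if it hurts more.
        candidate = np.clip(
            params + rng.normal(0.0, step_size, size=params.shape), low, high
        )
        score = score_fn(augment(image, *candidate))
        if score < best:
            params, best = candidate, score
    return params, best

# Synthetic grey-scale "image"; a real study would use driving scenes.
image = np.random.default_rng(42).uniform(0.3, 0.7, size=(32, 32))
bounds = [(-0.2, 0.2), (0.8, 1.2)]  # assumed ranges: brightness, contrast

baseline = toy_confidence(image)
params, worst = worst_case_search(image, toy_confidence, bounds)
print(f"baseline confidence:   {baseline:.3f}")
print(f"worst-case confidence: {worst:.3f} at brightness={params[0]:.3f}, contrast={params[1]:.3f}")
```

A large drop from the baseline to the worst-case confidence, found within bounds that a human would consider harmless, is the kind of signal the abstract describes as a potential cognition gap.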
