A Mathematical Theory of Human Machine Teaming

May 08, 2017

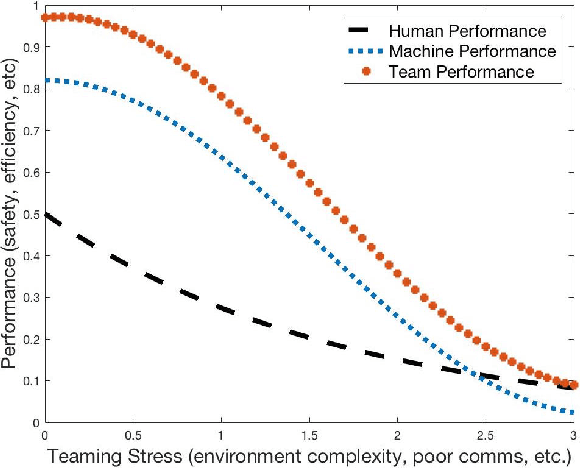

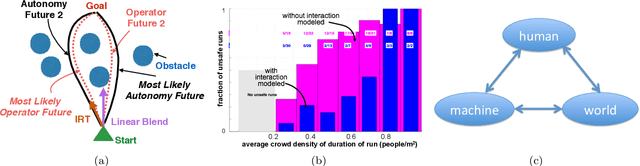

We begin with a disquieting paradox: human machine teaming (HMT) often produces results worse than either the human or machine would produce alone. Critically, this failure is not a result of inferior human modeling or a suboptimal autonomy: even with perfect knowledge of human intention and perfect autonomy performance, prevailing teaming architectures still fail under trivial stressors [trautman-smc-2015]. This failure is instead a result of deficiencies at the *decision fusion level*. Accordingly, *efforts aimed solely at improving human prediction or improving autonomous performance will not produce acceptable HMTs: we can no longer model humans, machines and adversaries as distinct entities.* We thus argue for a strong but essential condition: HMTs should perform no worse than either member of the team alone, and this performance bound should be independent of environment complexity, human-machine interfacing, accuracy of the human model, or reliability of autonomy or human decision making.
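
The condition stated in the abstract can be written compactly. As a sketch only, assume a scalar performance measure $P$ (higher is better) evaluated in an environment $e$ drawn from a set $\mathcal{E}$ of operating conditions. The symbols $P$, $e$, $\mathcal{E}$ and the subscripts are illustrative notation introduced here, not taken from the paper:

$$
P_{\mathrm{team}}(e) \;\ge\; \max\bigl\{ P_{\mathrm{human}}(e),\; P_{\mathrm{machine}}(e) \bigr\} \quad \text{for all } e \in \mathcal{E}.
$$

Under this reading, the paradox described in the opening sentence corresponds to environments where $P_{\mathrm{team}}(e) < \min\{P_{\mathrm{human}}(e),\, P_{\mathrm{machine}}(e)\}$, and the independence requirement says the bound should hold regardless of interface quality, human-model accuracy, or the reliability of either team member.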
