A Learning-based Optimal Market Bidding Strategy for Price-Maker Energy Storage

Paper and Code

Jun 04, 2021

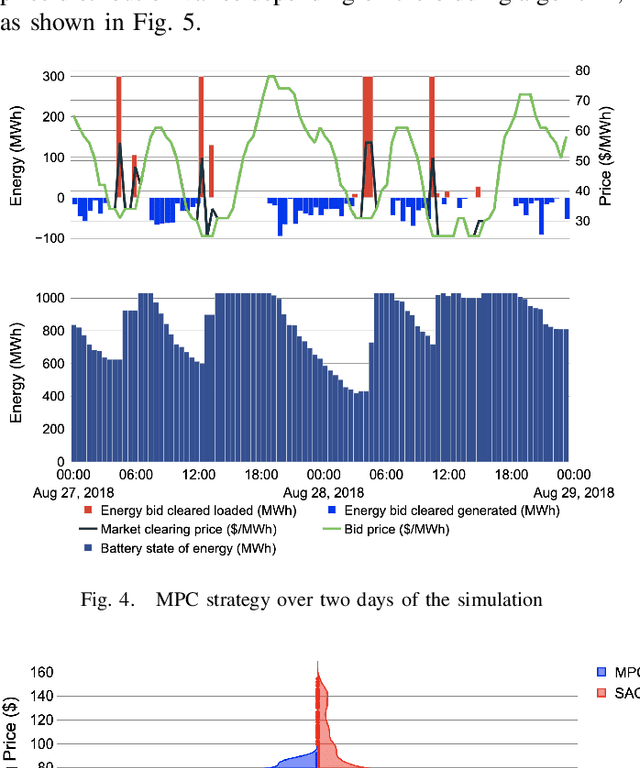

Load serving entities with storage units reach sizes and performances that can significantly impact clearing prices in electricity markets. Nevertheless, price endogeneity is rarely considered in storage bidding strategies and modeling the electricity market is a challenging task. Meanwhile, model-free reinforcement learning such as the Actor-Critic are becoming increasingly popular for designing energy system controllers. Yet implementation frequently requires lengthy, data-intense, and unsafe trial-and-error training. To fill these gaps, we implement an online Supervised Actor-Critic (SAC) algorithm, supervised with a model-based controller -- Model Predictive Control (MPC). The energy storage agent is trained with this algorithm to optimally bid while learning and adjusting to its impact on the market clearing prices. We compare the supervised Actor-Critic algorithm with the MPC algorithm as a supervisor, finding that the former reaps higher profits via learning. Our contribution, thus, is an online and safe SAC algorithm that outperforms the current model-based state-of-the-art.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge