A Joint-Reasoning based Disease Q&A System


Jan 06, 2024

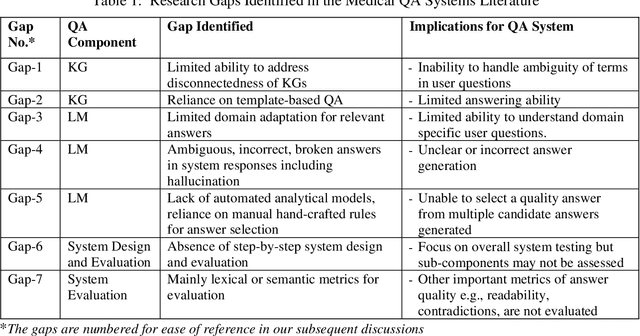

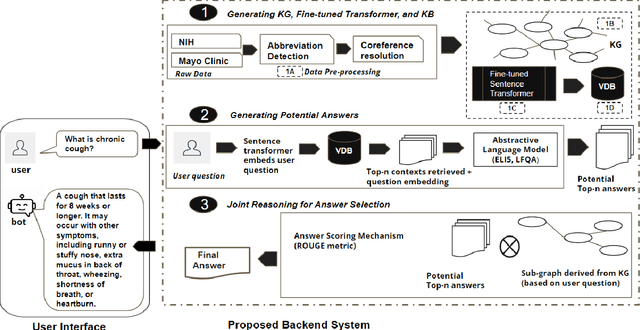

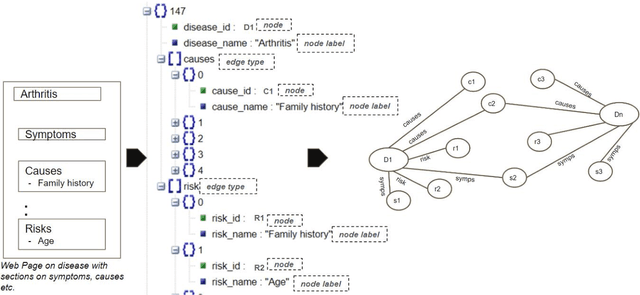

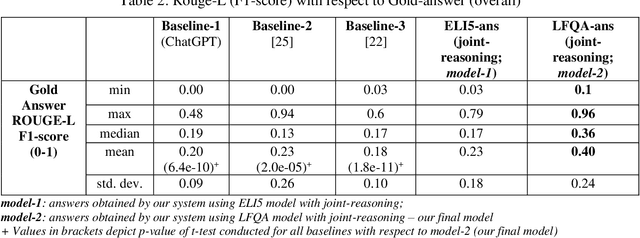

Medical question-answering (QA) assistants respond to lay users' health-related queries by synthesizing information from multiple sources using natural language processing and related techniques. They can serve as vital tools for alleviating misinformation, information overload, and the complexity of medical language, thus addressing lay users' information needs while reducing the burden on healthcare professionals. QA systems, the engines of such assistants, have typically used either language models (LMs) or knowledge graphs (KGs), though the two approaches could be complementary. LM-based QA systems excel at understanding complex questions and providing well-formed answers, but are prone to factual mistakes. KG-based QA systems represent facts well, but are mostly limited to answering short-answer questions with pre-created templates. A few studies have used LM and KG approaches jointly for text-based QA, but only to answer multiple-choice questions. Extant QA systems also have limitations in terms of automation and performance. We address these challenges by designing a novel, automated disease QA system that effectively utilizes both LM and KG techniques through a joint-reasoning approach to answer disease-related questions in a form appropriate for lay users. Our evaluation of the system using a range of quality metrics demonstrates its efficacy over benchmark systems, including the popular ChatGPT.
