A game-theoretic approach for Generative Adversarial Networks


Mar 30, 2020

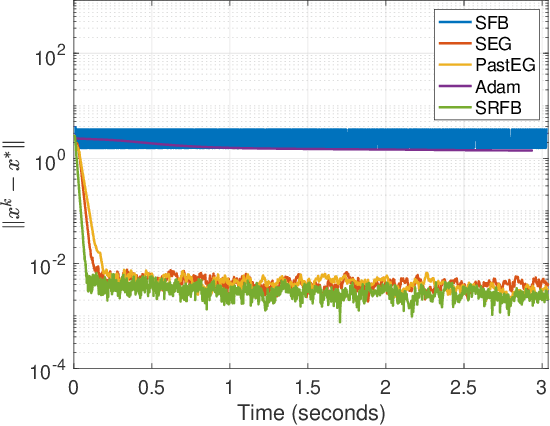

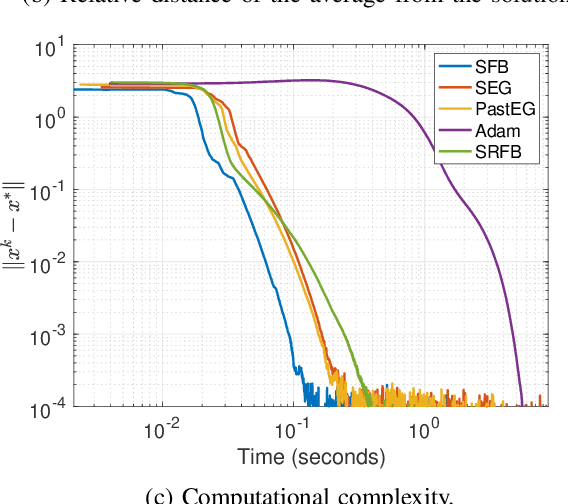

Generative adversarial networks (GANs) are a class of generative models known for producing accurate samples. Their key feature is a pair of antagonistic neural networks: the generator and the discriminator. The main bottleneck in implementing them is that these networks are very hard to train. One way to improve their performance is to design reliable algorithms for the adversarial process. Since training can be cast as a stochastic Nash equilibrium problem, we rewrite it as a variational inequality and introduce an algorithm to compute an approximate solution. Specifically, we propose a stochastic relaxed forward-backward algorithm for GANs. We prove that, when the pseudogradient mapping of the game is monotone, the algorithm converges to an exact solution or to a neighbourhood of one.
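To make the idea of a relaxed forward-backward iteration concrete, here is a minimal sketch on a toy two-player game, not the authors' implementation. Everything in it is an assumption for illustration: the abstract does not state the update rule, so the sketch assumes an averaging (relaxation) step followed by a projected forward step along a noisy pseudogradient. The game (min over x, max over y of x*y), the function names `pseudogradient`, `project` and `srfb`, the box-shaped feasible set, and the values of `step_size`, `delta` and `noise_std` are all hypothetical choices.

```python
import math
import random


def pseudogradient(x, y):
    """Pseudogradient F(x, y) = (y, -x) of the toy game min_x max_y x*y.

    This mapping is monotone; its unique equilibrium is (0, 0).
    """
    return y, -x


def project(v, bound):
    """Projection onto [-bound, bound], a stand-in for the feasible set."""
    return max(-bound, min(bound, v))


def srfb(steps, step_size=0.3, delta=0.618, noise_std=0.0, bound=1e6, seed=0):
    """Run the assumed relaxed forward-backward iteration.

    Returns the distance of the final iterate from the equilibrium (0, 0).
    """
    rng = random.Random(seed)
    x, y = 1.0, 1.0          # current iterate
    x_avg, y_avg = x, y      # relaxed (averaged) iterate
    for _ in range(steps):
        # Relaxation: convex combination of the iterate and the previous average.
        x_avg = (1.0 - delta) * x + delta * x_avg
        y_avg = (1.0 - delta) * y + delta * y_avg
        # Noisy pseudogradient evaluated at the current iterate
        # (assumed Gaussian noise standing in for mini-batch sampling).
        gx, gy = pseudogradient(x, y)
        gx += rng.gauss(0.0, noise_std)
        gy += rng.gauss(0.0, noise_std)
        # Forward step from the averaged point, then projection.
        x = project(x_avg - step_size * gx, bound)
        y = project(y_avg - step_size * gy, bound)
    return math.hypot(x, y)


if __name__ == "__main__":
    print("relaxed, no noise:  ", srfb(2000))
    print("relaxed, with noise:", srfb(2000, noise_std=0.05))
    print("delta = 0, no noise:", srfb(200, delta=0.0))
```

In this toy setting, `delta=0.0` reduces the loop to a plain projected forward step, which spirals away from the equilibrium on bilinear games, whereas the relaxed variant contracts towards it. With noise switched on, the iterate hovers near the equilibrium rather than reaching it, which is the kind of behaviour the neighbourhood-convergence statement refers to.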
