A Framework for Measuring Compositional Inductive Bias

Mar 06, 2021

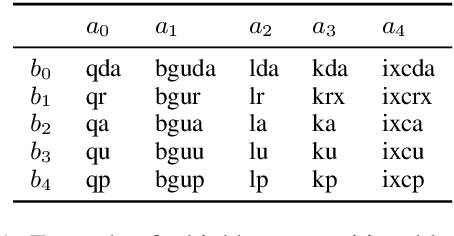

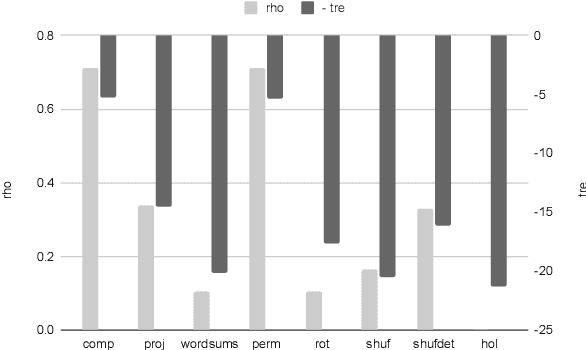

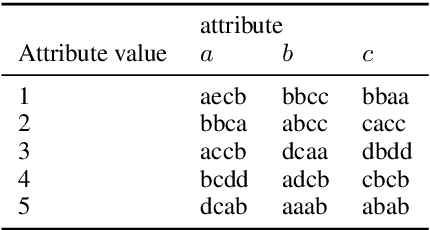

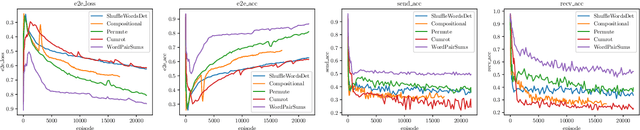

We present a framework for measuring the compositional inductive bias of models in the context of emergent communications. We devise corrupted compositional grammars that probe for limitations in the compositional inductive bias of frequently used models. We use these corrupted compositional grammars to compare and contrast a wide range of models, and to compare the choice of soft, Gumbel, and discrete representations. We propose a hierarchical model that might show an inductive bias towards relocatable atomic groups of tokens, thus potentially encouraging the emergence of words. We experiment with probing for the compositional inductive bias of sender and receiver networks both in isolation and placed end-to-end, as an auto-encoder.
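To make "compositional grammar" and "corrupted" concrete, here is a minimal sketch under toy assumptions. It is not the paper's actual set of grammars. Meanings are tuples of attribute values. A compositional grammar gives each (attribute, value) pair a fixed "word" of tokens and concatenates the words. Two hypothetical corruptions follow: `permuted` scatters each word's tokens across fixed positions, and `holistic` assigns arbitrary unique messages with no part-whole structure. All names and sizes (`NUM_ATTS`, `NUM_VALUES`, `VOCAB_SIZE`, `TOKENS_PER_ATT`, and the function names) are illustrative assumptions. A probing setup would presumably compare how readily a sender or receiver learns each mapping.

```python
import itertools
import random

# Hypothetical toy sizes (assumptions, not taken from the paper).
NUM_ATTS = 2        # attributes per meaning
NUM_VALUES = 3      # possible values per attribute
VOCAB_SIZE = 4      # distinct tokens
TOKENS_PER_ATT = 2  # tokens in each attribute's "word"


def all_meanings():
    """Enumerate every meaning as a tuple of attribute values."""
    return list(itertools.product(range(NUM_VALUES), repeat=NUM_ATTS))


def make_lexicon(rng):
    """Assign each (attribute, value) pair a fixed word; words for the
    same attribute are distinct, so the full mapping is injective."""
    candidates = list(itertools.product(range(VOCAB_SIZE), repeat=TOKENS_PER_ATT))
    lexicon = {}
    for att in range(NUM_ATTS):
        for value, word in enumerate(rng.sample(candidates, NUM_VALUES)):
            lexicon[(att, value)] = word
    return lexicon


def compositional(meaning, lexicon):
    """Concatenate the word for each attribute value, in attribute order."""
    return tuple(tok for att, value in enumerate(meaning) for tok in lexicon[(att, value)])


def permuted(meaning, lexicon, perm):
    """Hypothetical corruption: apply one fixed position permutation to every
    compositional message, so an attribute's tokens need not stay contiguous."""
    msg = compositional(meaning, lexicon)
    return tuple(msg[i] for i in perm)


def holistic(meanings, rng):
    """Hypothetical corruption: map each meaning to an arbitrary unique
    message, removing any relation between message parts and attributes."""
    length = NUM_ATTS * TOKENS_PER_ATT
    pool = list(itertools.product(range(VOCAB_SIZE), repeat=length))
    return dict(zip(meanings, rng.sample(pool, len(meanings))))


if __name__ == "__main__":
    rng = random.Random(0)
    meanings = all_meanings()
    lexicon = make_lexicon(rng)
    perm = list(range(NUM_ATTS * TOKENS_PER_ATT))
    rng.shuffle(perm)
    hol = holistic(meanings, rng)
    for m in meanings[:3]:
        print(m, compositional(m, lexicon), permuted(m, lexicon, perm), hol[m])
```

All three mappings are one-to-one, so each is learnable in principle; they differ only in how much the message structure mirrors the meaning structure. That difference is what a compositional inductive bias would be expected to exploit.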