A Fiber Measurement System with Approximate Deconvolution Based on the Analysis of Fault Clusters in Linearized Bregman Iterations

Paper and Code

Nov 04, 2021

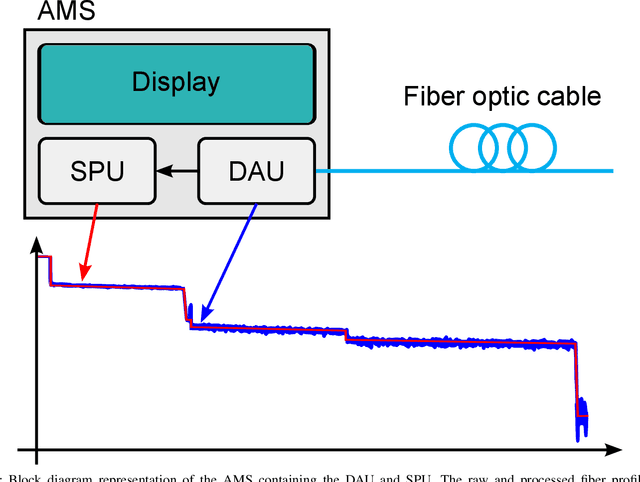

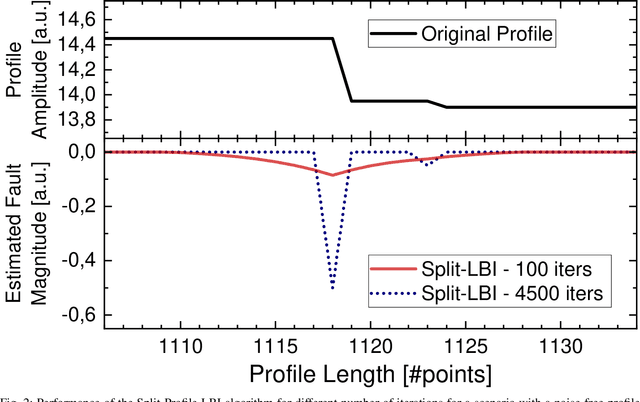

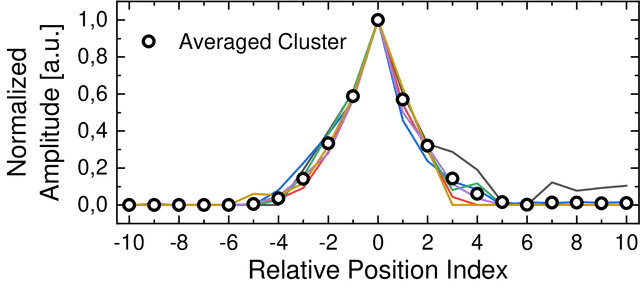

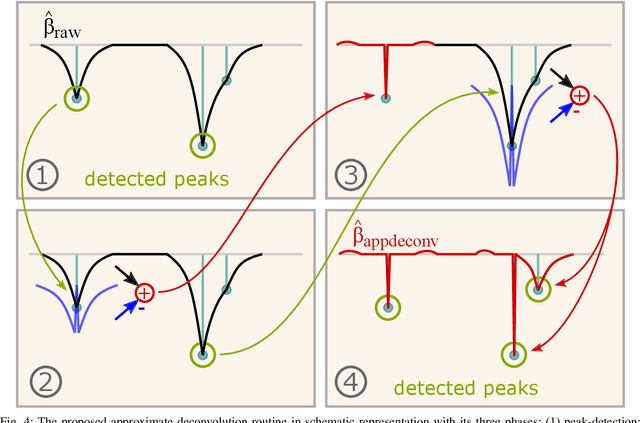

Automatic detection of faults in optical fibers is an active area of research that plays a significant role in the design of reliable and stable optical networks. A fiber measurement system that combines automated data acquisition and processing can have a disruptive impact on the management of optical fiber networks by providing fast and reliable event detection. It has been shown in the literature that the linearized Bregman iterations (LBI) algorithm and its variations can be successfully used to process a fiber profile and accurately identify faults in it. One of the factors that impacts the performance of these algorithms is the degradation of spatial resolution, which is mainly caused by the appearance of fault clusters when the number of iterations is reduced. In this paper, a method based on approximate deconvolution is proposed for increasing the spatial resolution; it is made possible by a thorough analysis of the fault clusters that appear in the algorithm's output. The effect of this approximate deconvolution is shown to extend beyond the improvement of spatial resolution, allowing better performance to be reached in shorter processing times. An efficient hardware architecture that implements the approximate deconvolution, compatible with the hardware structure recently presented for the LBI algorithm, is also proposed and discussed.
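For readers unfamiliar with LBI, the following is a minimal sketch of the generic linearized Bregman iteration for sparse recovery as it is commonly stated in the literature. It is not the fiber-specific variant, the approximate deconvolution step, or the hardware architecture from the paper. The function name `linearized_bregman`, its parameters (`mu`, `delta`, `n_iter`), and the default step size are illustrative assumptions.

```python
import numpy as np


def soft_threshold(v, mu):
    """Element-wise shrinkage: pull every entry toward zero by mu."""
    return np.sign(v) * np.maximum(np.abs(v) - mu, 0.0)


def linearized_bregman(A, b, mu=1.0, delta=None, n_iter=100):
    """Generic linearized Bregman iteration (illustrative sketch).

    Seeks a sparse x with A @ x close to b by iterating
        v <- v + A.T @ (b - A @ x)
        x <- delta * soft_threshold(v, mu)
    All parameter names and defaults here are assumptions, not
    values taken from the paper.
    """
    A = np.asarray(A, dtype=float)
    b = np.asarray(b, dtype=float)
    if delta is None:
        # Assumed conservative step size: inverse squared spectral norm of A.
        delta = 1.0 / np.linalg.norm(A, 2) ** 2
    x = np.zeros(A.shape[1])
    v = np.zeros(A.shape[1])
    for _ in range(n_iter):
        v = v + A.T @ (b - A @ x)          # accumulate back-projected residual
        x = delta * soft_threshold(v, mu)  # sparsify the accumulated estimate
    return x
```

With fewer iterations the estimate is cheaper to compute but coarser, which is the trade-off the abstract refers to when it links a reduced number of iterations to fault clusters. On a trivial problem where `A` is the identity matrix, this sketch reproduces a sparse `b` within a few iterations.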
