A Fast, Principled Working Set Algorithm for Exploiting Piecewise Linear Structure in Convex Problems

Paper and Code

Jul 20, 2018

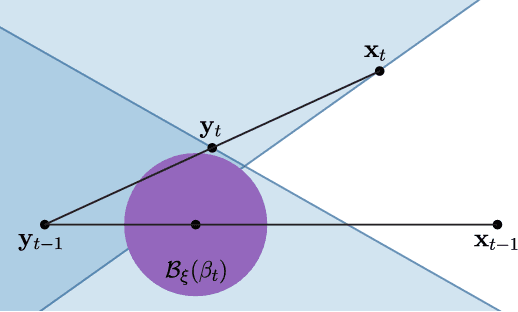

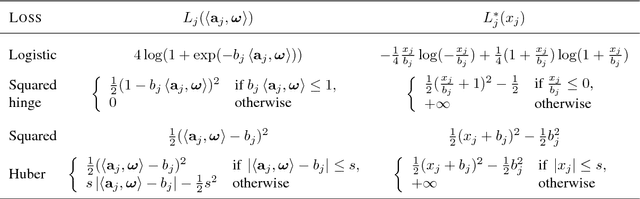

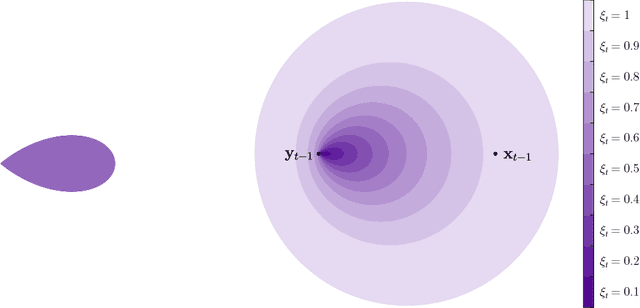

By reducing optimization to a sequence of smaller subproblems, working set algorithms achieve fast convergence times for many machine learning problems. Despite such performance, working set implementations often resort to heuristics to determine subproblem size, makeup, and stopping criteria. We propose BlitzWS, a working set algorithm with useful theoretical guarantees. Our theory relates subproblem size and stopping criteria to the amount of progress during each iteration. This result motivates strategies for optimizing algorithmic parameters and discarding irrelevant components as BlitzWS progresses toward a solution. BlitzWS applies to many convex problems, including training L1-regularized models and support vector machines. We showcase this versatility with empirical comparisons, which demonstrate BlitzWS is indeed a fast algorithm.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge