A differentiable short-time Fourier transform with respect to the window length


Aug 25, 2022

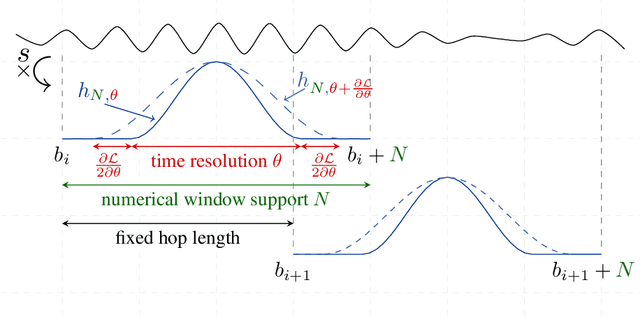

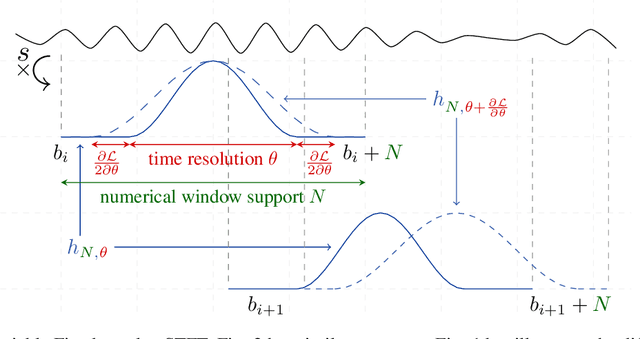

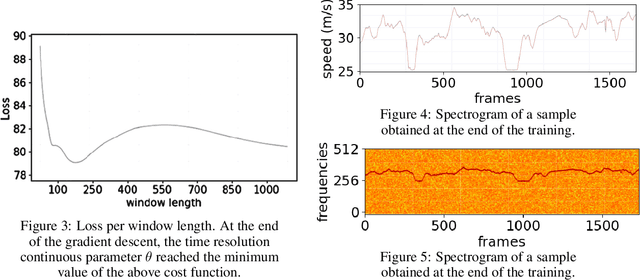

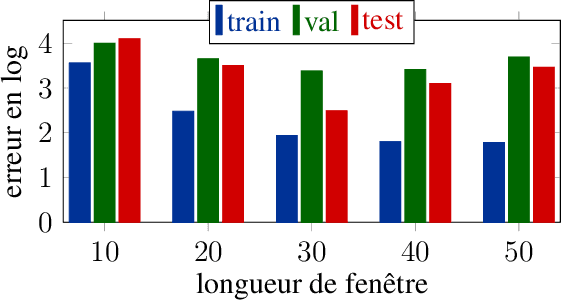

In this paper, we revisit the use of spectrograms in neural networks by making the window length a continuous parameter that can be optimized by gradient descent, instead of an empirically tuned integer-valued hyperparameter. The contribution is mostly theoretical at this point, but plugging the modified STFT into any existing neural network is straightforward. We first define a differentiable version of the STFT in the case where the local bin centers are fixed and independent of the window length parameter. We then discuss the more difficult case where the window length affects the position and number of bins. We illustrate the benefits of this new tool on an estimation problem and a classification problem, showing that it can be of interest not only to neural networks but to any STFT-based signal processing algorithm.
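To make the fixed-bin-center case concrete, here is a minimal sketch in plain Python. It is an illustration under stated assumptions and not the paper's implementation: the window is assumed to be Hann-shaped with a continuous length `theta`, evaluated on a fixed support of `support` samples, and the names `hann`, `dhann_dtheta`, `stft_frame`, and `energy_and_grad` are hypothetical. Because the support and the frequency bins do not depend on `theta`, the derivative of one STFT frame with respect to `theta` is simply the same transform computed with the derivative of the window.

```python
import cmath
import math


def hann(u, theta):
    """Hann-shaped window of continuous length theta at offset u from its center.

    Assumption for this sketch: the window is zero outside |u| < theta / 2.
    """
    if abs(u) >= theta / 2:
        return 0.0
    return 0.5 + 0.5 * math.cos(2 * math.pi * u / theta)


def dhann_dtheta(u, theta):
    """Analytic derivative of hann(u, theta) with respect to theta."""
    if abs(u) >= theta / 2:
        return 0.0
    return math.pi * u / theta**2 * math.sin(2 * math.pi * u / theta)


def stft_frame(x, start, support, theta, window=hann):
    """One STFT frame on a fixed support; bin centers do not depend on theta."""
    center = (support - 1) / 2
    frame = []
    for k in range(support):
        acc = 0j
        for n in range(support):
            phase = cmath.exp(-2j * math.pi * k * n / support)
            acc += x[start + n] * window(n - center, theta) * phase
        frame.append(acc)
    return frame


def energy_and_grad(x, start, support, theta):
    """Frame energy sum_k |X[k]|^2 and its derivative with respect to theta."""
    X = stft_frame(x, start, support, theta, window=hann)
    dX = stft_frame(x, start, support, theta, window=dhann_dtheta)
    energy = sum(abs(v) ** 2 for v in X)
    # d|X|^2/dtheta = 2 * Re(conj(X) * dX/dtheta)
    grad = sum(2 * (v.conjugate() * dv).real for v, dv in zip(X, dX))
    return energy, grad


# Toy signal, chosen only for illustration.
signal = [math.sin(0.3 * n) + 0.5 * math.cos(1.1 * n) for n in range(64)]
energy, grad = energy_and_grad(signal, start=8, support=32, theta=20.3)
```

The value `grad` could drive a gradient-descent update of `theta` for any loss built on the spectrogram. In an automatic differentiation framework the same derivative would be obtained without writing `dhann_dtheta` by hand, provided the window is expressed as a differentiable function of `theta`. This sketch requires `theta` to stay below `support` so that the window fits inside the frame; it does not cover the harder case in which the window length changes the position and number of bins.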
