A convolutional neural-network model of human cochlear mechanics and filter tuning for real-time applications

Paper and Code

Apr 30, 2020

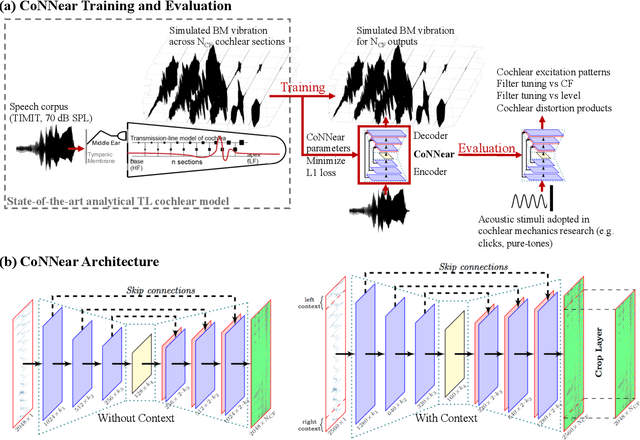

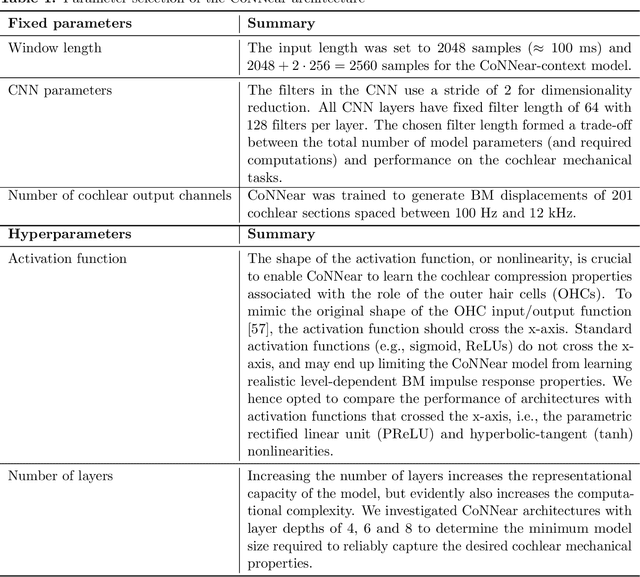

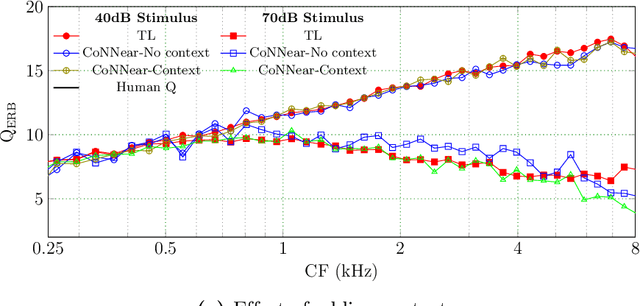

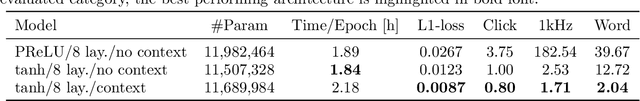

Auditory models are commonly used as feature extractors for automatic speech recognition systems or as front-ends for robotics, machine-hearing and hearing-aid applications. Although auditory models have progressed over the years to capture the biophysical and nonlinear properties of human hearing in great detail, these biophysical models are slow to compute and are consequently not used in real-time applications. To enable their uptake, we present a hybrid approach in which convolutional neural networks are combined with computational neuroscience to yield a real-time end-to-end model for human cochlear mechanics and level-dependent cochlear filter tuning (CoNNear). The CoNNear model was trained on acoustic speech material, but its performance and applicability were evaluated using (unseen) sound stimuli common in cochlear mechanics research. The CoNNear model accurately simulates human frequency selectivity and its dependence on sound intensity, which is essential for our hallmark robust speech intelligibility performance, even at negative speech-to-background noise ratios. Because its architecture is based on real-time, parallel and differentiable computations, the CoNNear model has the power to bring real-time auditory applications closer to human performance and can inspire the next generation of speech recognition, robotics and hearing-aid systems.
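To make the idea of "parallel" convolutional computation concrete, the following is a minimal sketch of a single strided 1-D convolutional layer that maps a mono waveform to several output channels. It is not the CoNNear architecture or its trained weights: the function name `conv1d`, the kernel count and length, the stride, the `tanh` nonlinearity, the random kernel values, and the sample rate are all illustrative assumptions.

```python
import math
import random


def conv1d(x, kernels, stride=1):
    """Valid-mode 1-D convolution (cross-correlation) with a bank of kernels.

    x:       list of floats (waveform samples)
    kernels: list of equal-length lists of floats, one per output channel
    Returns a list of frames; each frame holds one tanh-activated value
    per channel.
    """
    k = len(kernels[0])
    frames = []
    # Each output frame depends only on its own input window, so the
    # iterations of this loop are independent and could run in parallel.
    for start in range(0, len(x) - k + 1, stride):
        window = x[start:start + k]
        frames.append([
            math.tanh(sum(w * s for w, s in zip(kern, window)))
            for kern in kernels
        ])
    return frames


random.seed(0)
fs = 20000  # hypothetical sample rate in Hz, chosen for illustration only
tone = [math.sin(2 * math.pi * 1000 * n / fs) for n in range(2048)]

# Hypothetical, untrained kernels: 8 output channels, 64 taps each.
kernels = [[random.uniform(-0.1, 0.1) for _ in range(64)] for _ in range(8)]

out = conv1d(tone, kernels, stride=2)
print(len(out), len(out[0]))  # number of time frames, number of channels
```

Because every operation here is a weighted sum followed by a smooth nonlinearity, the output is differentiable with respect to both the kernels and the input, which is the property that lets such layers be trained by gradient descent and embedded in larger learning systems.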
