A Bi-level Nonlinear Eigenvector Algorithm for Wasserstein Discriminant Analysis


Nov 21, 2022

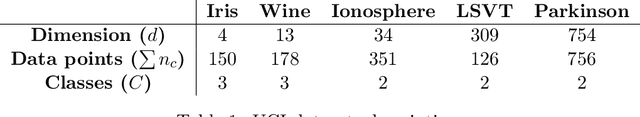

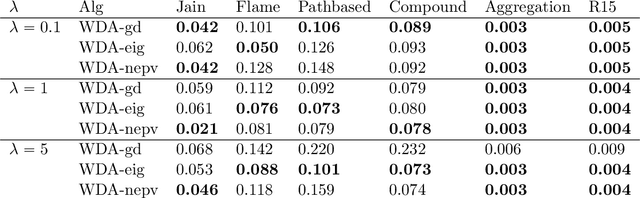

Much like classical Fisher linear discriminant analysis, Wasserstein discriminant analysis (WDA) is a supervised linear dimensionality reduction method that seeks a projection matrix that maximizes the dispersion between different data classes and minimizes the dispersion within each class. In contrast to Fisher's method, however, WDA can account for both global and local interconnections between data classes by using a regularized Wasserstein distance. WDA is formulated as a bi-level nonlinear trace ratio optimization. In this paper, we present a bi-level nonlinear eigenvector (NEPv) algorithm, called WDA-nepv. The inner kernel of WDA-nepv, which computes the optimal transport matrix of the regularized Wasserstein distance, is formulated as an NEPv, and the outer kernel, which solves the trace ratio optimization, is formulated as another NEPv. Consequently, both kernels can be computed efficiently via self-consistent-field iterations and modern solvers for linear eigenvalue problems. Compared with existing algorithms for WDA, WDA-nepv is derivative-free and surrogate-model-free. The computational efficiency of WDA-nepv and its classification accuracy in applications are demonstrated using synthetic and real-life datasets.
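To illustrate what a self-consistent-field (SCF) iteration for a trace ratio problem can look like, here is a minimal sketch in Python. It is not the WDA-nepv algorithm itself: it assumes fixed symmetric matrices `A` and `B` (with `B` positive definite), whereas in WDA both matrices depend on the projection through the optimal transport matrices. The function name `trace_ratio_scf`, its parameters, and the random test matrices are hypothetical and chosen only for illustration.

```python
import numpy as np

def trace_ratio_scf(A, B, p, tol=1e-10, max_iter=100):
    """Sketch: maximize tr(P^T A P) / tr(P^T B P) over orthonormal n-by-p P.

    Assumes A, B are fixed symmetric matrices and B is positive definite.
    Each step solves a linear symmetric eigenvalue problem for A - rho * B,
    where rho is the current trace ratio (the "self-consistent" quantity).
    """
    n = A.shape[0]
    P = np.eye(n)[:, :p]  # simple orthonormal starting guess
    rho = np.trace(P.T @ A @ P) / np.trace(P.T @ B @ P)
    for _ in range(max_iter):
        # eigh returns eigenvalues in ascending order, so the last p
        # columns span the eigenspace of the p largest eigenvalues.
        _, V = np.linalg.eigh(A - rho * B)
        P = V[:, -p:]
        rho_new = np.trace(P.T @ A @ P) / np.trace(P.T @ B @ P)
        converged = abs(rho_new - rho) <= tol * max(1.0, abs(rho_new))
        rho = rho_new
        if converged:
            break
    return P, rho

# Hypothetical test data: random symmetric A and positive definite B.
rng = np.random.default_rng(0)
X = rng.standard_normal((6, 6))
Y = rng.standard_normal((6, 6))
A = X @ X.T
B = Y @ Y.T + 6 * np.eye(6)

P, rho = trace_ratio_scf(A, B, p=2)
print("trace ratio:", rho)
```

Under these assumptions the trace ratio does not decrease from one step to the next, and each step needs only a standard symmetric eigensolver, with no gradients of the objective. A bi-level method would additionally recompute the transport matrices, and hence `A` and `B`, as the projection changes.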
