3D Human Pose Estimation with Siamese Equivariant Embedding

Paper and Code

Sep 19, 2018

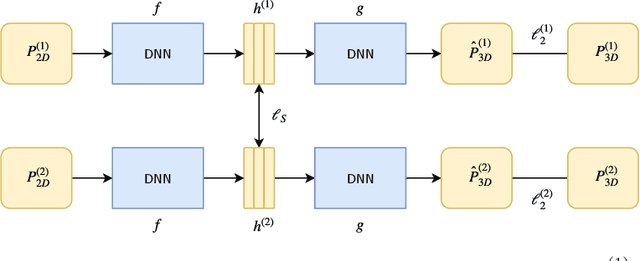

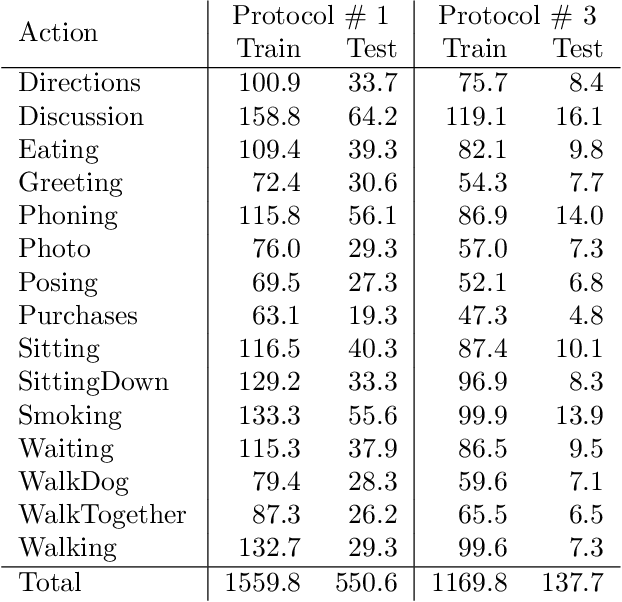

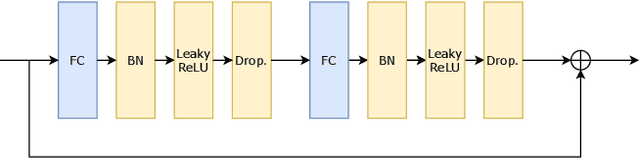

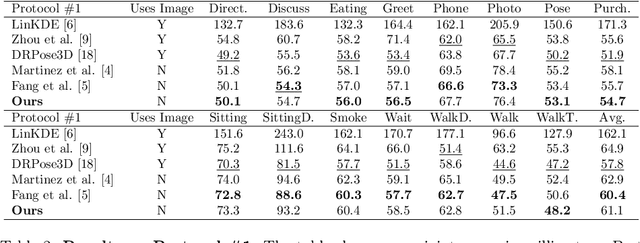

In monocular 3D human pose estimation a common setup is to first detect 2D positions and then lift the detection into 3D coordinates. Many algorithms suffer from overfitting to camera positions in the training set. We propose a siamese architecture that learns a rotation equivariant hidden representation to reduce the need for data augmentation. Our method is evaluated on multiple databases with different base networks and shows a consistent improvement of error metrics. It achieves state-of-the-art cross-camera error rate among algorithms that use estimated 2D joint coordinates only.

* 15 pages, 4 figures, submitted to Neurocomputing

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge