3D Fully Convolutional Neural Networks with Intersection Over Union Loss for Crop Mapping from Multi-Temporal Satellite Images


Feb 15, 2021

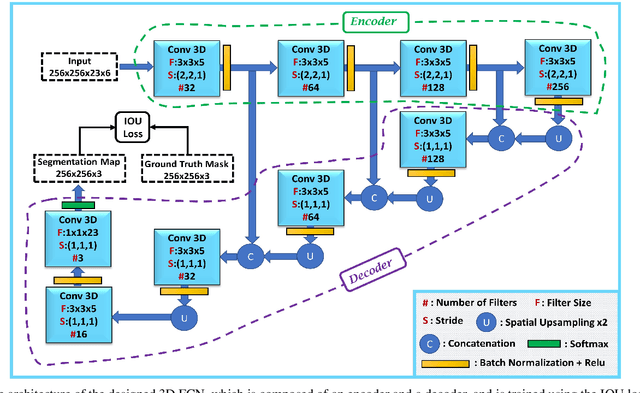

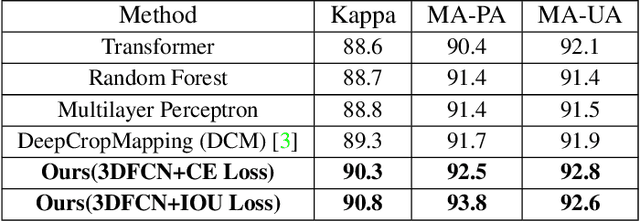

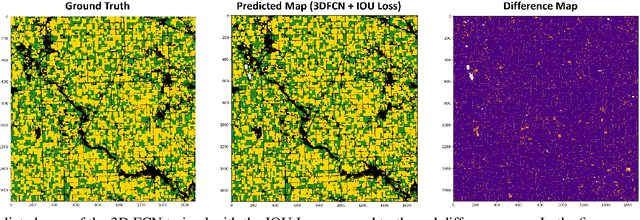

Information on cultivated crops is relevant for a large number of food security studies. Considerable scientific effort is dedicated to generating this information from remote sensing images by means of machine learning methods. Unfortunately, these methods do not account for the spatio-temporal relationships inherent in remote sensing images. In our paper, we explore the capability of a 3D Fully Convolutional Neural Network (FCN) to map crop types from multi-temporal images. In addition, we propose the Intersection Over Union (IOU) loss function to increase the overlap between the predicted classes and the ground truth data. The proposed method was applied to identify soybean and corn in a study area situated in the US Corn Belt using multi-temporal Landsat images. The study shows that our method outperforms related methods, obtaining a Kappa coefficient of 90.8%. We conclude that the IOU loss function is a superior choice for learning individual crop types.
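To make the idea of an IOU loss concrete, the following is a minimal sketch of one common "soft" (differentiable) formulation, written with NumPy. The abstract does not give the paper's exact definition, so the function name `soft_iou_loss`, the smoothing constant `eps`, and the per-class averaging are assumptions made for illustration only, not the authors' implementation.

```python
import numpy as np

def soft_iou_loss(probs, onehot, eps=1e-7):
    """Soft IoU (Jaccard) loss, averaged over classes.

    probs  : predicted class probabilities, shape (..., num_classes)
    onehot : one-hot ground truth, same shape as probs
    eps    : hypothetical smoothing term to avoid division by zero
    """
    probs = np.asarray(probs, dtype=np.float64)
    onehot = np.asarray(onehot, dtype=np.float64)

    # Sum over every axis except the last (class) axis.
    axes = tuple(range(probs.ndim - 1))

    # Soft intersection and union, computed per class.
    intersection = np.sum(probs * onehot, axis=axes)
    union = np.sum(probs + onehot - probs * onehot, axis=axes)

    iou = (intersection + eps) / (union + eps)

    # Loss is low when the predicted classes overlap the ground truth.
    return 1.0 - np.mean(iou)


# Toy example: four pixels, three classes (e.g. corn, soybean, other).
labels = np.array([0, 1, 1, 2])
truth = np.eye(3)[labels]               # one-hot ground truth
perfect = soft_iou_loss(truth, truth)   # close to 0 for a perfect match
```

Minimizing this quantity pushes the predicted class maps to overlap the reference labels, which is the motivation the abstract gives for the loss. In a real training setup the same expression would be written in an automatic-differentiation framework so that gradients can flow through it.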
