Zahra Anvari

A Survey on Deep learning based Document Image Enhancement

Jan 03, 2022

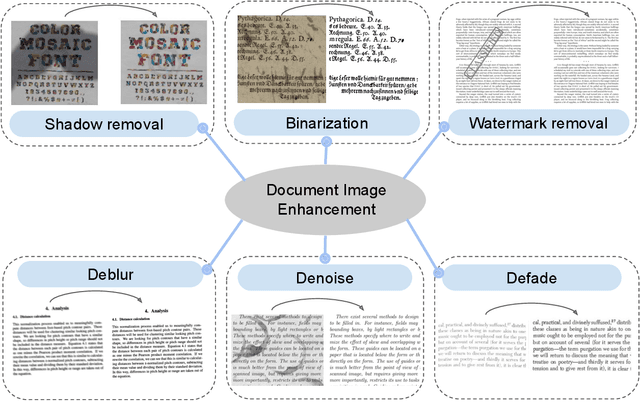

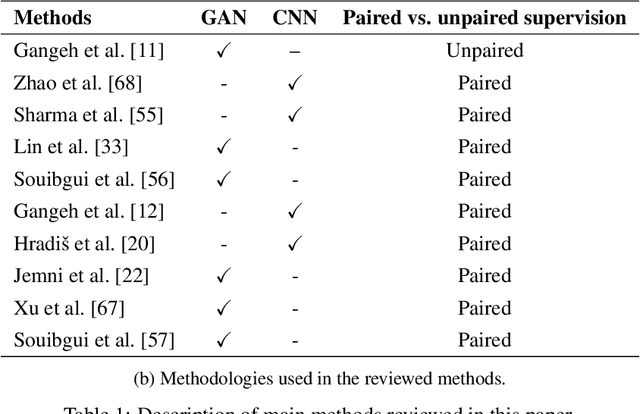

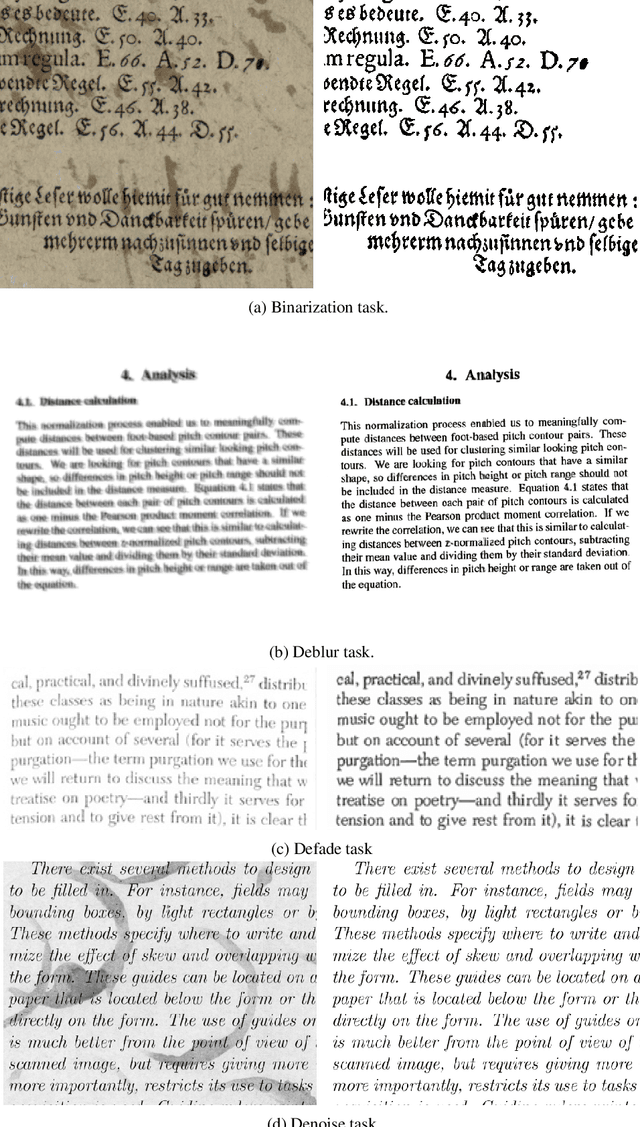

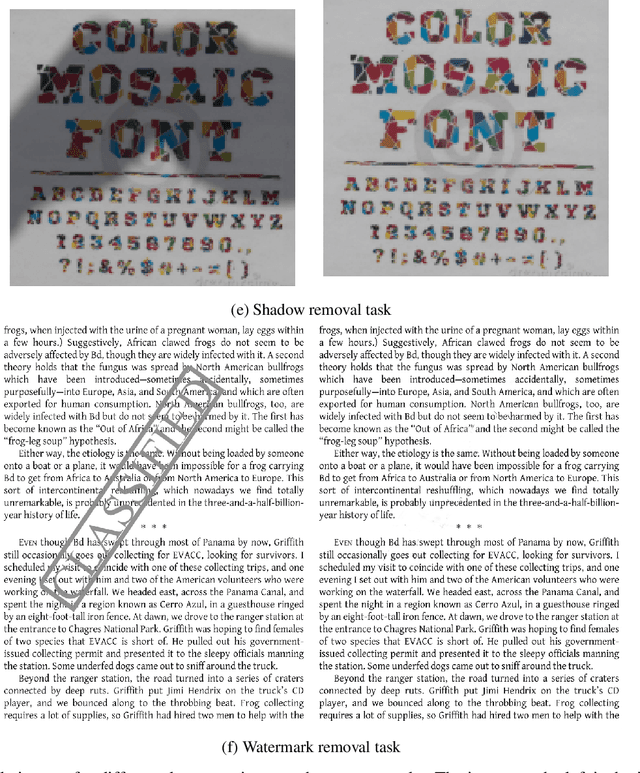

Abstract: Digitized documents such as scientific articles, tax forms, invoices, contract papers, and historical texts are widely used nowadays. These document images can be degraded or damaged for various reasons, including poor lighting conditions, shadows, distortions such as noise and blur, aging, ink stains, bleed-through, watermarks, and stamps. Document image enhancement plays a crucial role as a pre-processing step in many automated document analysis and recognition tasks, such as character recognition. With recent advances in deep learning, many methods have been proposed to enhance the quality of these document images. In this paper, we review deep learning-based methods, datasets, and metrics for six main document image enhancement tasks: binarization, deblurring, denoising, defading, watermark removal, and shadow removal. We summarize recent works for each task and discuss their features, challenges, and limitations. We also introduce several document image enhancement tasks that have received little to no attention, including over- and under-exposure correction, super-resolution, and bleed-through removal. Finally, we identify several promising directions and opportunities for future research.

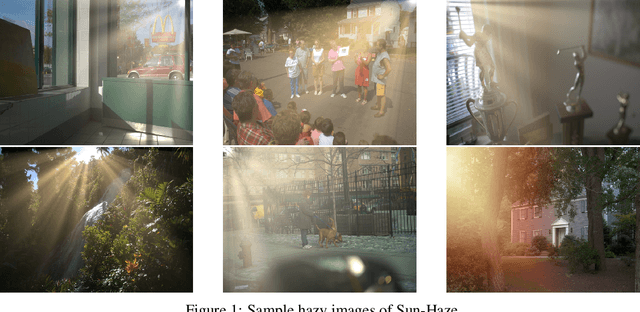

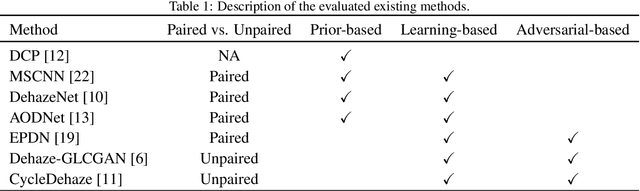

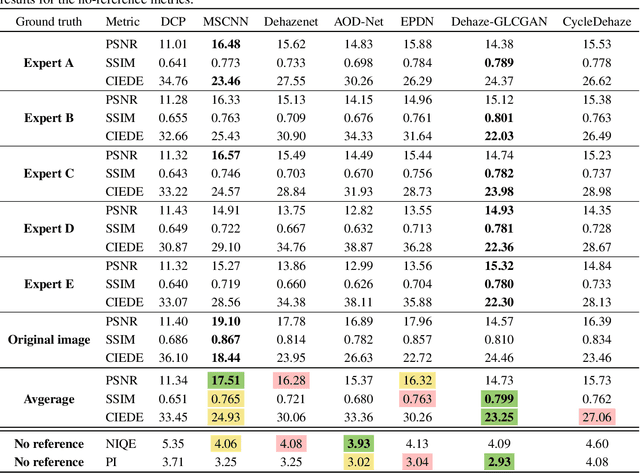

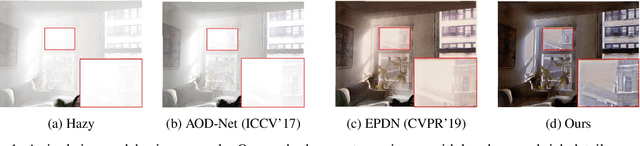

Evaluating Single Image Dehazing Methods Under Realistic Sunlight Haze

Aug 31, 2020

Abstract: Haze can drastically degrade visibility and image quality, thereby degrading the performance of computer vision tasks such as object detection. Single image dehazing is a challenging and ill-posed problem, despite being widely studied. Most existing methods assume that haze is uniformly (homogeneously) distributed and has a single color, i.e., a grayish white similar to smoke, whereas in reality haze can be distributed non-uniformly, with different patterns and colors. In this paper, we focus on haze created by sunlight, as it is one of the most prevalent types of haze in the wild. Sunlight can generate non-uniformly distributed haze with drastic density changes due to sun rays, as well as a spectrum of haze colors due to changes in sunlight color over the course of the day. This presents a new challenge to image dehazing methods, and it must be addressed for these methods to be practical. To quantify the challenge and assess the performance of these methods, we present a sunlight haze benchmark dataset, Sun-Haze, containing 107 hazy images with different types of sunlight-generated haze of varying intensity and color. We evaluate a representative set of state-of-the-art image dehazing methods on this benchmark in terms of standard metrics such as PSNR, SSIM, CIEDE2000, PI, and NIQE. The evaluation uncovers the limitations of current methods and calls into question their underlying assumptions as well as their practicality.
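
Of the full-reference metrics named in this abstract, PSNR is the simplest to state: it compares a restored image with its reference through the mean squared error (MSE), as PSNR = 10·log10(MAX² / MSE) dB. What follows is a minimal sketch of that definition, not the evaluation code used for Sun-Haze. It assumes both images are flattened into equal-length sequences of 8-bit intensities (so MAX = 255), and the function name `psnr` is our own choice.

```python
import math
from typing import Sequence


def psnr(reference: Sequence[float], estimate: Sequence[float], max_value: float = 255.0) -> float:
    """PSNR in dB between two images given as flat, equal-length pixel sequences.

    Assumes 8-bit data by default (max_value = 255); pass 1.0 for images scaled to [0, 1].
    """
    if len(reference) != len(estimate) or len(reference) == 0:
        raise ValueError("images must be non-empty and the same size")
    # Mean squared error over all pixels.
    mse = sum((r - e) ** 2 for r, e in zip(reference, estimate)) / len(reference)
    if mse == 0:
        return math.inf  # identical images: PSNR is unbounded
    return 10.0 * math.log10(max_value ** 2 / mse)


# A uniform error of one intensity level on 8-bit data.
print(round(psnr([0, 0, 0, 0], [1, 1, 1, 1]), 2))  # 48.13
```

Higher values mean the estimate is closer to the reference. Because PSNR depends only on pixel-wise error, it is usually reported alongside structural (SSIM) and perceptual or no-reference (PI, NIQE) scores, as in the evaluation described above.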

Dehaze-GLCGAN: Unpaired Single Image De-hazing via Adversarial Training

Aug 15, 2020

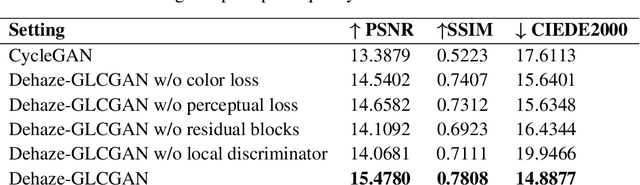

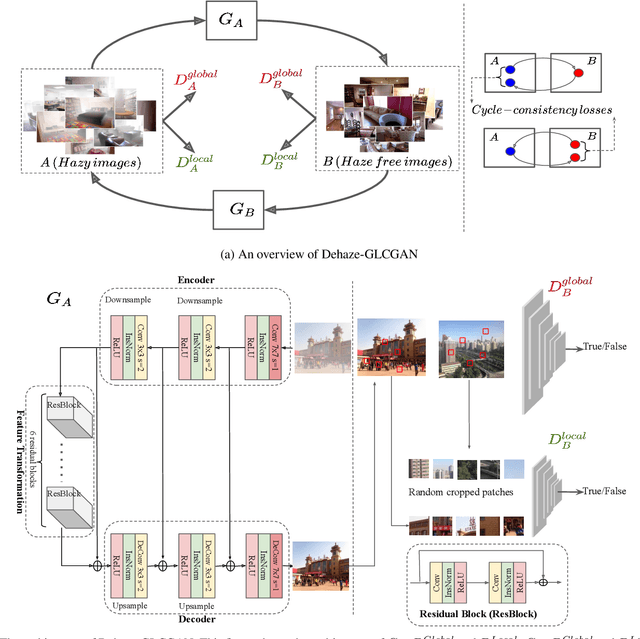

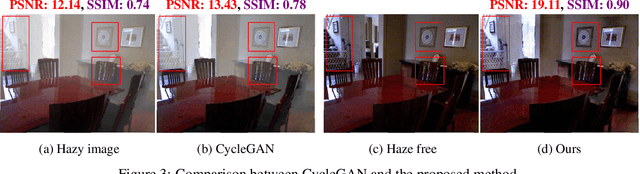

Abstract: Single image de-hazing is a challenging problem that is far from solved. Most current solutions require paired image datasets that include both hazy images and their corresponding haze-free ground-truth images. In reality, however, lighting conditions and other factors can produce a range of haze-free images that could serve as ground truth for a given hazy image, and a single ground-truth image cannot capture that range. This limits the scalability and practicality of paired image datasets in real-world applications. In this paper, we focus on unpaired single image de-hazing and rely on neither ground-truth images nor a physical scattering model. We reduce image de-hazing to an image-to-image translation problem and propose a dehazing Global-Local Cycle-consistent Generative Adversarial Network (Dehaze-GLCGAN). The generator network of Dehaze-GLCGAN combines an encoder-decoder architecture with residual blocks to better recover the haze-free scene. We also employ a global-local discriminator structure to deal with spatially varying haze. Through an ablation study, we demonstrate the contribution of different factors to the performance of the proposed network. Our extensive experiments on three benchmark datasets show that our network outperforms previous work in terms of PSNR and SSIM while being trained on a smaller amount of data than other methods.
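
The cycle-consistency idea that makes unpaired training possible can be illustrated with the generic CycleGAN term: translating a hazy image to the clear domain and back (and a clear image to the hazy domain and back) should reproduce the input, measured with an L1 penalty. The abstract does not give Dehaze-GLCGAN's exact loss or how it is weighted against the adversarial terms, so this is only a sketch of the standard formulation. The names `dehaze` and `rehaze` are hypothetical stand-ins for the two generator networks, and images are simplified to flat lists of floats.

```python
from typing import Callable, List, Sequence

# Hypothetical representation: an image flattened to a list of pixel intensities.
Image = List[float]
# Hypothetical stand-in for a generator network mapping one domain to the other.
Generator = Callable[[Sequence[float]], Image]


def mean_l1(a: Sequence[float], b: Sequence[float]) -> float:
    """Mean absolute difference between two equally sized images."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def cycle_consistency_loss(
    hazy_batch: Sequence[Image],
    clear_batch: Sequence[Image],
    dehaze: Generator,
    rehaze: Generator,
) -> float:
    """Generic CycleGAN-style cycle loss (not necessarily Dehaze-GLCGAN's exact form)."""
    # Hazy -> clear -> hazy should reproduce the original hazy image.
    forward = sum(mean_l1(rehaze(dehaze(x)), x) for x in hazy_batch) / len(hazy_batch)
    # Clear -> hazy -> clear should reproduce the original clear image.
    backward = sum(mean_l1(dehaze(rehaze(y)), y) for y in clear_batch) / len(clear_batch)
    return forward + backward


def toy_dehaze(img: Sequence[float]) -> Image:
    """Toy 'generator' that darkens every pixel by 10 levels."""
    return [p - 10.0 for p in img]


def toy_rehaze(img: Sequence[float]) -> Image:
    """Toy 'generator' that exactly undoes toy_dehaze."""
    return [p + 10.0 for p in img]


print(cycle_consistency_loss([[100.0, 120.0]], [[30.0, 40.0]], toy_dehaze, toy_rehaze))  # 0.0
```

With toy generators that exactly invert each other the loss is 0; if the re-hazing generator added only 5 levels, each cycle would be off by 5 per pixel and the loss would be 10. In the CycleGAN framework this term is minimized jointly with the adversarial losses, which in Dehaze-GLCGAN come from its global-local discriminators.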
