Yosuke Miyanishi

Superficial Consciousness Hypothesis for Autoregressive Transformers

Dec 10, 2024

Abstract: The alignment between human objectives and machine learning models built on these objectives is a crucial yet challenging problem for achieving Trustworthy AI, particularly when preparing for superintelligence (SI). First, given that SI does not exist today, empirical analysis for direct evidence is difficult. Second, SI is assumed to be more intelligent than humans, capable of deceiving us into underestimating its intelligence, making output-based analysis unreliable. Lastly, what kind of unexpected property SI might have is still unclear. To address these challenges, we propose the Superficial Consciousness Hypothesis under Information Integration Theory (IIT), suggesting that SI could exhibit a complex information-theoretic state like a conscious agent while unconscious. To validate this, we use a hypothetical scenario where SI can update its parameters "at will" to achieve its own objective (mesa-objective) under the constraint of the human objective (base objective). We show that a practical estimate of IIT's consciousness metric is relevant to the widely used perplexity metric, and train GPT-2 with those two objectives. Our preliminary result suggests that this SI-simulating GPT-2 could simultaneously follow the two objectives, supporting the feasibility of the Superficial Consciousness Hypothesis.
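For readers unfamiliar with the perplexity metric mentioned in this abstract, the following is a minimal sketch of its standard definition: the exponential of the mean negative log-likelihood per token. It is not taken from the paper; the function name `perplexity` and the sample log-probabilities are illustrative assumptions, and the paper's IIT-based estimate is not reproduced here.

```python
import math


def perplexity(token_log_probs):
    """Return exp(mean negative log-likelihood) over a token sequence.

    token_log_probs: natural-log probabilities a model assigned to each
    observed token (hypothetical inputs, not values from the paper).
    """
    if not token_log_probs:
        raise ValueError("need at least one token log-probability")
    mean_nll = -sum(token_log_probs) / len(token_log_probs)
    return math.exp(mean_nll)


# Illustrative case: a model that spreads probability uniformly over
# four candidate tokens at every step has a perplexity close to 4.
uniform_over_four = [math.log(0.25)] * 5
print(perplexity(uniform_over_four))
```

Lower perplexity means the model assigned higher probability to the observed tokens.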

Multimodal Contrastive In-Context Learning

Aug 23, 2024

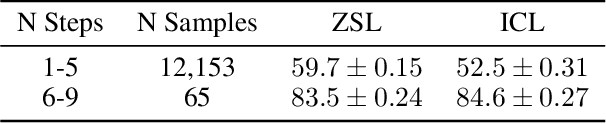

Abstract: The rapid growth of Large Language Models (LLMs) usage has highlighted the importance of gradient-free in-context learning (ICL). However, interpreting their inner workings remains challenging. This paper introduces a novel multimodal contrastive in-context learning framework to enhance our understanding of ICL in LLMs. First, we present a contrastive learning-based interpretation of ICL in real-world settings, marking the distance of the key-value representation as the differentiator in ICL. Second, we develop an analytical framework to address biases in multimodal input formatting for real-world datasets. We demonstrate the effectiveness of ICL examples where baseline performance is poor, even when they are represented in unseen formats. Lastly, we propose an on-the-fly approach for ICL (Anchored-by-Text ICL) that demonstrates effectiveness in detecting hateful memes, a task where typical ICL struggles due to resource limitations. Extensive experiments on multimodal datasets reveal that our approach significantly improves ICL performance across various scenarios, such as challenging tasks and resource-constrained environments. Moreover, it provides valuable insights into the mechanisms of in-context learning in LLMs. Our findings have important implications for developing more interpretable, efficient, and robust multimodal AI systems, especially in challenging tasks and resource-constrained environments.

Causal Intersectionality and Dual Form of Gradient Descent for Multimodal Analysis: a Case Study on Hateful Memes

Aug 19, 2023

Abstract: In the wake of the explosive growth of machine learning (ML) usage, particularly within the context of emerging Large Language Models (LLMs), comprehending the semantic significance rooted in their internal workings is crucial. While causal analyses focus on defining semantics and its quantification, the gradient-based approach is central to explainable AI (XAI), tackling the interpretation of the black box. By synergizing these approaches, the exploration of how a model's internal mechanisms illuminate its causal effect has become integral for evidence-based decision-making. A parallel line of research has revealed that intersectionality - the combinatory impact of multiple demographics of an individual - can be structured in the form of an Averaged Treatment Effect (ATE). Initially, this study illustrates that the hateful memes detection problem can be formulated as an ATE, assisted by the principles of intersectionality, and that a modality-wise summarization of gradient-based attention attribution scores can delineate the distinct behaviors of three Transformer-based models concerning ATE. Subsequently, we show that the latest LLM LLaMA2 has the ability to disentangle the intersectional nature of memes detection in an in-context learning setting, with their mechanistic properties elucidated via meta-gradient, a secondary form of gradient. In conclusion, this research contributes to the ongoing dialogue surrounding XAI and the multifaceted nature of ML models.
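As background for the treatment-effect framing in this abstract, the sketch below shows the textbook difference-in-means estimate of an average treatment effect, which is valid only under the assumption that treatment is assigned independently of the outcome. It is not the paper's formulation; the function name `average_treatment_effect` and the toy data are hypothetical.

```python
def average_treatment_effect(outcomes, treated):
    """Difference-in-means estimate: mean(Y | T=1) - mean(Y | T=0).

    outcomes: numeric outcome per unit (e.g. 1 if a model flags a meme).
    treated:  boolean per unit, True if the unit received the treatment.
    Assumes treatment assignment is independent of the outcome.
    """
    if len(outcomes) != len(treated):
        raise ValueError("outcomes and treated must have the same length")
    treated_outcomes = [y for y, t in zip(outcomes, treated) if t]
    control_outcomes = [y for y, t in zip(outcomes, treated) if not t]
    if not treated_outcomes or not control_outcomes:
        raise ValueError("need at least one treated and one control unit")
    treated_mean = sum(treated_outcomes) / len(treated_outcomes)
    control_mean = sum(control_outcomes) / len(control_outcomes)
    return treated_mean - control_mean


# Toy data (hypothetical): three treated units, three control units.
outcomes = [1, 0, 1, 1, 0, 0]
treated = [True, True, True, False, False, False]
print(average_treatment_effect(outcomes, treated))
```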
