Xiyao Qu

Robust Bayesian optimization with reinforcement learned acquisition functions

Oct 02, 2022

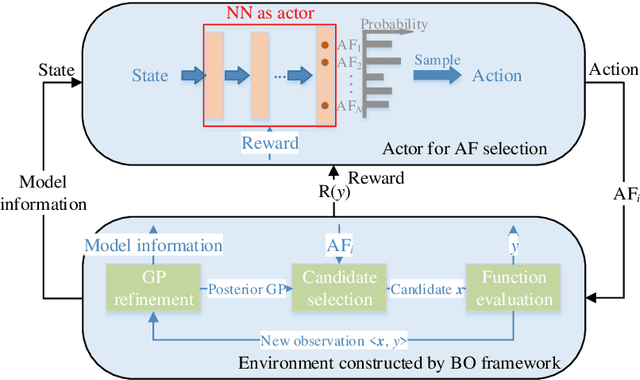

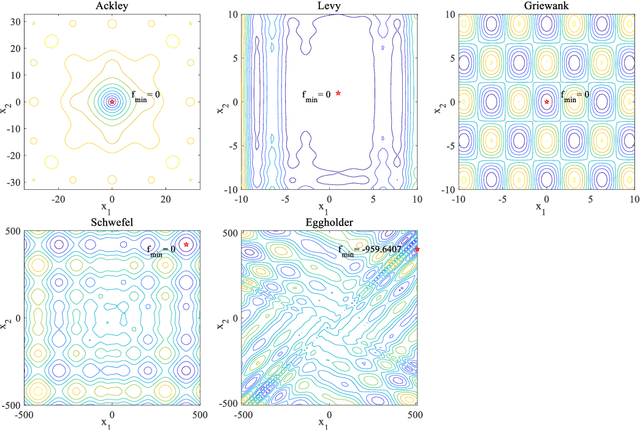

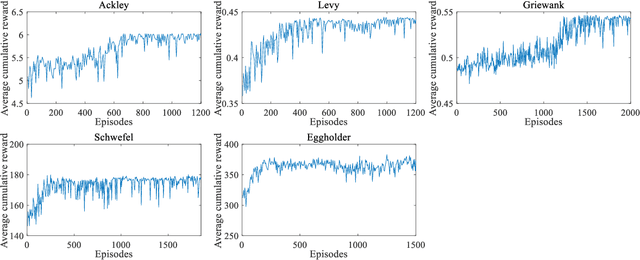

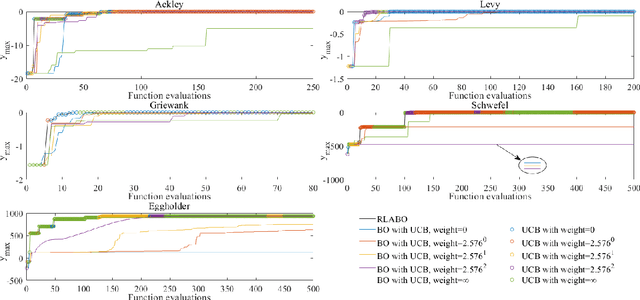

Abstract: In Bayesian optimization (BO) for expensive black-box optimization tasks, the acquisition function (AF) guides sequential sampling and plays a pivotal role in efficient convergence to better optima. Prevailing AFs usually rely on manually specified, experience-based preferences for exploration or exploitation, which risks wasted computation or entrapment in local optima and subsequent re-optimization. To address this problem, the idea of data-driven AF selection is proposed; the sequential AF selection task is formalized as a Markov decision process (MDP) and solved with reinforcement learning (RL). An appropriate selection policy for AFs is learned from superior BO trajectories to balance exploration and exploitation in real time; the resulting method is called reinforcement-learning-assisted Bayesian optimization (RLABO). Competitive and robust BO evaluations on five benchmark problems demonstrate that RL recognizes the implicit AF selection pattern, and suggest the proposal's potential practicality for intelligent AF selection and efficient optimization in expensive black-box problems.
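To make the MDP framing of AF selection concrete, the following is a minimal sketch and not the RLABO method itself. The abstract does not specify the state, reward, AF portfolio, or RL algorithm, so everything here is an assumption chosen for illustration: the state is the iteration index, the actions are three common AFs (expected improvement, probability of improvement, lower confidence bound), the reward is the improvement in the best observed value, and the learner is tabular Q-learning trained online rather than from superior BO trajectories. The names `run_episode`, `AFS`, and `q_table` are hypothetical.

```python
import math
import numpy as np

def rbf(a, b, length=0.2):
    # Squared-exponential kernel on 1-D inputs.
    d = a[:, None] - b[None, :]
    return np.exp(-0.5 * (d / length) ** 2)

def gp_posterior(x_obs, y_obs, x_query, noise=1e-4):
    # Zero-mean GP posterior mean and standard deviation (toy surrogate).
    K = rbf(x_obs, x_obs) + noise * np.eye(len(x_obs))
    Ks = rbf(x_obs, x_query)
    mu = Ks.T @ np.linalg.solve(K, y_obs)
    var = 1.0 - np.sum(Ks * np.linalg.solve(K, Ks), axis=0)
    return mu, np.sqrt(np.clip(var, 1e-12, None))

# Standard normal CDF and PDF without external dependencies.
_cdf = np.vectorize(lambda z: 0.5 * (1.0 + math.erf(z / math.sqrt(2.0))))

def _pdf(z):
    return np.exp(-0.5 * z ** 2) / math.sqrt(2.0 * math.pi)

# Candidate AFs for minimization; a larger score marks a more attractive point.
def ei(mu, sd, best):
    z = (best - mu) / sd
    return (best - mu) * _cdf(z) + sd * _pdf(z)

def pi(mu, sd, best):
    return _cdf((best - mu) / sd)

def lcb(mu, sd, best, kappa=2.0):
    return -(mu - kappa * sd)

AFS = [ei, pi, lcb]  # assumed action set, not taken from the paper

def run_episode(f, q_table, rng, n_init=3, eps=0.2, alpha=0.5, gamma=0.9):
    """One BO run on [0, 1]; updates q_table in place and returns the best value."""
    n_iter = q_table.shape[0]
    grid = np.linspace(0.0, 1.0, 201)
    x = rng.uniform(0.0, 1.0, n_init)
    y = f(x)
    for t in range(n_iter):
        # State: iteration index t. Action: index of the AF (epsilon-greedy).
        if rng.random() < eps:
            a = int(rng.integers(len(AFS)))
        else:
            a = int(np.argmax(q_table[t]))
        mu, sd = gp_posterior(x, y, grid)
        x_next = grid[np.argmax(AFS[a](mu, sd, y.min()))]
        y_next = f(np.array([x_next]))[0]
        # Reward: how much the best observed value improved (assumed design).
        reward = max(y.min() - y_next, 0.0)
        x, y = np.append(x, x_next), np.append(y, y_next)
        # One-step Q-learning update; no future value after the last step.
        future = q_table[t + 1].max() if t + 1 < n_iter else 0.0
        q_table[t, a] += alpha * (reward + gamma * future - q_table[t, a])
    return float(y.min())
```

A usage sketch under the same assumptions: create `q_table = np.zeros((10, len(AFS)))` and `rng = np.random.default_rng(0)`, then call `run_episode` repeatedly on a cheap toy objective such as `lambda x: (x - 0.3) ** 2`. The greedy action in each row of `q_table` is then the AF the learned policy would pick at that iteration. A richer state (for example, surrogate-model statistics) would be needed for the policy to react to the optimization landscape rather than only to the iteration count.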
