Weikun Wu

SK-Net: Deep Learning on Point Cloud via End-to-end Discovery of Spatial Keypoints

Mar 31, 2020

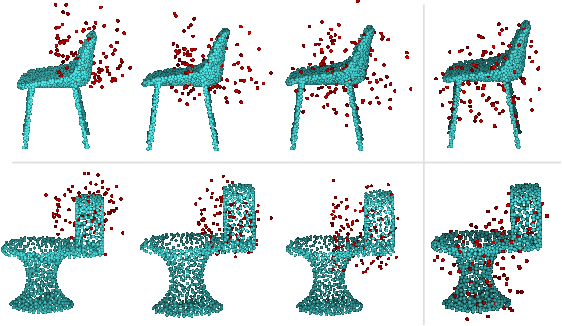

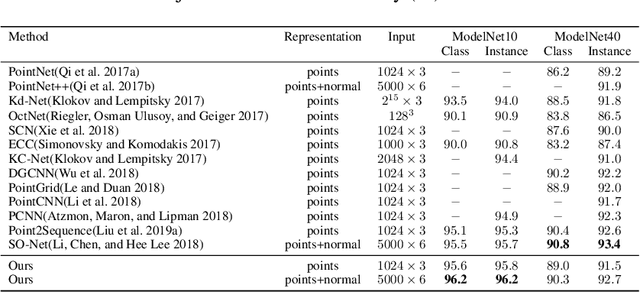

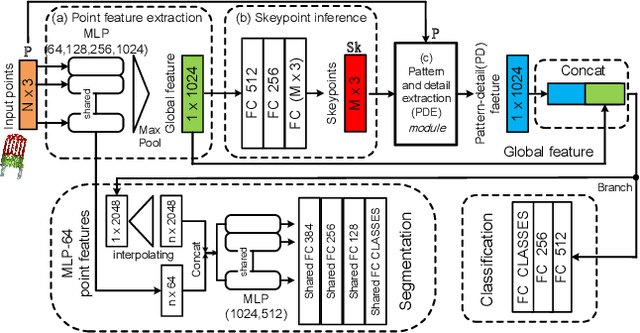

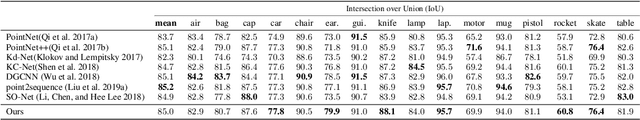

Abstract: Since PointNet was proposed, deep learning on point clouds has been a focus of intense 3D research. However, existing point-based methods usually do not adequately extract the local features and the spatial pattern of a point cloud for further shape understanding. This paper presents an end-to-end framework, SK-Net, that jointly optimizes the inference of spatial keypoints with the learning of a feature representation of a point cloud for a specific point cloud task. One key process of SK-Net is the generation of spatial keypoints (Skeypoints). It is jointly driven by two proposed regulating losses and a task objective function, without requiring Skeypoint location annotations or proposals. Specifically, our Skeypoints are not sensitive to location consistency but are acutely aware of shape. Another key process of SK-Net is the extraction of the local structure of Skeypoints (detail feature) and the local spatial pattern of normalized Skeypoints (pattern feature). This process generates a comprehensive representation, the pattern-detail (PD) feature, which comprises the local detail information of a point cloud and reveals its spatial pattern through part district reconstruction on normalized Skeypoints. Consequently, our network is prompted to effectively understand the correlation between different regions of a point cloud and to integrate its contextual information. On point cloud tasks such as classification and segmentation, our proposed method performs better than or comparably with state-of-the-art approaches. We also present an ablation study to demonstrate the advantages of SK-Net.
