Uthman Baroudi

Intelligent Road Anomaly Detection with Real-time Notification System for Enhanced Road Safety

May 13, 2025

Abstract:This study aims to improve transportation safety, especially traffic safety. Road damage anomalies such as potholes and cracks have emerged as a significant and recurring cause of accidents. To tackle this problem and improve road safety, a comprehensive system has been developed to detect potholes and cracks (e.g., alligator, transverse, longitudinal), classify their sizes, and transmit this data to the cloud for appropriate action by authorities. The system also broadcasts warning signals to nearby vehicles if a severe anomaly is detected on the road. Moreover, the system can count road anomalies in real time. It is emulated using a Raspberry Pi, a camera module, a deep learning model, a laptop, and a cloud service. Deploying this solution aims to proactively enhance road safety by notifying relevant authorities and drivers about the presence of potholes and cracks so that they can take action, thereby mitigating potential accidents arising from this prevalent road hazard and leading to safer road conditions for the whole community.

Enhancing Pothole Detection and Characterization: Integrated Segmentation and Depth Estimation in Road Anomaly Systems

Apr 18, 2025

Abstract:Road anomaly detection plays a crucial role in road maintenance and in enhancing the safety of both drivers and vehicles. Recent machine learning approaches for road anomaly detection have overcome the tedious and time-consuming process of manual analysis and anomaly counting; however, they often fall short in providing a complete characterization of road potholes. In this paper, we leverage transfer learning by adopting a pre-trained YOLOv8-seg model for the automatic characterization of potholes using digital images captured from a dashboard-mounted camera. Our work includes the creation of a novel dataset, comprising both images and their corresponding depth maps, collected from diverse road environments in Al-Khobar city and the KFUPM campus in Saudi Arabia. Our approach performs pothole detection and segmentation to precisely localize potholes and calculate their area. Subsequently, the segmented image is merged with its depth map to extract detailed depth information about the potholes. This integration of segmentation and depth data offers a more comprehensive characterization compared to previous deep learning-based road anomaly detection systems. Overall, this method not only has the potential to significantly enhance autonomous vehicle navigation by improving the detection and characterization of road hazards but also assists road maintenance authorities in responding more effectively to road damage.
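The step of merging a segmentation mask with its depth map can be illustrated with a minimal sketch. It is not the authors' implementation: the function name `characterize_pothole`, the `pixel_area_cm2` scale factor, and the choice of the median depth of non-pothole pixels as the road-surface reference are all assumptions made for illustration. The mask is assumed to be a boolean array already aligned pixel-for-pixel with the depth map.

```python
import numpy as np


def characterize_pothole(mask, depth_map, pixel_area_cm2=1.0):
    """Combine a binary pothole mask with an aligned depth map.

    Hypothetical helper: `pixel_area_cm2` (ground area covered by one
    pixel) and the median-based road reference are assumptions, not
    details taken from the abstract.
    """
    mask = np.asarray(mask, dtype=bool)
    depth_map = np.asarray(depth_map, dtype=float)
    if mask.shape != depth_map.shape:
        raise ValueError("mask and depth_map must have the same shape")
    if not mask.any():
        # No pothole pixels were segmented in this image.
        return {"area_cm2": 0.0, "max_depth": 0.0, "mean_depth": 0.0}
    if mask.all():
        raise ValueError("need some non-pothole pixels as a road reference")

    # Assumed reference: the typical depth of the surrounding road surface.
    road_level = np.median(depth_map[~mask])

    # Depth of each pothole pixel below the road surface, clipped at zero.
    relative = np.clip(depth_map[mask] - road_level, 0.0, None)

    return {
        "area_cm2": float(mask.sum() * pixel_area_cm2),
        "max_depth": float(relative.max()),
        "mean_depth": float(relative.mean()),
    }
```

In practice the mask would come from the segmentation model's output and the scale factor from camera calibration; both are outside the scope of this sketch.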

Cloud-Based Autonomous Indoor Navigation: A Case Study

Feb 21, 2019

Abstract:In this case study, we design, integrate, and implement a cloud-enabled autonomous robotic navigation system. The system has the following features: map generation and robot coordination via a cloud service, and video streaming to allow online monitoring and control in case of emergency. The system has been tested by generating a map of a long corridor in two modes: manual and autonomous. The autonomous mode produced a more accurate map. In addition, the field experiments confirm the benefit of offloading the heavy computation to the cloud, which significantly shortens the time required to build the map.

A Framework for Autonomous Robot Deployment with Perfect Demand Satisfaction using Virtual Forces

Feb 08, 2019

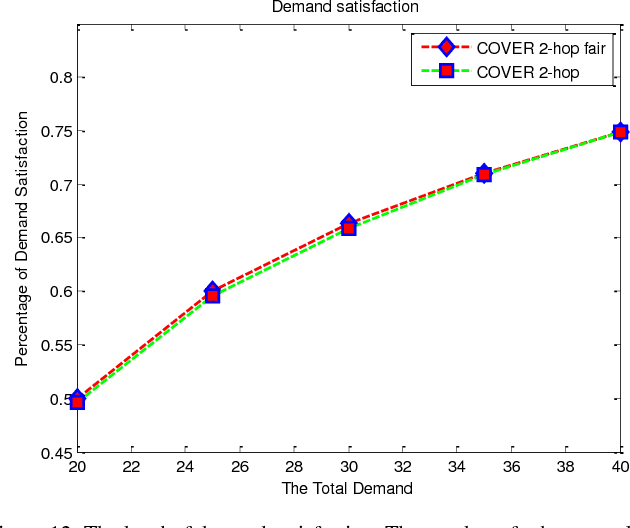

Abstract:In many applications, autonomous robot deployment is preferable, and sometimes it is the only affordable solution. To address this issue, virtual force (VF) is one of the prominent approaches to performing multirobot deployment autonomously. However, most of the existing VF-based approaches consider only a uniform deployment to maximize the covered area while ignoring the criticality of specific locations during the deployment process. To overcome these limitations, we present a framework for autonomously deploying robots or vehicles using virtual force. The framework is composed of two stages. In the first stage, a two-hop Cooperative Virtual Force based Robots Deployment (Two-hop COVER) is employed, where a cooperative relation between robots and neighboring landmarks is established to satisfy mission requirements. The second stage complements the first stage and ensures perfect demand satisfaction by utilizing the Trace Fingerprint technique, which collects traces while each robot traverses the deployment area. Finally, a fairness-aware version of Two-hop COVER is presented to consider scenarios where the mission requirements are greater than the available resources (i.e., robots). We evaluate our framework via extensive simulations. The results demonstrate outstanding performance compared to contemporary approaches in terms of total travelled distance, total exchanged messages, total deployment time, and Jain fairness index.
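For readers unfamiliar with the last metric, the Jain fairness index of n non-negative values x_1..x_n is (sum of x_i)^2 / (n * sum of x_i^2); it equals 1 when all values are equal and 1/n when a single value receives everything. The sketch below computes it. The abstract does not say which quantity the index is applied to, so the per-location satisfaction ratios in the usage note are an assumption, and the function name `jain_fairness_index` is hypothetical.

```python
def jain_fairness_index(values):
    """Return Jain's fairness index for a sequence of non-negative values."""
    xs = [float(v) for v in values]
    if not xs:
        raise ValueError("values must not be empty")
    if any(x < 0 for x in xs):
        raise ValueError("values must be non-negative")

    total = sum(xs)
    sum_of_squares = sum(x * x for x in xs)
    if sum_of_squares == 0.0:
        # Convention chosen for this sketch: an all-zero allocation
        # treats every participant identically, so report 1.0.
        return 1.0
    return (total * total) / (len(xs) * sum_of_squares)
```

As an assumed usage, if three locations receive 2 of 4, 1 of 2, and 3 of 6 requested robots, every satisfaction ratio is 0.5 and `jain_fairness_index([0.5, 0.5, 0.5])` evaluates to 1.0, whereas serving only one of four locations gives 0.25.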