Troy McMahon

A Survey on the Integration of Machine Learning with Sampling-based Motion Planning

Nov 15, 2022

Abstract: Sampling-based methods are widely adopted solutions for robot motion planning. The methods are straightforward to implement and effective in practice for many robotic systems. It is often possible to prove that they have desirable properties, such as probabilistic completeness and asymptotic optimality. Nevertheless, they still face challenges as the complexity of the underlying planning problem increases, especially under tight computation time constraints, which impact the quality of returned solutions, or when given inaccurate models. This has motivated the use of machine learning to improve the computational efficiency and applicability of Sampling-Based Motion Planners (SBMPs). This survey reviews such integrative efforts and aims to provide a classification of the alternative directions that have been explored in the literature. It first discusses how learning has been used to enhance key components of SBMPs, such as node sampling, collision detection, distance or nearest neighbor computation, local planning, and termination conditions. Then, it highlights planners that use learning to adaptively select between different implementations of such primitives in response to the underlying problem's features. It also covers emerging methods that build complete machine learning pipelines reflecting the traditional structure of SBMPs, and it discusses how machine learning has been used to provide data-driven models of robots, which can then be used by an SBMP. Finally, it provides a comparative discussion of the advantages and disadvantages of the approaches covered, along with insights on possible future directions of research. An online version of this survey can be found at: https://prx-kinodynamic.github.io/

* First two authors contributed equally

Data-Efficient Learning of High-Quality Controls for Kinodynamic Planning used in Vehicular Navigation

Jan 06, 2022

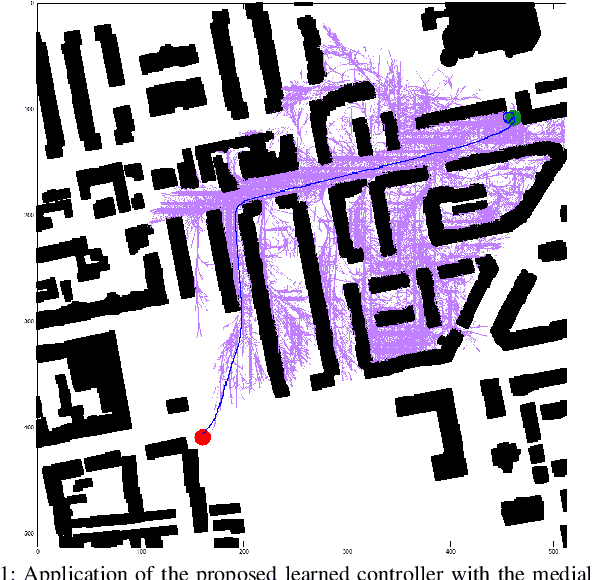

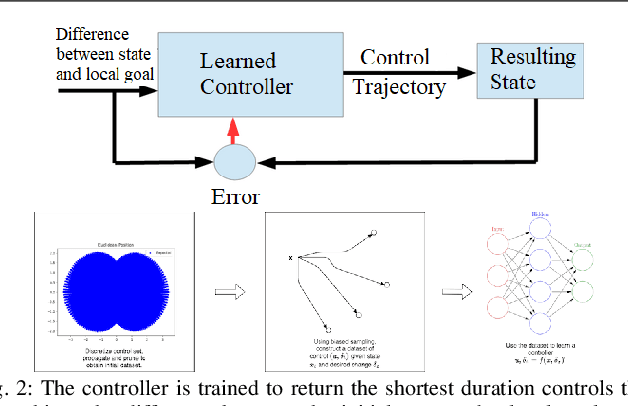

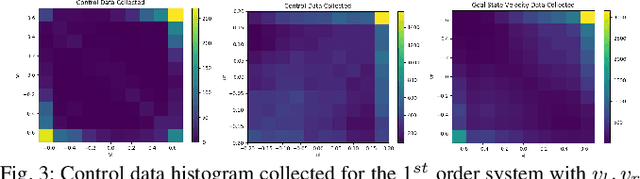

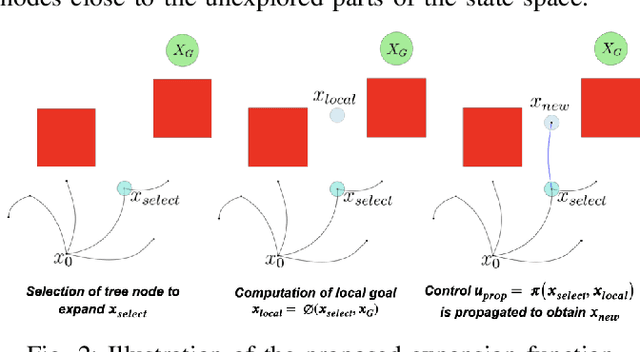

Abstract: This paper aims to improve the path quality and computational efficiency of kinodynamic planners used for vehicular systems. It proposes a learning framework for identifying promising controls during the expansion process of sampling-based motion planners for systems with dynamics. Offline, the learning process is trained to return the highest-quality control that reaches a local goal state (i.e., a waypoint) in the absence of obstacles, taking as input the difference vector between the current state and the local goal state. The data generation scheme provides bounds on the target dispersion and uses state space pruning to ensure high-quality controls. By focusing on the system's dynamics, this process is data-efficient and takes place once for a dynamical system, so that it can be used for different environments with modular expansion functions. This work integrates the proposed learning process with (a) an exploratory expansion function that generates waypoints with biased coverage over the reachable space, and (b) an exploitative expansion function for mobile robots that generates waypoints using medial axis information. This paper evaluates the learning process and the corresponding planners for first- and second-order differential drive systems. The results show that the proposed integration of learning and planning can produce better-quality paths than kinodynamic planning with random controls, with fewer iterations and less computation time.

* Presented at the Machine Learning for Motion Planning (MLMP) Workshop at ICRA 2021, Xi'an, China
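The offline data generation described in the abstract above can be illustrated with a minimal sketch. Everything here is an assumption made for illustration and is not taken from the paper: the function names `propagate` and `build_dataset`, the control ranges, the use of a uniform grid as a stand-in for state space pruning, and the use of control duration as the quality measure. It samples constant controls for a first-order differential drive in an obstacle-free space, records the resulting difference vector from the start state, and keeps only the lowest-cost control per grid cell.

```python
import math
import random


def propagate(v, w, duration, dt=0.01):
    """Euler-integrate a first-order differential drive from the origin
    under a constant control (v, w) and return the final (x, y, theta)."""
    x = y = theta = 0.0
    for _ in range(int(round(duration / dt))):
        x += v * math.cos(theta) * dt
        y += v * math.sin(theta) * dt
        theta += w * dt
    return x, y, theta


def build_dataset(num_samples=2000, cell=0.1, seed=0):
    """Return (difference_vector, control) pairs, one per occupied grid cell.

    Assumption: a grid over the reached states stands in for the pruning
    step, and a shorter duration is treated as a higher-quality control.
    """
    rng = random.Random(seed)
    best = {}  # grid cell -> (cost, difference vector, control)
    for _ in range(num_samples):
        v = rng.uniform(0.0, 1.0)         # forward velocity (hypothetical range)
        w = rng.uniform(-1.0, 1.0)        # angular velocity (hypothetical range)
        duration = rng.uniform(0.1, 1.0)  # how long the control is applied
        diff = propagate(v, w, duration)  # start is the origin, so the end state is the difference
        key = tuple(round(c / cell) for c in diff)
        if key not in best or duration < best[key][0]:
            best[key] = (duration, diff, (v, w, duration))
    return [(diff, ctrl) for _, diff, ctrl in best.values()]
```

The resulting pairs could serve as supervised training data for a model that maps a difference vector to a control. Because no obstacles or environment geometry enter the loop, the dataset depends only on the dynamics, which is the property the abstract credits for reuse across environments.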

Improving Kinodynamic Planners for Vehicular Navigation with Learned Goal-Reaching Controllers

Oct 08, 2021

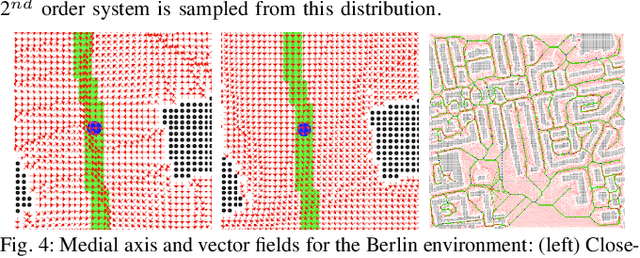

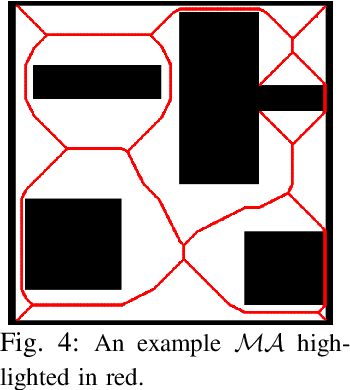

Abstract: This paper aims to improve the path quality and computational efficiency of sampling-based kinodynamic planners for vehicular navigation. It proposes a learning framework for identifying promising controls during the expansion process of sampling-based planners. Given a dynamics model, a reinforcement learning process is trained offline to return a low-cost control that reaches a local goal state (i.e., a waypoint) in the absence of obstacles. Because it focuses on the system's dynamics and has no knowledge of the environment, this process is data-efficient and takes place once for a robotic system. In this way, it can be reused in different environments. Online, the planner generates local goal states for the learned controller, first in an informed manner to bias towards the goal and subsequently in an exploratory, random manner. For the informed expansion, local goal states are generated either via (a) medial axis information in environments with obstacles, or (b) wavefront information for setups with traversability costs. The learning process and the resulting planning framework are evaluated for first- and second-order differential drive systems, as well as a physically simulated Segway robot. The results show that the proposed integration of learning and planning can produce higher-quality paths than sampling-based kinodynamic planning with random controls, with fewer iterations and less computation time.
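The expansion strategy in the abstract above can be sketched roughly as follows. This is an illustration under stated assumptions, not the paper's implementation: `pick_local_goal` and `steer_toward` are hypothetical names, the node is a plain dictionary, the informed waypoint is passed in rather than computed from a medial axis or wavefront, and a simple proportional heuristic stands in for the learned controller.

```python
import math
import random


def pick_local_goal(node, informed_goal, bounds, rng):
    """Choose a local goal (waypoint) for expanding a tree node.

    Assumption: the first expansion of a node uses the informed waypoint,
    and every later expansion samples a waypoint uniformly at random.
    """
    if node["expansions"] == 0:
        goal = informed_goal
    else:
        (xmin, xmax), (ymin, ymax) = bounds
        goal = (rng.uniform(xmin, xmax), rng.uniform(ymin, ymax))
    node["expansions"] += 1
    return goal


def steer_toward(state, local_goal, max_v=1.0, max_w=1.0, duration=0.5):
    """Hypothetical stand-in for the learned controller.

    Returns a control (v, w, duration) for a differential drive that turns
    toward the local goal and moves forward, clipped to the control limits.
    """
    x, y, theta = state
    gx, gy = local_goal
    error = math.atan2(gy - y, gx - x) - theta
    error = math.atan2(math.sin(error), math.cos(error))  # wrap to [-pi, pi]
    w = max(-max_w, min(max_w, error / duration))
    v = min(max_v, math.hypot(gx - x, gy - y) / duration)
    return v, w, duration


# Usage: expand one node twice, first informed, then exploratory.
rng = random.Random(0)
node = {"state": (0.0, 0.0, 0.0), "expansions": 0}
bounds = ((0.0, 2.0), (0.0, 2.0))
for _ in range(2):
    waypoint = pick_local_goal(node, (1.0, 0.0), bounds, rng)
    control = steer_toward(node["state"], waypoint)
```

In a full planner, each returned control would be propagated through the dynamics model and collision-checked before the resulting state is added to the tree; those steps are omitted here.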
