Timos Papadopoulos

Automated bird sound recognition in realistic settings

Sep 04, 2018

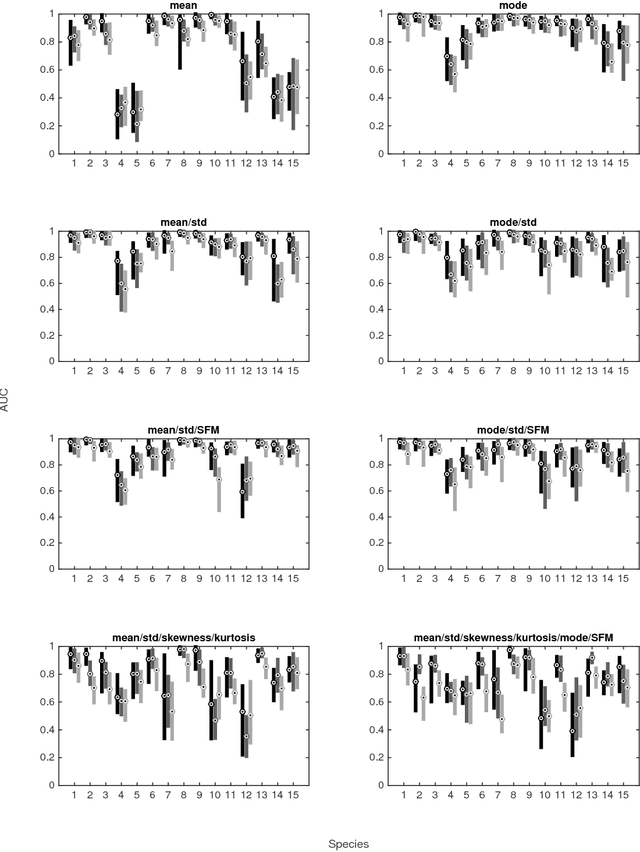

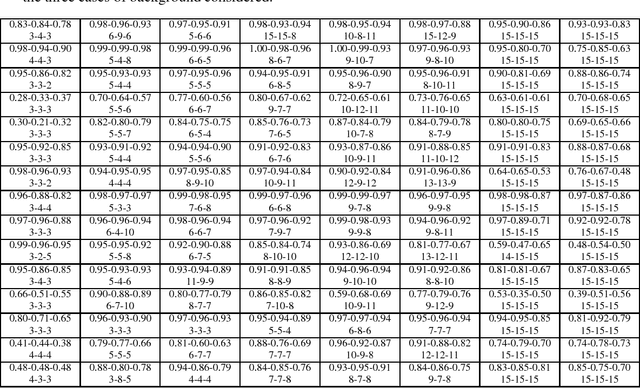

Abstract: We evaluated the effectiveness of an automated bird sound identification system in a situation that emulates a realistic, typical application. We trained classification algorithms on a crowd-sourced collection of bird audio recording data and restricted our training methods to be completely free of manual intervention. The approach is hence directly applicable to the analysis of multiple species collections, with labelling provided by crowd-sourced collection. We evaluated the performance of the bird sound recognition system on a realistic number of candidate classes, corresponding to real conditions. We investigated the use of two canonical classification methods, chosen due to their widespread use and ease of interpretation, namely a k Nearest Neighbour (kNN) classifier with histogram-based features and a Support Vector Machine (SVM) with time-summarisation features. We further investigated the use of a certainty measure, derived from the output probabilities of the classifiers, to enhance the interpretability and reliability of the class decisions. Our results demonstrate that both identification methods achieved similar performance, but we argue that the use of the kNN classifier offers somewhat more flexibility. Furthermore, we show that employing an outcome certainty measure provides a valuable and consistent indicator of the reliability of classification results. Our use of generic training data and our investigation of probabilistic classification methodologies that can flexibly address the variable number of candidate species/classes expected to be encountered in the field directly contribute to the development of a practical bird sound identification system with potentially global application. Further, we show that certainty measures associated with identification outcomes can significantly contribute to the practical usability of the overall system.
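To make the idea of a certainty measure derived from classifier output probabilities concrete, here is a minimal sketch. The abstract does not define the measure, so the margin between the two largest class probabilities used here is an assumption, as are the function names and the toy two-dimensional features (standing in for histogram-based features).

```python
import numpy as np

def knn_class_probabilities(train_x, train_y, query, k, n_classes):
    """Fraction of the k nearest training points that belong to each class."""
    distances = np.linalg.norm(train_x - query, axis=1)
    nearest = np.argsort(distances, kind="stable")[:k]
    votes = np.bincount(train_y[nearest], minlength=n_classes)
    return votes / k

def certainty(probabilities):
    """Assumed certainty measure: margin between the two largest probabilities."""
    top_two = np.sort(probabilities)[-2:]
    return float(top_two[1] - top_two[0])

# Toy training set: two well-separated classes (hypothetical data).
train_x = np.array([[0.0, 0.0], [0.1, 0.0], [0.0, 0.1],
                    [1.0, 1.0], [1.1, 1.0], [1.0, 1.1]])
train_y = np.array([0, 0, 0, 1, 1, 1])

# A query close to class 0, and a query lying between the two classes.
clear_probs = knn_class_probabilities(
    train_x, train_y, np.array([0.05, 0.05]), k=3, n_classes=2)
ambiguous_probs = knn_class_probabilities(
    train_x, train_y, np.array([0.5, 0.45]), k=5, n_classes=2)

clear_certainty = certainty(clear_probs)
ambiguous_certainty = certainty(ambiguous_probs)
```

A decision with a low margin could then be flagged for manual review rather than reported as a confident identification.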

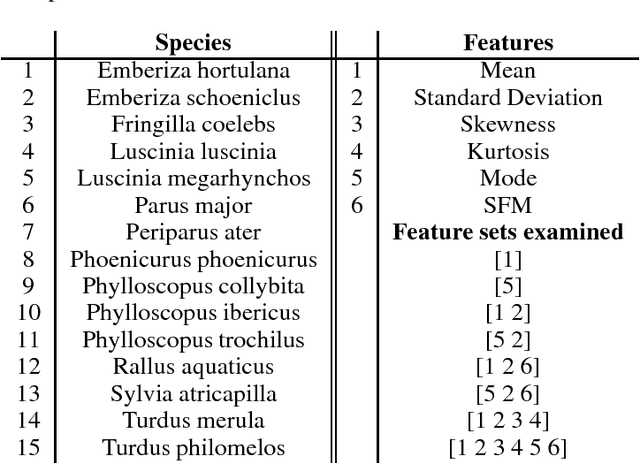

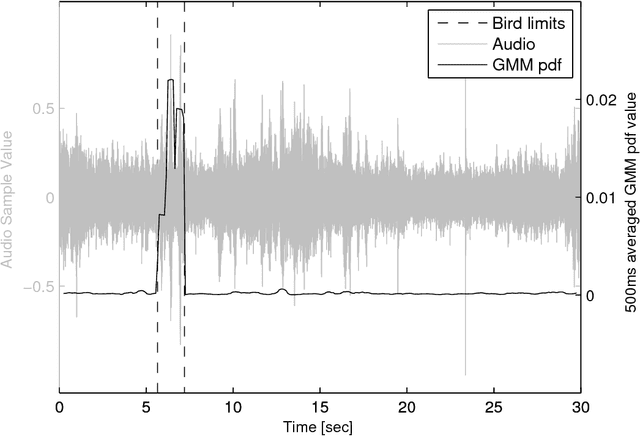

Detecting bird sound in unknown acoustic background using crowdsourced training data

May 24, 2015

Abstract: Biodiversity monitoring using audio recordings is achievable at a truly global scale via large-scale deployment of inexpensive, unattended recording stations or by large-scale crowdsourcing using recording and species recognition on mobile devices. The ability, however, to reliably identify vocalising animal species is limited by the fact that acoustic signatures of interest in such recordings are typically embedded in a diverse and complex acoustic background. To avoid the problems associated with modelling such backgrounds, we build generative models of bird sounds and use the concept of novelty detection to screen recordings to detect sections of data which are likely bird vocalisations. We present detection results against various acoustic environments and different signal-to-noise ratios. We discuss the issues related to selecting the cost function and setting detection thresholds in such algorithms. Our methods are designed to be scalable and automatically applicable to arbitrary selections of species depending on the specific geographic region and time period of deployment.
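The screening idea can be illustrated with a small sketch: fit a generative model to bird-sound feature frames only, then treat any frame whose likelihood under that model falls below a threshold as novel (that is, not bird). The abstract does not specify the model or threshold rule, so the single diagonal Gaussian, the 5th-percentile threshold, and the synthetic feature frames below are all assumptions for illustration.

```python
import numpy as np

def fit_diag_gaussian(frames):
    """Estimate per-dimension mean and variance from training frames."""
    mean = frames.mean(axis=0)
    var = frames.var(axis=0) + 1e-6  # small floor avoids division by zero
    return mean, var

def log_likelihood(frames, mean, var):
    """Log-likelihood of each frame under a diagonal Gaussian."""
    return -0.5 * np.sum(np.log(2 * np.pi * var) + (frames - mean) ** 2 / var, axis=1)

rng = np.random.default_rng(0)

# Synthetic stand-ins for feature frames (hypothetical data, not real audio).
bird_train = rng.normal(loc=3.0, scale=0.5, size=(500, 4))
bird_like = rng.normal(loc=3.0, scale=0.5, size=(200, 4))
background = rng.normal(loc=0.0, scale=0.5, size=(200, 4))

# Only bird data is modelled; the background is never modelled explicitly.
mean, var = fit_diag_gaussian(bird_train)

# Assumed threshold rule: accept frames scoring above the 5th percentile
# of the training log-likelihoods.
threshold = np.percentile(log_likelihood(bird_train, mean, var), 5)

bird_detected = log_likelihood(bird_like, mean, var) >= threshold
background_detected = log_likelihood(background, mean, var) >= threshold
```

Raising or lowering the percentile trades missed vocalisations against false detections, which is the kind of threshold-setting question the abstract raises.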
