Swaroopa Dola

RBR4DNN: Requirements-based Testing of Neural Networks

Apr 03, 2025

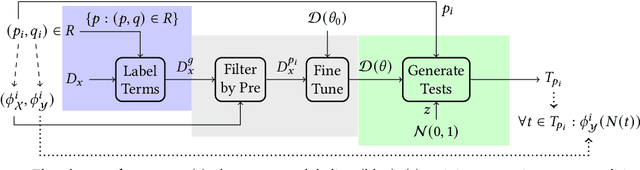

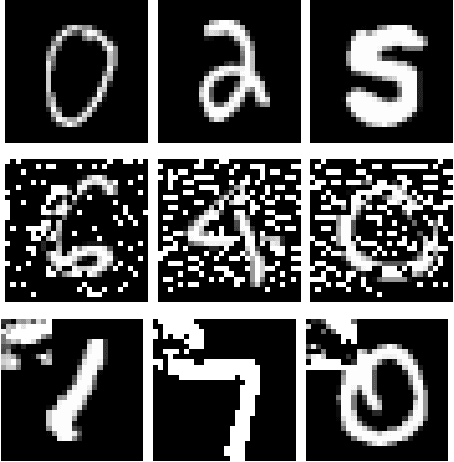

Abstract:Deep neural network (DNN) testing is crucial for the reliability and safety of critical systems, where failures can have severe consequences. Although various techniques have been developed to create robustness test suites, requirements-based testing for DNNs remains largely unexplored -- yet such tests are recognized as an essential component of software validation of critical systems. In this work, we propose a requirements-based test suite generation method that uses structured natural language requirements formulated in a semantic feature space to create test suites by prompting text-conditional latent diffusion models with the requirement precondition and then using the associated postcondition to define a test oracle to judge outputs of the DNN under test. We investigate the approach using fine-tuned variants of pre-trained generative models. Our experiments on the MNIST, CelebA-HQ, ImageNet, and autonomous car driving datasets demonstrate that the generated test suites are realistic, diverse, consistent with preconditions, and capable of revealing faults.

Distribution-Aware Testing of Neural Networks Using Generative Models

Feb 26, 2021

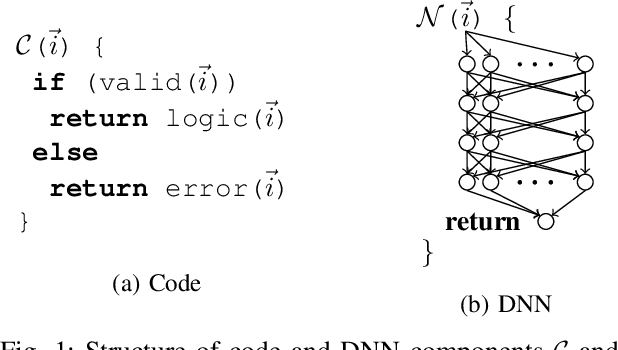

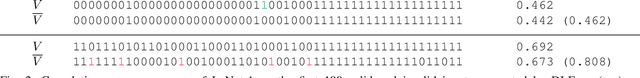

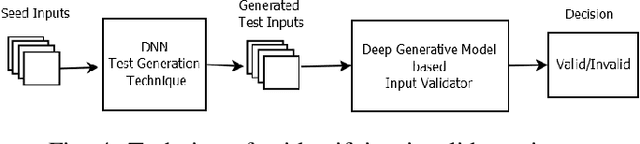

Abstract:The reliability of software that has a Deep Neural Network (DNN) as a component is urgently important today given the increasing number of critical applications being deployed with DNNs. The need for reliability raises a need for rigorous testing of the safety and trustworthiness of these systems. In the last few years, there have been a number of research efforts focused on testing DNNs. However the test generation techniques proposed so far lack a check to determine whether the test inputs they are generating are valid, and thus invalid inputs are produced. To illustrate this situation, we explored three recent DNN testing techniques. Using deep generative model based input validation, we show that all the three techniques generate significant number of invalid test inputs. We further analyzed the test coverage achieved by the test inputs generated by the DNN testing techniques and showed how invalid test inputs can falsely inflate test coverage metrics. To overcome the inclusion of invalid inputs in testing, we propose a technique to incorporate the valid input space of the DNN model under test in the test generation process. Our technique uses a deep generative model-based algorithm to generate only valid inputs. Results of our empirical studies show that our technique is effective in eliminating invalid tests and boosting the number of valid test inputs generated.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge