Swann Perarnau

Offline Reinforcement-Learning-Based Power Control for Application-Agnostic Energy Efficiency

Jan 16, 2026

Abstract: Energy efficiency has become an integral aspect of modern computing infrastructure design, impacting the performance, cost, scalability, and durability of production systems. The incorporation of power actuation and sensing capabilities in CPU designs is indicative of this, enabling the deployment of system software that can actively monitor and adjust energy consumption and performance at runtime. While reinforcement learning (RL) would seem ideal for the design of such energy efficiency control systems, online training presents challenges ranging from the lack of proper models for setting up an adequate simulated environment, to perturbation (noise) and reliability issues, if training is deployed on a live system. In this paper we discuss the use of offline reinforcement learning as an alternative approach for the design of an autonomous CPU power controller, with the goal of improving the energy efficiency of parallel applications at runtime without unduly impacting their performance. Offline RL sidesteps the issues incurred by online RL training by leveraging a dataset of state transitions collected from arbitrary policies prior to training. Our methodology applies offline RL to a gray-box approach to energy efficiency, combining online application-agnostic performance data (e.g., heartbeats) and hardware performance counters to ensure that the scientific objectives are met with limited performance degradation. Evaluating our method on a variety of compute-bound and memory-bound benchmarks and controlling power on a live system through Intel's Running Average Power Limit, we demonstrate that such an offline-trained agent can substantially reduce energy consumption at a tolerable performance degradation cost.

A Reinforcement Learning Approach for Performance-aware Reduction in Power Consumption of Data Center Compute Nodes

Aug 15, 2023

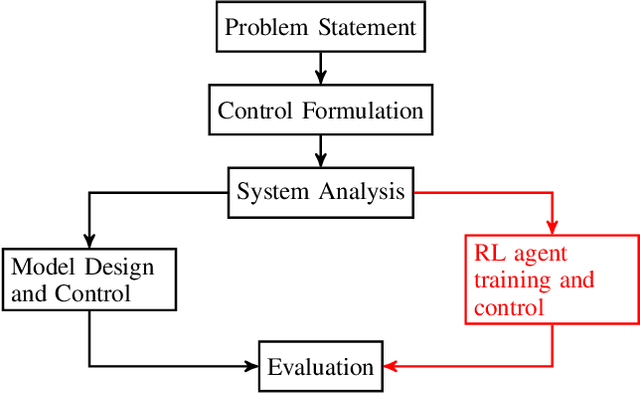

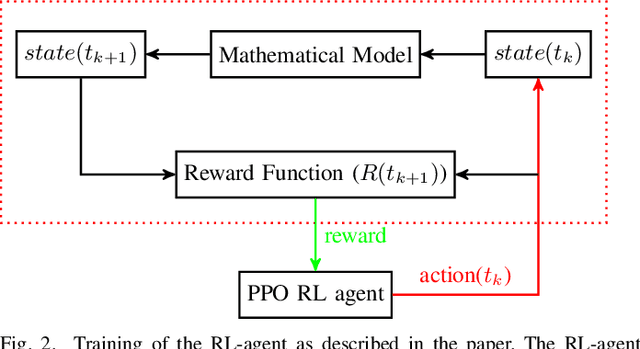

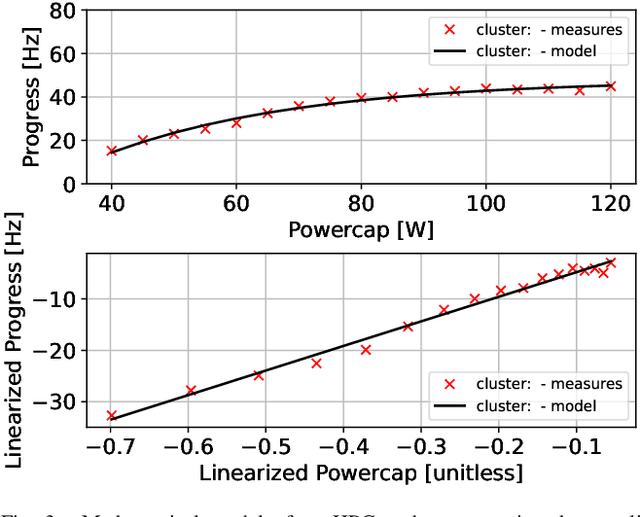

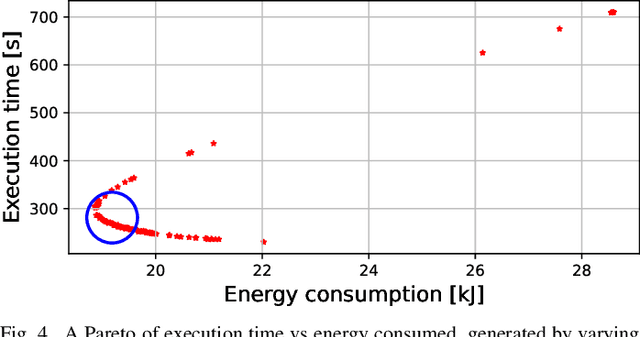

Abstract: As Exascale computing becomes a reality, the energy needs of compute nodes in cloud data centers will continue to grow. A common approach to reducing this energy demand is to limit the power consumption of hardware components when workloads are experiencing bottlenecks elsewhere in the system. However, designing a resource controller capable of detecting and limiting power consumption on the fly is a complex issue, and such a controller can also adversely impact application performance. In this paper, we explore the use of Reinforcement Learning (RL) to design a power capping policy on cloud compute nodes using observations of current power consumption and instantaneous application performance (heartbeats). By leveraging the Argo Node Resource Management (NRM) software stack in conjunction with the Intel Running Average Power Limit (RAPL) hardware control mechanism, we design an agent to control the maximum supplied power to processors without compromising application performance. Employing a Proximal Policy Optimization (PPO) agent to learn an optimal policy on a mathematical model of the compute nodes, we use the STREAM benchmark to demonstrate and evaluate how a trained agent running on actual hardware can take actions that balance power consumption and application performance.
