Stefan Debener

Neural Speech Tracking in a Virtual Acoustic Environment: Audio-Visual Benefit for Unscripted Continuous Speech

Jan 14, 2025

Abstract: The audio-visual benefit in speech perception, where congruent visual input enhances auditory processing, is well documented across age groups, particularly in challenging listening conditions and among individuals with varying hearing abilities. However, most studies rely on highly controlled laboratory environments with scripted stimuli. Here, we examine the audio-visual benefit using unscripted, natural speech from untrained speakers within a virtual acoustic environment. Using electroencephalography (EEG) and cortical speech tracking, we assessed neural responses across audio-visual, audio-only, visual-only, and masked-lip conditions to isolate the role of lip movements. Additionally, we analysed individual differences in acoustic and visual features of the speakers, including pitch, jitter, and lip openness, to explore their influence on the audio-visual speech tracking benefit. Results showed a significant audio-visual enhancement in speech tracking with background noise, with the masked-lip condition performing similarly to the audio-only condition, emphasizing the importance of lip movements in adverse listening situations. Our findings reveal the feasibility of cortical speech tracking with naturalistic stimuli and underscore the impact of individual speaker characteristics on audio-visual integration in real-world listening contexts.

A Portable Solution for Simultaneous Human Movement and Mobile EEG Acquisition: Readiness Potentials for Basketball Free-throw Shooting

Jan 10, 2025

Abstract: Advances in wireless electroencephalography (EEG) technology promise to record brain-electrical activity in everyday situations. To better understand the relationship between brain activity and natural behavior, it is necessary to monitor human movement patterns. Here, we present a pocketable setup consisting of two smartphones to simultaneously capture human posture and EEG signals. We asked 26 basketball players to shoot 120 free throws each. First, we investigated whether our setup allows us to capture the readiness potential (RP) that precedes voluntary actions. Second, we investigated whether the RP differs between successful and unsuccessful free-throw attempts. The results confirmed the presence of the RP, but the amplitude of the RP was not related to shooting success. However, offline analysis of real-time human pose signals derived from a smartphone camera revealed pose differences between successful and unsuccessful shots for some individuals. We conclude that a highly portable, low-cost and lightweight acquisition setup, consisting of two smartphones and a head-mounted wireless EEG amplifier, is sufficient to monitor complex human movement patterns and associated brain dynamics outside the laboratory.

Mobile EEG artifact correction on limited hardware using artifact subspace reconstruction

Apr 28, 2022

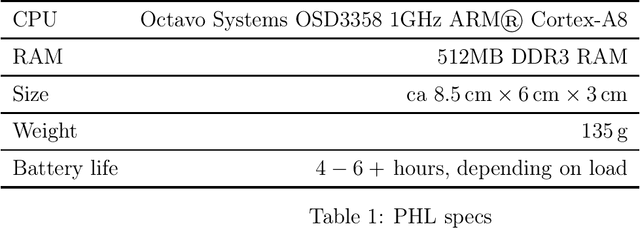

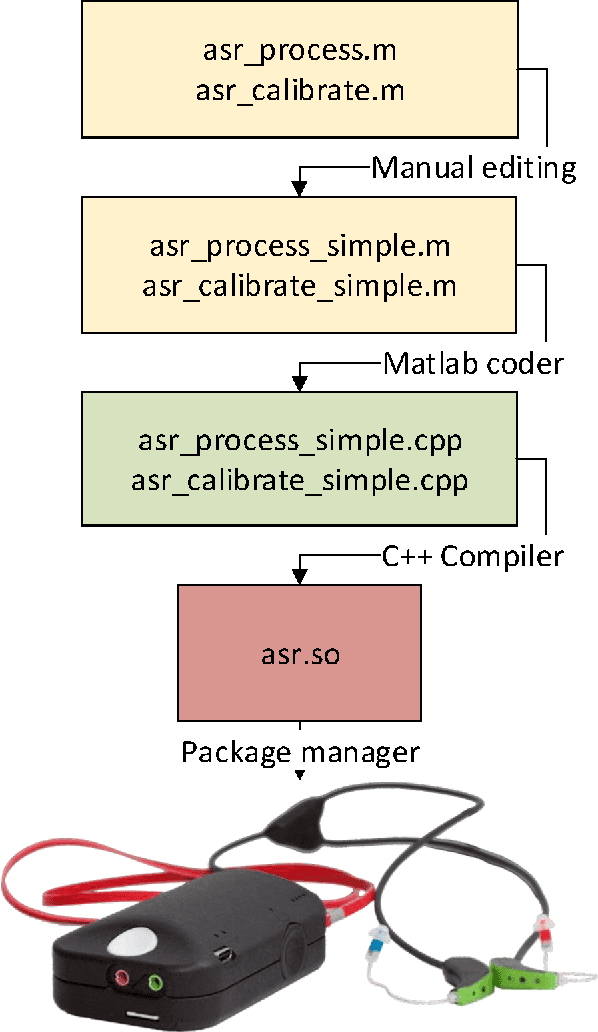

Abstract: Biological data like electroencephalography (EEG) are typically contaminated by unwanted signals, called artifacts. Therefore, many applications dealing with biological data with low signal-to-noise ratio require robust artifact correction. For some applications like brain-computer interfaces (BCI), the artifact correction needs to be real-time capable. Artifact subspace reconstruction (ASR) is a statistical method for artifact reduction in EEG. However, in its current implementation, ASR cannot easily be used for mobile data recordings on limited hardware. In this report, we add to the growing field of portable, online signal processing methods by describing an implementation of ASR for limited hardware like single-board computers. We describe the architecture, the process of translating and compiling a Matlab codebase for a research platform, and a set of validation tests using publicly available data sets. The implementation of ASR on limited, portable hardware facilitates the online interpretation of EEG signals acquired outside of the laboratory environment.
