Soochang Lee

Hardware Implementation of Spiking Neural Networks Using Time-To-First-Spike Encoding

Jun 09, 2020

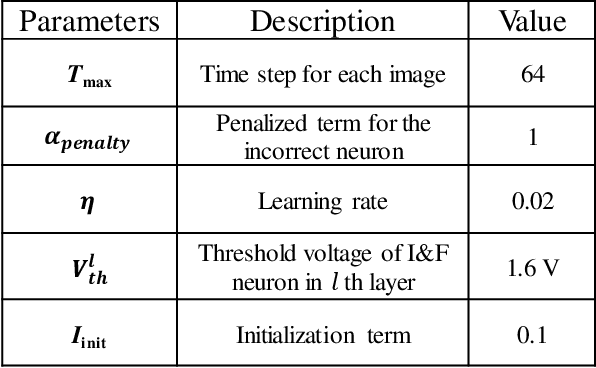

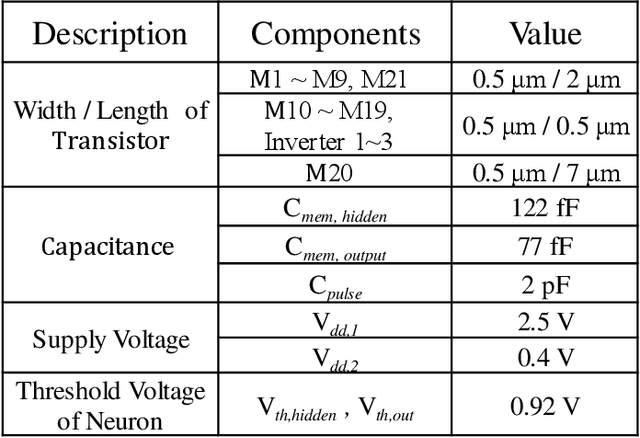

Abstract: Hardware-based spiking neural networks (SNNs) are regarded as promising candidates for cognitive computing systems due to their low power consumption and highly parallel operation. In this work, we use temporal backpropagation to train an SNN in which the firing time carries information. The temporally encoded SNN with 512 hidden neurons showed an accuracy of 96.90% on the MNIST test set. Furthermore, the effect of device variation on the accuracy of the temporally encoded SNN is investigated and compared with that of the rate-encoded network. In the hardware configuration of our SNN, NOR-type analog memory having an asymmetric floating gate is used as a synaptic device. In addition, we propose a neuron circuit including a refractory period generator for the temporally encoded SNN. The performance of the 2-layer neural network consisting of synapses and the proposed neurons is evaluated through circuit simulation using SPICE. The network with 128 hidden neurons showed an accuracy of 94.9%, a 0.1% reduction compared to that of the system simulation of the MNIST dataset. Finally, the latency and power consumption of each block constituting the temporal network are analyzed and compared with those of the rate-encoded network depending on the total time step. Assuming that the total time step number of the network is 256, the temporal network consumes 15.12 times lower power than the rate-encoded network and can make decisions 5.68 times faster.
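To illustrate the difference between the two encodings the abstract compares, here is a minimal Python sketch. It is not the paper's implementation: the linear intensity-to-time mapping, the deterministic rate code, and the function names `ttfs_encode` and `rate_encode_count` are all assumptions chosen for illustration. Only the window of 256 time steps comes from the abstract.

```python
# Illustrative sketch only: the linear mappings below are assumptions,
# not the encoding scheme reported in the paper.

def ttfs_encode(intensities, total_steps=256):
    """Time-to-first-spike: each input emits at most one spike.

    Brighter inputs (closer to 1.0) fire earlier; zero inputs never fire.
    """
    times = []
    for x in intensities:
        if x <= 0.0:
            times.append(None)  # no spike for a silent input
        else:
            times.append(round((1.0 - x) * (total_steps - 1)))
    return times


def rate_encode_count(intensities, total_steps=256):
    """Rate code: spike count within the window is proportional to intensity."""
    return [round(x * total_steps) for x in intensities]


pixels = [0.0, 0.25, 1.0]  # hypothetical normalized pixel intensities
spike_times = ttfs_encode(pixels)
spike_counts = rate_encode_count(pixels)

# Total spikes each scheme needs to represent the same three pixels.
ttfs_total_spikes = sum(t is not None for t in spike_times)
rate_total_spikes = sum(spike_counts)
```

Under these assumptions the temporal code uses at most one spike per input, whereas the rate code needs a spike count that grows with the window length. That is consistent in spirit with the power and latency advantage the abstract reports, though the sketch does not reproduce those figures.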

Unsupervised Online Learning With Multiple Postsynaptic Neurons Based on Spike-Timing-Dependent Plasticity Using a TFT-Type NOR Flash Memory Array

Nov 17, 2018

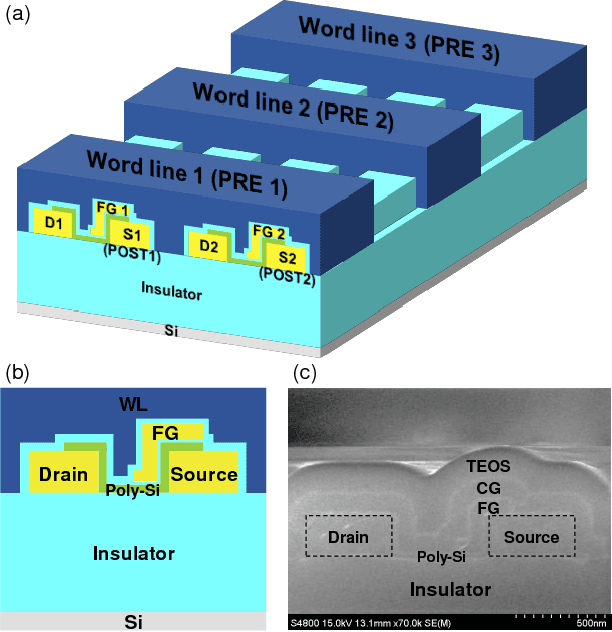

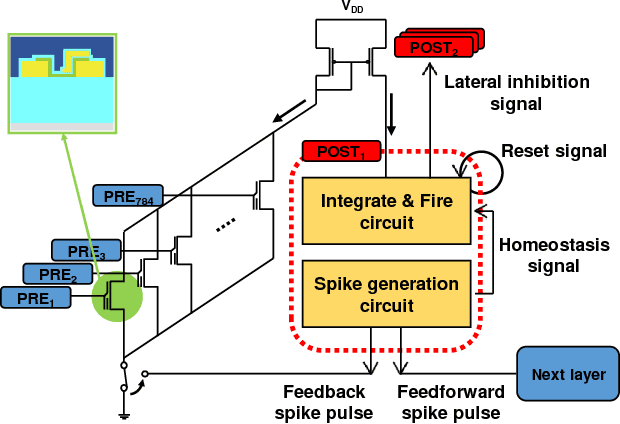

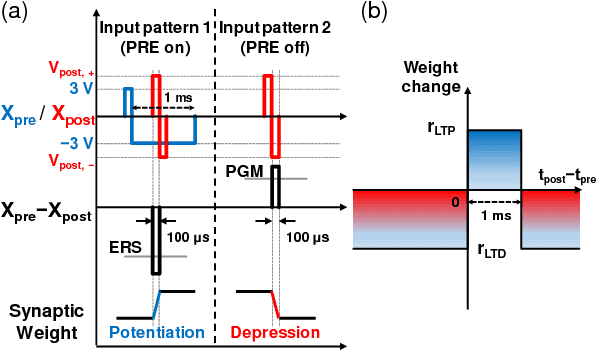

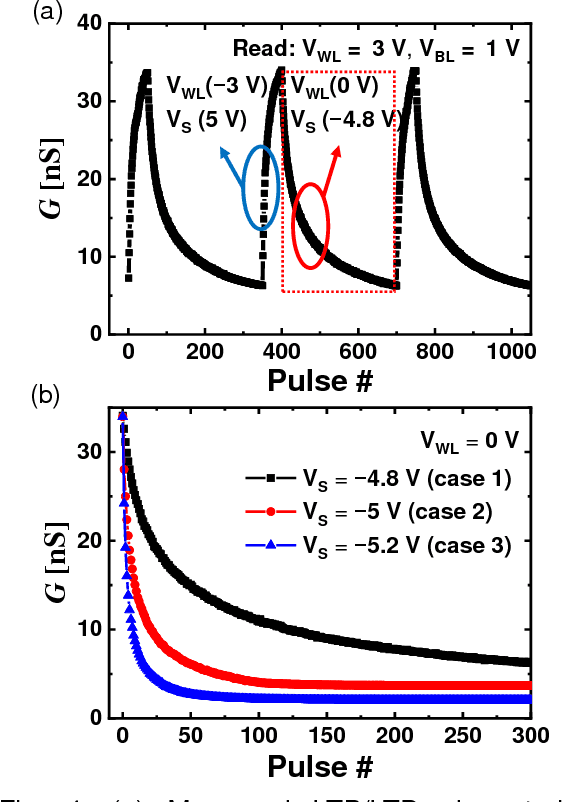

Abstract: We present a two-layer fully connected neuromorphic system based on a thin-film transistor (TFT)-type NOR flash memory array with multiple postsynaptic (POST) neurons. Unsupervised online learning by spike-timing-dependent plasticity (STDP) on the binary MNIST handwritten datasets is implemented, and its recognition result is determined by measuring the firing rate of the POST neurons. Using the proposed learning scheme, we investigate the impact of the number of POST neurons on the recognition rate. In this neuromorphic system, a lateral inhibition function and a homeostatic property are exploited for competitive learning among multiple POST neurons. The simulation results demonstrate unsupervised online learning of the full black-and-white MNIST handwritten digits by STDP, which indicates the performance of pattern recognition and classification without preprocessing of input patterns.
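The competitive learning described in this abstract can be sketched as follows. This is a simplified illustration and not the paper's learning scheme: the winner-take-all form of lateral inhibition, the threshold increase used for homeostasis, the fixed-step weight update, and all names and parameter values (`stdp_step`, `lr`, `theta_inc`) are assumptions.

```python
# Illustrative sketch only: a simplified STDP-like rule with winner-take-all
# lateral inhibition and threshold-based homeostasis. All rules and constants
# here are assumptions, not the scheme used in the paper.

def stdp_step(weights, thresholds, pattern, lr=0.1, theta_inc=0.5):
    """Present one binary input pattern and update the winning POST neuron.

    weights    -- list of per-neuron weight lists, values kept in [0, 1]
    thresholds -- list of per-neuron firing thresholds (modified in place)
    pattern    -- list of 0/1 inputs (e.g. black-and-white pixels)
    Returns the index of the neuron that fired, or None if none fired.
    """
    # Net drive of each POST neuron relative to its threshold.
    drive = [sum(w * x for w, x in zip(row, pattern)) - th
             for row, th in zip(weights, thresholds)]

    # Lateral inhibition (assumed winner-take-all): only the strongest fires.
    winner = max(range(len(drive)), key=drive.__getitem__)
    if drive[winner] <= 0:
        return None

    # STDP-like update: potentiate synapses from active inputs,
    # depress synapses from inactive inputs, clipped to [0, 1].
    row = weights[winner]
    for i, x in enumerate(pattern):
        if x:
            row[i] = min(1.0, row[i] + lr)
        else:
            row[i] = max(0.0, row[i] - lr)

    # Homeostasis (assumed): raise the winner's threshold so that
    # other neurons get a chance to win on later patterns.
    thresholds[winner] += theta_inc
    return winner


weights = [[0.5, 0.5, 0.5, 0.5], [0.6, 0.6, 0.4, 0.4]]
thresholds = [0.5, 0.5]
fired = stdp_step(weights, thresholds, [1, 1, 0, 0])
```

In this toy run the second neuron wins, strengthens its weights toward the presented pattern, and has its threshold raised, while the first neuron is left unchanged.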
