Simon Letzgus

XpertAI: uncovering model strategies for sub-manifolds

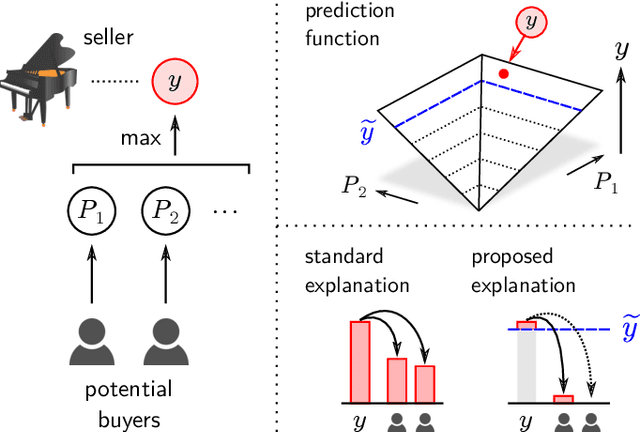

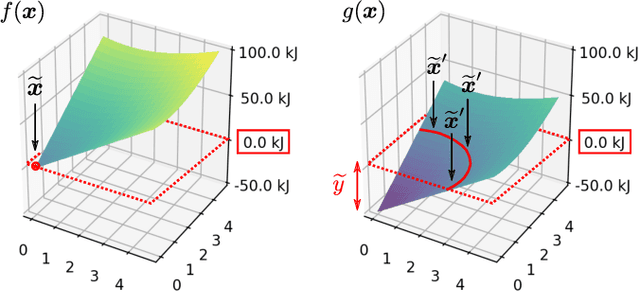

Mar 12, 2024

Abstract: In recent years, Explainable AI (XAI) methods have facilitated profound validation and knowledge extraction from ML models. While XAI has been extensively studied for classification, few solutions have addressed the challenges specific to regression models. In regression, explanations need to be precisely formulated to address specific user queries (e.g., distinguishing between 'Why is the output above 0?' and 'Why is the output above 50?'). They should furthermore reflect the model's behavior on the relevant data sub-manifold. In this paper, we introduce XpertAI, a framework that disentangles the prediction strategy into multiple range-specific sub-strategies and allows the formulation of precise queries about the model (the 'explanandum') as a linear combination of those sub-strategies. XpertAI is formulated generally to work alongside popular XAI attribution techniques based on occlusion, gradient integration, or reverse propagation. Qualitative and quantitative results demonstrate the benefits of our approach.

Towards transparent and robust data-driven wind turbine power curve models

Apr 19, 2023

Abstract: Wind turbine power curve models translate ambient conditions into turbine power output. They are essential for energy yield prediction and turbine performance monitoring. In recent years, data-driven machine learning methods have outperformed parametric, physics-informed approaches. However, they are often criticised for being opaque "black boxes", which raises concerns regarding their robustness in non-stationary environments, such as those faced by wind turbines. We therefore introduce an explainable artificial intelligence (XAI) framework to investigate and validate strategies learned by data-driven power curve models from operational SCADA data. It combines domain-specific considerations with Shapley values and the latest findings from XAI for regression. Our results suggest that learned strategies can be better indicators of model robustness than validation or test set errors. Moreover, we observe that highly complex, state-of-the-art ML models are prone to learning physically implausible strategies. Consequently, we compare several measures to ensure physically reasonable model behaviour. Lastly, we propose the utilization of XAI in the context of wind turbine performance monitoring, by disentangling environmental and technical effects that cause deviations from an expected turbine output. We hope our work can guide domain experts towards training and selecting more transparent and robust data-driven wind turbine power curve models.

Toward Explainable AI for Regression Models

Dec 21, 2021

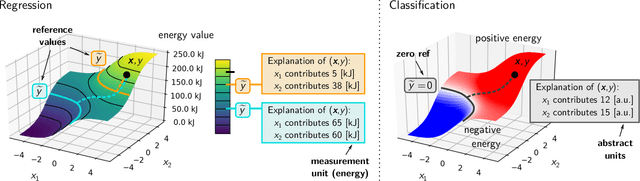

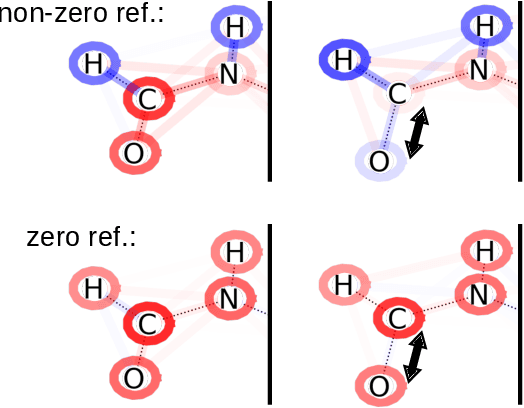

Abstract: In addition to the impressive predictive power of machine learning (ML) models, explanation methods have more recently emerged that enable the interpretation of complex non-linear learning models such as deep neural networks. Gaining a better understanding is especially important, e.g., for safety-critical ML applications or medical diagnostics. While such Explainable AI (XAI) techniques have reached significant popularity for classifiers, so far little attention has been devoted to XAI for regression models (XAIR). In this review, we clarify the fundamental conceptual differences of XAI for regression and classification tasks, establish novel theoretical insights and analysis for XAIR, provide demonstrations of XAIR on genuine practical regression problems, and finally discuss the challenges remaining for the field.
