Shahin Tajik

A Survey and Perspective on Artificial Intelligence for Security-Aware Electronic Design Automation

Apr 21, 2022

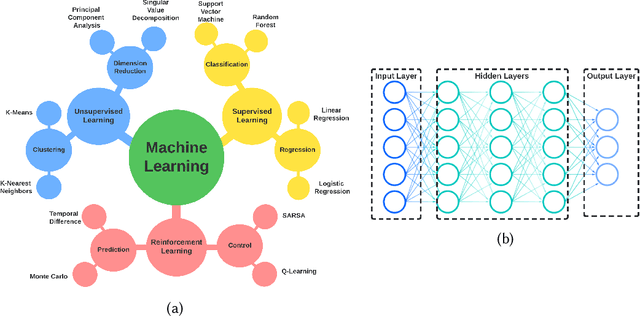

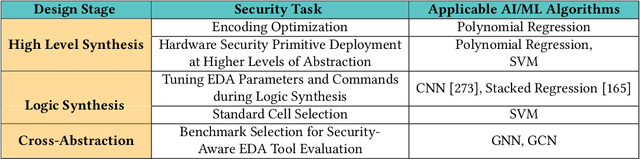

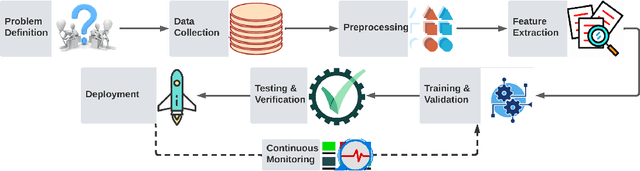

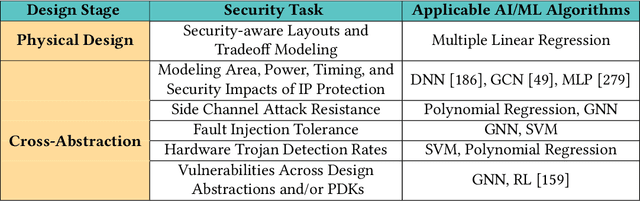

Abstract: Artificial intelligence (AI) and machine learning (ML) techniques have been increasingly used in several fields to improve performance and the level of automation. In recent years, this use has increased exponentially due to advances in high-performance computing and the ever-increasing size of data. One such field is hardware design, specifically the design of digital and analog integrated circuits (ICs), where AI/ML techniques have been used extensively to address ever-increasing design complexity, aggressive time-to-market, and the growing number of ubiquitous interconnected devices (IoT). However, the security concerns and issues related to IC design have been largely overlooked. In this paper, we summarize the state of the art in AI/ML for circuit design/optimization, security and engineering challenges, research in security-aware CAD/EDA, and future research directions and needs for using AI/ML for security-aware circuit design.

Physically Unclonable Functions and AI: Two Decades of Marriage

Aug 26, 2020

Abstract: The current chapter aims at establishing a relationship between artificial intelligence (AI) and hardware security. Such a connection between AI and software security has been confirmed and well reviewed in the relevant literature. The main focus here is to explore the methods borrowed from AI to assess the security of a hardware primitive, namely physically unclonable functions (PUFs), which have found applications in cryptographic protocols, e.g., authentication and key generation. Metrics and procedures devised for this are further discussed. Moreover, by reviewing PUFs designed by applying AI techniques, we give insight into future research directions in this area.

Artificial Neural Networks and Fault Injection Attacks

Aug 17, 2020

Abstract: This chapter is on the security assessment of artificial intelligence (AI) and neural network (NN) accelerators in the face of fault injection attacks. More specifically, it discusses the assets on these platforms and compares them with those known and well studied in the field of cryptographic systems. This is a crucial step that must be taken in order to define the threat models precisely. With respect to that, fault attacks mounted on NNs and AI accelerators are explored.
