Sergei Monakhov

Probabilistic Method of Measuring Linguistic Productivity

Aug 24, 2023

Abstract: In this paper I propose a new way of measuring linguistic productivity that objectively assesses the ability of an affix to be used to coin new complex words and, unlike other popular measures, is not directly dependent upon token frequency. Specifically, I suggest that linguistic productivity may be viewed as the probability of an affix to combine with a random base. The advantages of this approach include the following. First, token frequency does not dominate the productivity measure but naturally influences the sampling of bases. Second, we are not just counting attested word types with an affix but rather simulating the construction of these types and then checking whether they are attested in the corpus. Third, a corpus-based approach and randomised design assure that true neologisms and words coined long ago have equal chances to be selected. The proposed algorithm is evaluated both on English and Russian data. The obtained results provide some valuable insights into the relation of linguistic productivity to the number of types and tokens. It looks like burgeoning linguistic productivity manifests itself in an increasing number of types. However, this process unfolds in two stages: first comes the increase in high-frequency items, and only then follows the increase in low-frequency items.
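The core idea of the abstract (sample a base, attach the affix, check whether the result is attested) can be illustrated with a small Monte Carlo sketch. This is not the paper's algorithm, whose details the abstract does not give. It assumes that bases are sampled in proportion to their token frequency, that a suffix attaches by plain string concatenation, and that the corpus is reduced to a set of attested word forms. The function name `estimate_productivity` and the toy data are hypothetical.

```python
import random


def estimate_productivity(affix, base_counts, attested_words,
                          n_samples=10_000, seed=0):
    """Estimate the probability that `affix` combines with a random base.

    Assumptions (not taken from the paper):
    - bases are drawn with probability proportional to token frequency;
    - the affix is a suffix attached by naive concatenation;
    - a candidate counts as a hit if it occurs in `attested_words`.
    """
    rng = random.Random(seed)
    bases = list(base_counts)
    weights = [base_counts[b] for b in bases]

    # Token frequency influences which bases get sampled,
    # but it does not enter the final score directly.
    sampled = rng.choices(bases, weights=weights, k=n_samples)

    # Simulate word construction and check attestation in the corpus.
    hits = sum((base + affix) in attested_words for base in sampled)
    return hits / n_samples


# Toy data: token counts of candidate bases and a set of attested forms.
base_counts = {"kind": 50, "dark": 30, "sad": 15, "table": 5}
attested = {"kindness", "darkness", "sadness"}

score = estimate_productivity("ness", base_counts, attested, n_samples=1000)
print(f"estimated productivity of -ness: {score:.3f}")
```

In this toy setup the estimate approaches the share of sampling weight held by bases whose derived form is attested. A realistic version would need morphological rules for attaching the affix and a principled way of defining the pool of candidate bases.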

Understanding Troll Writing as a Linguistic Phenomenon

Nov 14, 2019

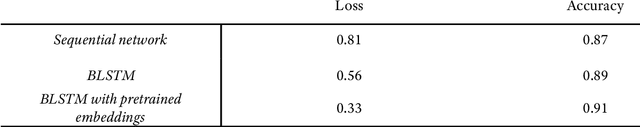

Abstract: The current study yielded a number of important findings. We managed to build a neural network that achieved an accuracy score of 91 per cent in classifying troll and genuine tweets. By means of regression analysis, we identified a number of features that make a tweet more susceptible to correct labelling and found that they are inherently present in troll tweets as a special type of discourse. We hypothesised that those features are grounded in the sociolinguistic limitations of troll writing, which can be best described as a combination of two factors: speaking with a purpose and trying to mask the purpose of speaking. Next, we contended that the orthogonal nature of these factors must necessarily result in the skewed distribution of many different language parameters of troll messages. Having chosen as an example distribution of the topics and vocabulary associated with those topics, we showed some very pronounced distributional anomalies, thus confirming our prediction.
