Seiji Isotani

A Mixed User-Centered Approach to Enable Augmented Intelligence in Intelligent Tutoring Systems: The Case of MathAIde app

Jul 31, 2025

Abstract:Integrating Artificial Intelligence in Education (AIED) aims to enhance learning experiences through technologies like Intelligent Tutoring Systems (ITS), offering personalized learning, increased engagement, and improved retention rates. However, AIED faces three main challenges: the critical role of teachers in the design process, the limitations and reliability of AI tools, and the accessibility of technological resources. Augmented Intelligence (AuI) addresses these challenges by enhancing human capabilities rather than replacing them: systems suggest solutions while humans provide final assessments, thus improving AI over time. In this sense, this study focuses on designing, developing, and evaluating MathAIde, an ITS that corrects mathematics exercises using computer vision and AI and provides feedback based on photos of student work. The methodology included brainstorming sessions with potential users, high-fidelity prototyping, A/B testing, and a case study involving real-world classroom environments for teachers and students. Our research identified several design possibilities for implementing AuI in ITSs, emphasizing a balance between user needs and technological feasibility. Prioritization and validation through prototyping and testing highlighted the importance of efficiency metrics, ultimately leading to a solution that offers pre-defined remediation alternatives for teachers. Real-world deployment demonstrated the usefulness of the proposed solution. Our research contributes to the literature by providing a usable, teacher-centered design approach that involves teachers in all design phases. As a practical implication, we highlight that the user-centered design approach increases the usefulness and adoption potential of AIED systems, especially in resource-limited environments.

Automating Gamification Personalization: To the User and Beyond

Jan 14, 2021

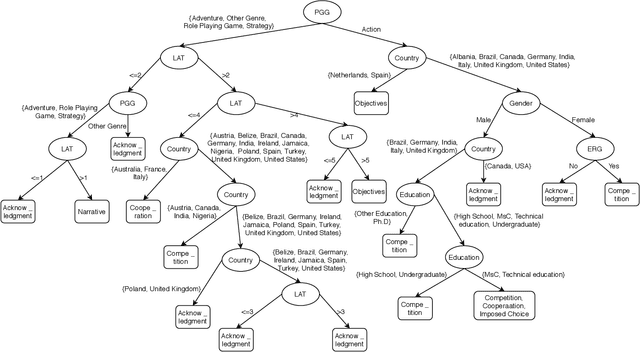

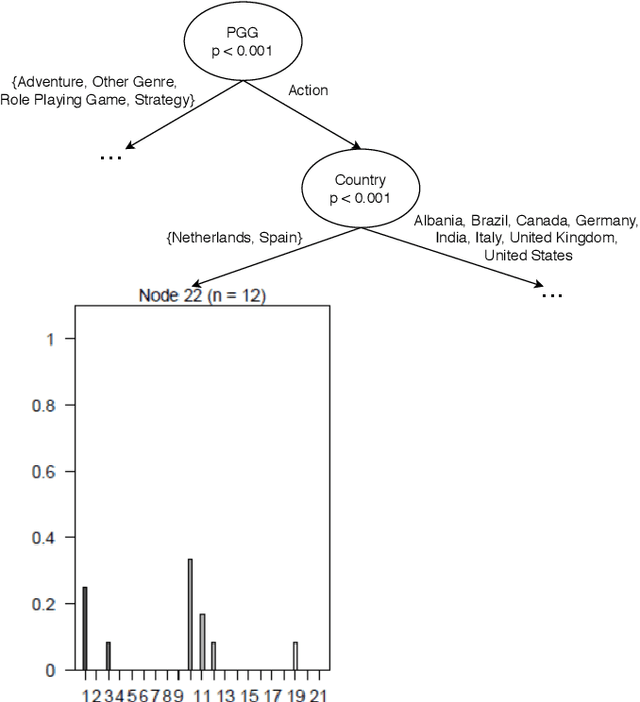

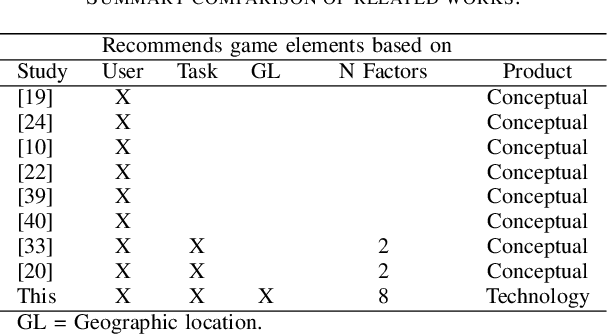

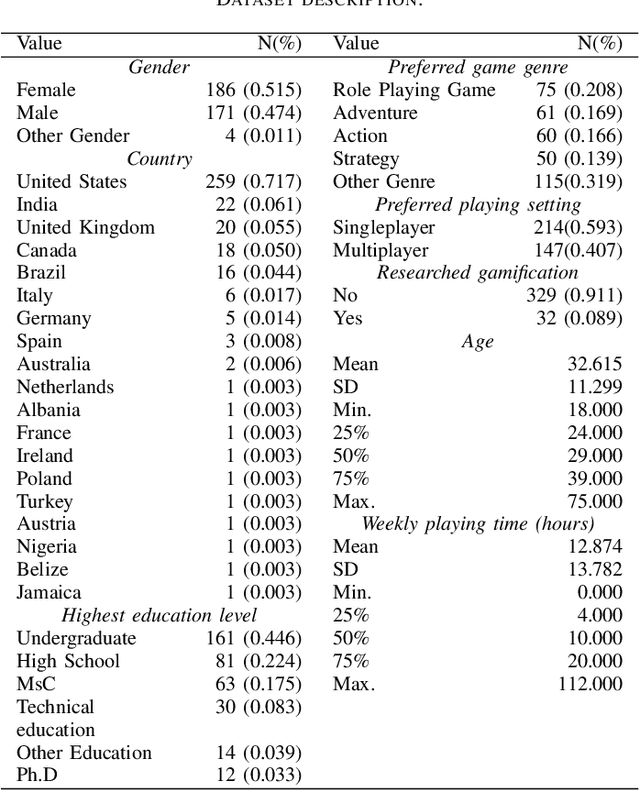

Abstract:Personalized gamification uses knowledge about users to tailor gamification designs, aiming to improve on one-size-fits-all gamification. The tailoring process should simultaneously consider user and contextual characteristics (e.g., activity to be done and geographic location), which leads to many situations that require tailoring. Consequently, tools for automating gamification personalization are needed. Two problems emerge: which of those characteristics are relevant and how to do such tailoring remain open questions, and the required automation tools are lacking. We tackled these problems in two steps. First, we conducted an exploratory study, collecting participants' opinions on the game elements they consider the most useful for different learning activity types (LAT) via survey. Then, we modeled opinions through conditional decision trees to address the aforementioned tailoring process. Second, as a product of the first step, we implemented a recommender system that suggests personalized gamification designs (which game elements to use), addressing the problem of automating gamification personalization. Our findings i) present empirical evidence that LAT, geographic locations, and other user characteristics affect users' preferences, ii) enable defining gamification designs tailored to user and contextual features simultaneously, and iii) provide technological aid for those interested in designing personalized gamification. The main implications are that demographics, game-related characteristics, geographic location, and the LAT to be done, as well as the interaction between different kinds of information (user and contextual characteristics), should be considered in defining gamification designs, and that personalizing gamification designs can be improved with aid from our recommender system.

FOCA: A Methodology for Ontology Evaluation

Sep 02, 2017

Abstract:Modeling an ontology is a hard and time-consuming task. Although methodologies are useful for ontologists to create good ontologies, they do not help with the task of evaluating the quality of the ontology to be reused. For these reasons, it is imperative to evaluate the quality of the ontology after constructing it or before reusing it. The few existing studies usually present only a set of criteria and questions, but no guidelines to evaluate the ontology. The effort to evaluate an ontology is very high, as there is a huge dependence on the evaluator's expertise to understand the criteria and questions in depth. Moreover, the evaluation is still very subjective. This study presents a novel methodology for ontology evaluation, taking into account three fundamental principles: i) it is based on the Goal, Question, Metric approach for empirical evaluation; ii) the goals of the methodology are based on the roles of knowledge representations combined with specific evaluation criteria; iii) each ontology is evaluated according to the type of ontology. The methodology was empirically evaluated using different ontologists and ontologies of the same domain. The main contributions of this study are: i) defining a step-by-step approach to evaluate the quality of an ontology; ii) proposing an evaluation based on the roles of knowledge representations; iii) making explicit how the evaluation differs according to the type of the ontology; iv) a questionnaire to evaluate the ontologies; v) a statistical model that automatically calculates the quality of the ontologies.
