Sarod Yatawatta

Energy and polarization based on-line interference mitigation in radio interferometry

Dec 19, 2024

Abstract: Radio frequency interference (RFI) is a persistent contaminant in terrestrial radio astronomy. While new radio interferometers are becoming operational, novel sources of RFI are also emerging. To strengthen RFI mitigation in modern radio interferometers, we propose an on-line RFI mitigation scheme that can be run in the correlator of such interferometers. We combine statistics based on the energy as well as the polarization alignment of the correlated signal to develop a scheme that can be applied in real time to the data stream produced by the correlator, and that is especially targeted at detecting low duty-cycle or transient RFI. To improve computational efficiency, we explore the use of both single precision and half precision floating point operations in implementing the RFI mitigation algorithm. This makes the algorithm well suited for deployment on accelerator computing devices such as graphics processing units (GPUs), as used by the LOFAR correlator. We provide results based on real data to demonstrate the efficacy of the proposed method.
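A minimal sketch of the energy half of such a detector follows, assuming NumPy and per-baseline parallel-hand correlations `xx` and `yy` over time. The function name `energy_flags`, the median/MAD threshold and the factor `k` are illustrative choices, not the statistic derived in the paper, and the polarization-alignment statistic is not reproduced. The `dtype` argument shows how the same computation can run in single (`np.float32`) or half (`np.float16`) precision.

```python
import numpy as np


def energy_flags(xx, yy, k=5.0, dtype=np.float32):
    """Flag time samples whose energy is a robust outlier (illustrative sketch).

    xx, yy : complex arrays of parallel-hand correlations for one baseline.
    k      : threshold in robust (MAD-based) standard deviations.
    dtype  : float type for the statistics, e.g. np.float32 or np.float16.
    """
    # Per-sample energy of the two parallel-hand correlations.
    energy = (np.abs(xx) ** 2 + np.abs(yy) ** 2).astype(dtype)
    med = np.median(energy)
    # Median absolute deviation, scaled to match a Gaussian standard deviation.
    sigma = np.median(np.abs(energy - med)) * dtype(1.4826)
    # One-sided test: RFI adds power, so only high-energy samples are flagged.
    return energy > med + dtype(k) * sigma


# Usage: unit-variance complex noise with a few strong injected spikes.
rng = np.random.default_rng(1)
n = 1000
xx = (rng.normal(size=n) + 1j * rng.normal(size=n)) / np.sqrt(2)
yy = (rng.normal(size=n) + 1j * rng.normal(size=n)) / np.sqrt(2)
spikes = np.array([10, 200, 450, 700, 999])
xx[spikes] += 30.0
yy[spikes] += 30.0
flags32 = energy_flags(xx, yy, dtype=np.float32)
flags16 = energy_flags(xx, yy, dtype=np.float16)
```

Because the energy of pure noise is not Gaussian, a few noise samples can still exceed the threshold, so `k` trades false flags against missed RFI. An on-line variant would update the median and MAD over a sliding window of correlator output rather than over a whole block.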

Hint assisted reinforcement learning: an application in radio astronomy

Jan 10, 2023

Abstract: Model-based reinforcement learning has proven to be more sample efficient than model-free methods. On the other hand, constructing a dynamics model adds complexity to model-based reinforcement learning. Data processing tasks in radio astronomy are examples where the very problem being solved by reinforcement learning is the creation of a model. Fortunately, many methods based on heuristics or signal processing already exist to perform the same tasks, and we can leverage them to propose the best action to take, in other words, to provide a 'hint'. We propose to use 'hints' generated by the environment as an aid to the reinforcement learning process, mitigating the complexity of model construction. We modify the soft actor critic algorithm to use hints and use the alternating direction method of multipliers algorithm with inequality constraints to train the agent. Results in several environments show that using hints increases sample efficiency compared with model-free methods.
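The abstract does not spell out the form of the hint constraint, so the sketch below assumes one purely for illustration: an action `a` should minimize a stand-in objective `||a - c||^2` while staying within distance `radius` of the hint, i.e. the inequality constraint `||a - hint||^2 - radius^2 <= 0`. It is solved with the single-block augmented Lagrangian method of multipliers that ADMM builds on, not the paper's actual training procedure; `hint_constrained_action` and its parameters are hypothetical.

```python
import numpy as np


def hint_constrained_action(c, hint, radius, rho=1.0, outer=50, inner=300, lr=0.05):
    """Minimize ||a - c||^2 subject to ||a - hint||^2 - radius^2 <= 0.

    Augmented Lagrangian (method of multipliers) for one inequality
    constraint; a hypothetical illustration. Returns the action and multiplier.
    """
    c = np.asarray(c, dtype=float)
    hint = np.asarray(hint, dtype=float)
    a = hint.copy()  # start at the hint, which is feasible
    y = 0.0          # Lagrange multiplier for the inequality, kept >= 0
    for _ in range(outer):
        # Inner loop: gradient descent on the augmented Lagrangian in a.
        for _ in range(inner):
            g = np.dot(a - hint, a - hint) - radius ** 2  # constraint value
            weight = max(0.0, y + rho * g)  # zero while the constraint is slack
            grad = 2.0 * (a - c) + weight * 2.0 * (a - hint)
            a = a - lr * grad
        # Outer loop: projected dual ascent on the multiplier.
        g = np.dot(a - hint, a - hint) - radius ** 2
        y = max(0.0, y + rho * g)
    return a, y


a, y = hint_constrained_action(c=[3.0, 0.0], hint=[0.0, 0.0], radius=1.0)
```

With `c` farther than `radius` from the hint, the solution lands on the boundary of the ball in the direction of `c` (here `[1, 0]`), and the multiplier `y` measures how strongly the hint pulls the action back; with `c` inside the ball the constraint is inactive and `y` stays at zero.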

Deep reinforcement learning for smart calibration of radio telescopes

Feb 05, 2021

Abstract: Modern radio telescopes produce unprecedented amounts of data, which are passed through many processing pipelines before the delivery of scientific results. Hyperparameters of these pipelines need to be tuned by hand to produce optimal results. Because many thousands of observations are taken during the lifetime of a telescope, and because each observation has its own unique settings, fine tuning the pipelines is a tedious task. To automate this process of hyperparameter selection in data calibration pipelines, we introduce the use of reinforcement learning. We use a reinforcement learning technique called twin delayed deep deterministic policy gradient (TD3) to train an autonomous agent to perform this fine tuning. For the sake of generalization, we consider the pipeline to be a black-box system where only an interpreted state of the pipeline is used by the agent. The autonomous agent trained in this manner is able to determine optimal settings for diverse observations and is therefore able to perform 'smart' calibration, minimizing the need for human intervention.
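TD3 is a standard actor-critic algorithm, so its critic target can be sketched independently of the telescope setting. The function below assumes NumPy and callables `actor_targ`, `q1_targ` and `q2_targ` for the target networks (hypothetical placeholders; in the paper's setting the state would be the interpreted pipeline state). It shows the two ingredients that distinguish TD3's critic update from DDPG's: target-policy smoothing and the clipped double-Q minimum. The third ingredient, delayed actor updates, belongs to the training loop and is not shown.

```python
import numpy as np


def td3_target(reward, done, next_state, actor_targ, q1_targ, q2_targ,
               gamma=0.99, noise_std=0.2, noise_clip=0.5,
               act_low=-1.0, act_high=1.0, rng=None):
    """Critic regression target y = r + gamma * (1 - done) * min(Q1', Q2')."""
    rng = np.random.default_rng() if rng is None else rng
    a_next = actor_targ(next_state)
    # Target-policy smoothing: add clipped Gaussian noise to the target action.
    noise = np.clip(rng.normal(0.0, noise_std, size=a_next.shape),
                    -noise_clip, noise_clip)
    a_next = np.clip(a_next + noise, act_low, act_high)
    # Clipped double-Q: use the smaller of the two target critics.
    q_next = np.minimum(q1_targ(next_state, a_next), q2_targ(next_state, a_next))
    return reward + gamma * (1.0 - done) * q_next


# Usage with toy stand-ins for the target networks (hypothetical).
batch = np.zeros((2, 3))  # two next-states of a 3-dimensional state
y = td3_target(reward=np.array([1.0, 1.0]), done=np.array([0.0, 1.0]),
               next_state=batch,
               actor_targ=lambda s: np.zeros((len(s), 1)),
               q1_targ=lambda s, a: np.full(len(s), 1.0),
               q2_targ=lambda s, a: np.full(len(s), 2.0),
               gamma=0.9, noise_std=0.0)
# y is [1.9, 1.0]: the smaller critic (1.0) is used, and the terminal sample keeps only r.
```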

Stochastic Calibration of Radio Interferometers

Mar 03, 2020

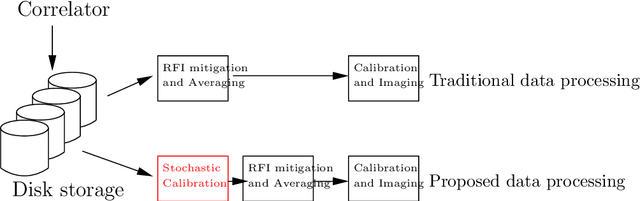

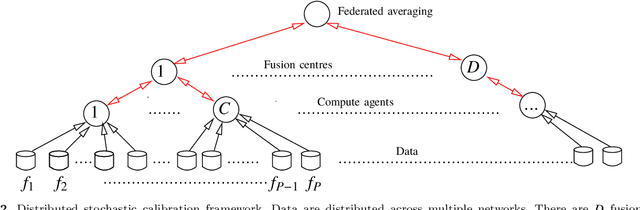

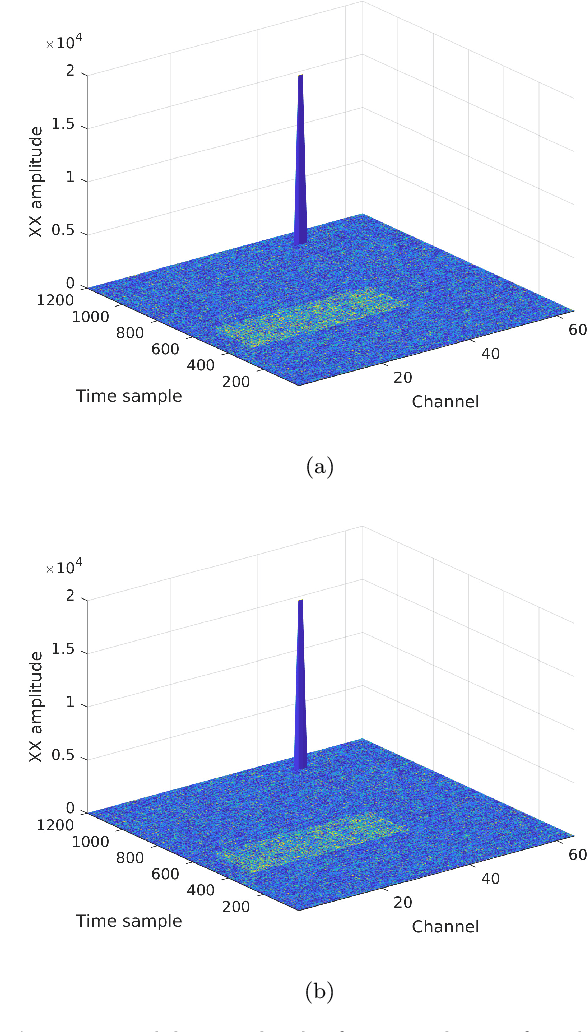

Abstract: With ever-increasing data rates produced by modern radio telescopes like LOFAR and future telescopes like the SKA, many data processing steps are overwhelmed by the amount of data that needs to be handled using limited compute resources. Calibration is one such operation: it dominates the overall computational cost of data processing, yet it is essential for reaching many science goals. Calibration algorithms exist that scale well with the number of stations of an array and the number of directions being calibrated. However, the remaining bottleneck is the raw data volume, which scales with the number of baselines and is therefore proportional to the square of the number of stations. We propose a 'stochastic' calibration strategy where we only read in a mini-batch of data for obtaining calibration solutions, as opposed to reading the full batch of data being calibrated. Nonetheless, we obtain solutions that are valid for the full batch of data. Normally, data need to be averaged before calibration is performed so that they fit into size-limited compute memory. Stochastic calibration removes the need to average data before calibration, and offers many advantages including: enabling the mitigation of faint radio frequency interference; better removal of strong celestial sources from the data; and better detection and spatial localization of fast radio transients.
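Calibration models are nonlinear, but the core idea of reading only a mini-batch per update while still converging to a solution valid for the full batch can be seen on a linear stand-in. The sketch below assumes NumPy and a hypothetical real-valued system `y = A x` whose rows play the role of visibilities; it runs mini-batch stochastic gradient descent and is not the calibration algorithm of the paper. For context, an array of N stations has N(N-1)/2 baselines, which is why the number of rows grows quadratically with N.

```python
import numpy as np


def minibatch_lsq(A, y, batch=32, lr=0.1, epochs=20, seed=0):
    """Minimize 0.5 * mean((A x - y)**2) reading only `batch` rows per update."""
    rng = np.random.default_rng(seed)
    n, p = A.shape
    x = np.zeros(p)
    for _ in range(epochs):
        order = rng.permutation(n)  # reshuffle rows every epoch
        for start in range(0, n, batch):
            idx = order[start:start + batch]
            Ab, yb = A[idx], y[idx]  # only this mini-batch is "read in"
            grad = Ab.T @ (Ab @ x - yb) / len(idx)
            x -= lr * grad
    return x


rng = np.random.default_rng(0)
A = rng.normal(size=(2000, 4))
x_true = np.array([1.0, -2.0, 0.5, 3.0])
y = A @ x_true  # noise-free, so the full-batch solution is exactly x_true
x_hat = minibatch_lsq(A, y)
```

Each update touches at most 32 of the 2000 rows, yet `x_hat` matches the full-batch least-squares solution; with noisy data a decaying step size would be needed to settle on it.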

A Stochastic LBFGS Algorithm for Radio Interferometric Calibration

Apr 13, 2019

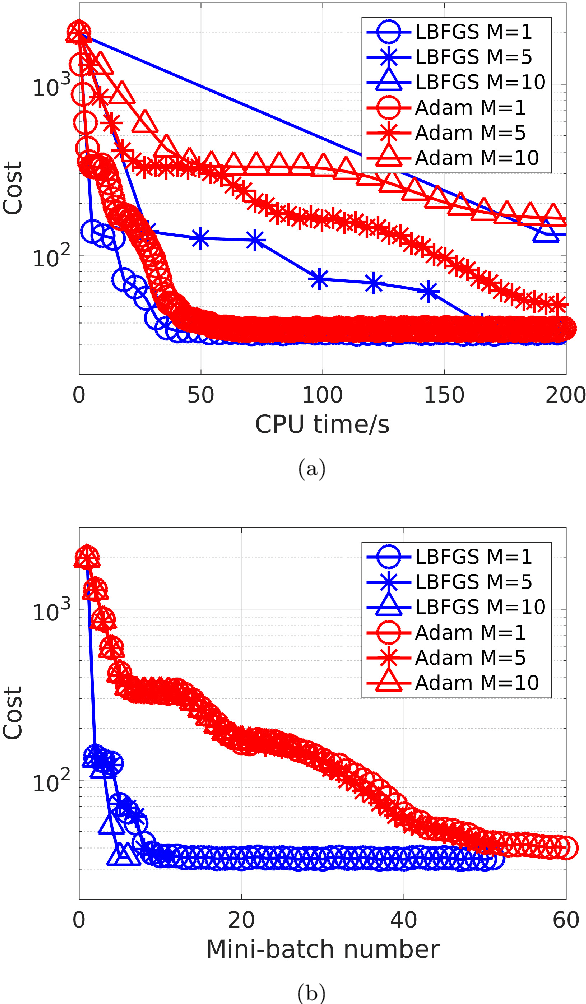

Abstract: We present a stochastic, limited-memory Broyden-Fletcher-Goldfarb-Shanno (LBFGS) algorithm that is suitable for handling very large amounts of data. A direct application of this algorithm is radio interferometric calibration of raw data at fine time and frequency resolution. Almost all existing radio interferometric calibration algorithms assume that the dataset being calibrated fits into memory. Therefore, the raw data are averaged in time and frequency to reduce their size by many orders of magnitude before calibration is performed. However, this averaging is detrimental to the detection of some signals of interest that have narrow bandwidth and short duration, such as fast radio bursts (FRBs). Using the proposed algorithm, it is possible to calibrate data at a resolution so fine that the data cannot be entirely loaded into memory, thus preserving such signals. As an additional demonstration, we use the proposed algorithm to train deep neural networks and compare its performance against the mainstream first-order optimization algorithms used in deep learning.
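Every LBFGS variant, stochastic or not, computes its search direction with the standard two-loop recursion over the stored curvature pairs. A minimal NumPy sketch follows; the function name `lbfgs_direction` is hypothetical, and the paper's safeguards for noisy mini-batch gradients are not reproduced. In a stochastic setting `grad` would be a mini-batch gradient, and a common precaution (an assumption here) is to form each gradient difference `y_i` from gradients evaluated on the same mini-batch.

```python
import numpy as np


def lbfgs_direction(grad, s_hist, y_hist):
    """Return -H_k @ grad using the LBFGS two-loop recursion.

    s_hist, y_hist: lists of steps s_i = x_{i+1} - x_i and gradient
    differences y_i = g_{i+1} - g_i, oldest first (each with y_i . s_i > 0).
    """
    q = np.array(grad, dtype=float)  # work on a copy of the gradient
    rhos = [1.0 / np.dot(y, s) for s, y in zip(s_hist, y_hist)]
    alphas = []
    # First loop: newest pair to oldest.
    for s, y, rho in reversed(list(zip(s_hist, y_hist, rhos))):
        a = rho * np.dot(s, q)
        q -= a * y
        alphas.append(a)
    # Initial inverse Hessian gamma * I, scaled from the newest pair.
    if s_hist:
        s, y = s_hist[-1], y_hist[-1]
        q *= np.dot(s, y) / np.dot(y, y)
    # Second loop: oldest pair to newest (alphas were stored newest first).
    for (s, y, rho), a in zip(zip(s_hist, y_hist, rhos), reversed(alphas)):
        b = rho * np.dot(y, q)
        q += (a - b) * s
    return -q


# Usage: curvature pairs from a quadratic with Hessian diag(1, 2, 3).
H = np.diag([1.0, 2.0, 3.0])
rng = np.random.default_rng(0)
s_hist = [rng.normal(size=3) for _ in range(3)]
y_hist = [H @ s for s in s_hist]
d = lbfgs_direction(y_hist[-1], s_hist, y_hist)
```

A quick sanity check is the secant property: passing the newest `y` as `grad` returns minus the newest `s`, whatever the older pairs are. With an empty history the direction reduces to plain steepest descent.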

Statistical Performance of Radio Interferometric Calibration

Feb 27, 2019

Abstract: Calibration is an essential step in radio interferometric data processing that corrects the data for systematic errors and, in addition, subtracts bright foreground interference to reveal weak signals hidden in the residual. These weak and unknown signals are much sought after to reach many science goals, but the effect of calibration on such signals is an ever-present concern. The main reason for this is the incompleteness of the model used in calibration. Distributed calibration based on consensus optimization has been shown to mitigate the effect of model incompleteness by calibrating data covering a wide bandwidth in a computationally efficient manner. In this paper, we study the statistical performance of direction dependent distributed calibration, i.e., the distortion caused by calibration on the residual statistics. To study this, we consider the mapping between the input uncalibrated data and the output residual data. We derive an analytical relationship for the influence of the input on the residual and use this to find the relationship between the input and output probability density functions. Using simulations, we show that the smallest eigenvalue of the Jacobian of this mapping is a reliable indicator of the statistical performance of calibration. The analysis developed in this paper can also be applied to other data processing steps in radio interferometry, such as imaging and foreground subtraction, as well as to many other machine learning problems.
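To make the Jacobian idea concrete on a problem with a known answer, the sketch below treats regularized least-squares fitting as a stand-in for calibration (an assumption, not the paper's model). The residual `x - A @ theta(x)` is a map from input data to output residual; its Jacobian is estimated by central finite differences and its smallest eigenvalue is reported. The names `residual_map`, `jacobian_fd` and `smallest_eigenvalue` are hypothetical. For this linear map the eigenvalues are 1 and `lam / (sigma_i**2 + lam)` for the singular values `sigma_i` of `A`, so stronger regularization pushes the smallest eigenvalue toward 1, i.e. less distortion of the residual.

```python
import numpy as np


def residual_map(x, A, lam):
    """Fit theta = argmin ||x - A theta||^2 + lam ||theta||^2; return the residual."""
    p = A.shape[1]
    theta = np.linalg.solve(A.T @ A + lam * np.eye(p), A.T @ x)
    return x - A @ theta


def jacobian_fd(f, x, eps=1e-6):
    """Central finite-difference Jacobian of f at x; column j is df/dx_j."""
    n = x.size
    J = np.empty((f(x).size, n))
    for j in range(n):
        e = np.zeros(n)
        e[j] = eps
        J[:, j] = (f(x + e) - f(x - e)) / (2.0 * eps)
    return J


def smallest_eigenvalue(J):
    """Smallest real part among the eigenvalues of a square Jacobian."""
    return float(np.min(np.linalg.eigvals(J).real))


rng = np.random.default_rng(0)
A = rng.normal(size=(20, 3))
x = rng.normal(size=20)
lam = 1.0
J = jacobian_fd(lambda v: residual_map(v, A, lam), x)
lam_min = smallest_eigenvalue(J)
```

Finite differences are used so the same code applies unchanged to a nonlinear residual map, where no closed form is available.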
