Saif S. S. Al-Wahaibi

Improving Convolutional Neural Networks for Fault Diagnosis by Assimilating Global Features

Oct 03, 2022

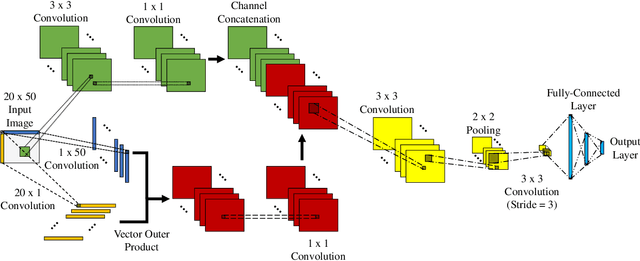

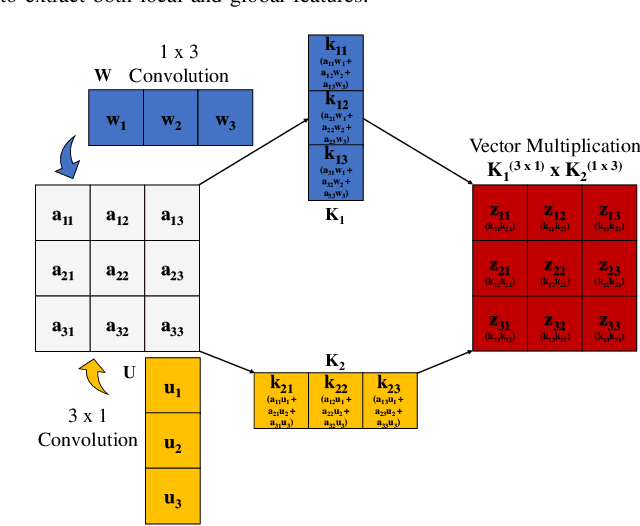

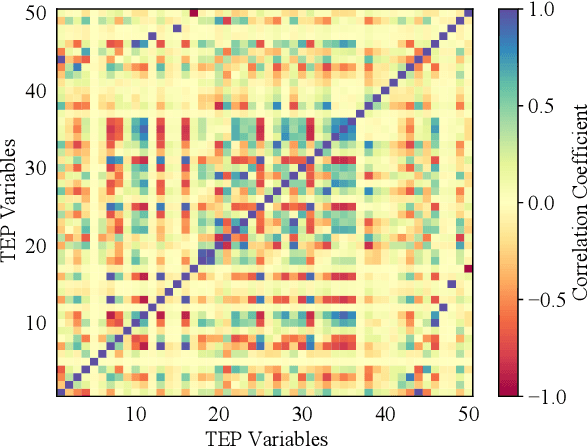

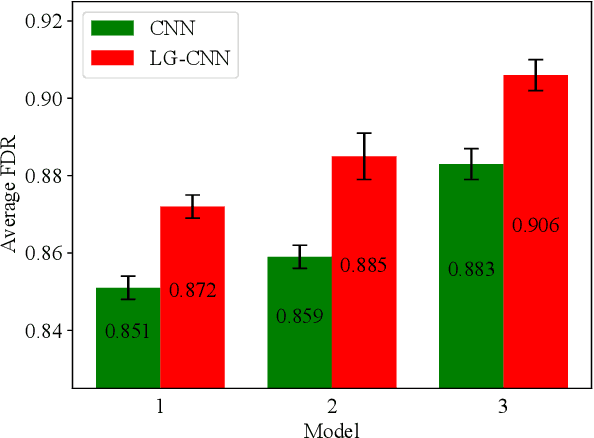

Abstract: Deep learning techniques have become prominent in modern fault diagnosis for complex processes. In particular, convolutional neural networks (CNNs) have shown an appealing capacity to handle multivariate time-series data by converting them into images. However, existing CNN techniques mainly focus on capturing local or multi-scale features from the input images. A deep CNN is often required to extract global features indirectly, even though these features are critical for describing images converted from multivariate dynamical data. This paper proposes a novel local-global CNN (LG-CNN) architecture that directly accounts for both local and global features for fault diagnosis. Specifically, the local features are acquired by traditional local kernels, whereas the global features are extracted by 1D tall and fat kernels that span the entire height and width of the image, respectively. The local and global features are then merged for classification using fully-connected layers. The proposed LG-CNN is validated on the benchmark Tennessee Eastman process (TEP) dataset. A comparison with a traditional CNN shows that the proposed LG-CNN can greatly improve fault diagnosis performance without significantly increasing model complexity. This improvement is attributed to the much wider local receptive field created by the LG-CNN compared with that of the CNN. The proposed LG-CNN architecture can be easily extended to other image processing and computer vision tasks.
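To make the kernel shapes in the abstract concrete, the following is a minimal NumPy sketch of one local branch and two global branches applied to a single-channel image. It is an illustration under stated assumptions, not the authors' implementation: the image size (`H`, `W`), the 3x3 local kernel, the random weights, and the helper names `conv2d_valid` and `local_global_features` are all hypothetical, and the sketch omits activations, multiple channels, pooling, the fully-connected classifier, and training.

```python
import numpy as np

def conv2d_valid(image, kernel):
    """Plain 'valid' 2D cross-correlation (no padding, stride 1)."""
    H, W = image.shape
    kh, kw = kernel.shape
    out = np.empty((H - kh + 1, W - kw + 1))
    for i in range(out.shape[0]):
        for j in range(out.shape[1]):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

def local_global_features(image, local_k, tall_k, fat_k):
    """Concatenate flattened local and global feature maps (hypothetical helper)."""
    local = conv2d_valid(image, local_k)  # small kernel: local patterns
    tall = conv2d_valid(image, tall_k)    # H x 1 kernel: spans the full height
    fat = conv2d_valid(image, fat_k)      # 1 x W kernel: spans the full width
    return np.concatenate([local.ravel(), tall.ravel(), fat.ravel()])

# Assumed sizes for illustration only; not taken from the paper.
rng = np.random.default_rng(0)
H, W = 20, 52
image = rng.standard_normal((H, W))

local_k = rng.standard_normal((3, 3))  # traditional local kernel
tall_k = rng.standard_normal((H, 1))   # "tall" kernel
fat_k = rng.standard_normal((1, W))    # "fat" kernel

features = local_global_features(image, local_k, tall_k, fat_k)
print(conv2d_valid(image, tall_k).shape)  # one response per column
print(conv2d_valid(image, fat_k).shape)   # one response per row
print(features.shape)                     # merged vector for a classifier
```

In this sketch each output of the tall kernel depends on an entire column of the image and each output of the fat kernel depends on an entire row, so a single layer already sees the full extent of one image dimension, whereas the 3x3 kernel sees only a small neighborhood.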
