Ruslan Vasilev

Calibration of Neural Networks

Mar 19, 2023

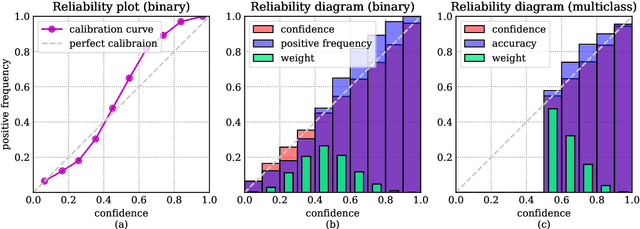

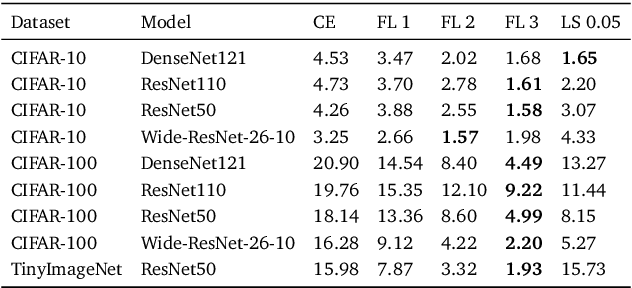

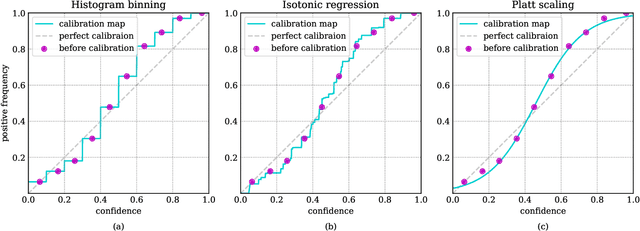

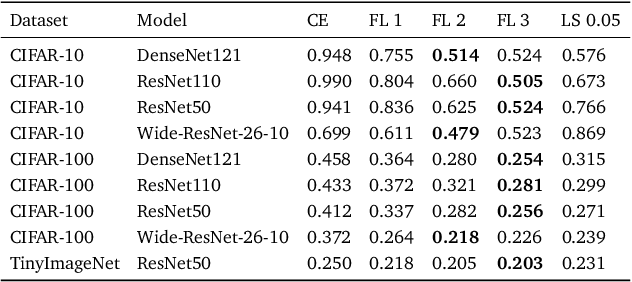

Abstract: Neural networks solving real-world problems are often required not only to make accurate predictions but also to report a confidence level for each prediction. A model's calibration describes how closely its estimated confidence matches the true probability. This paper surveys confidence calibration in the context of neural networks and provides an empirical comparison of calibration methods. We analyze the problem statement, the definitions of calibration, and different approaches to evaluation: visualizations and scalar measures that estimate whether a model is well calibrated. We review modern calibration techniques, both those based on post-processing and those requiring changes to training. The empirical experiments cover various datasets and models and compare calibration methods according to different criteria.
