Ruoyun Chen

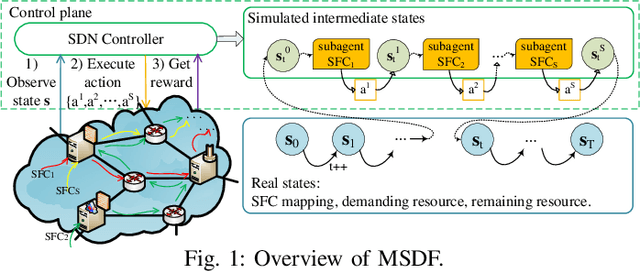

MSDF: A Deep Reinforcement Learning Framework for Service Function Chain Migration

Nov 13, 2019

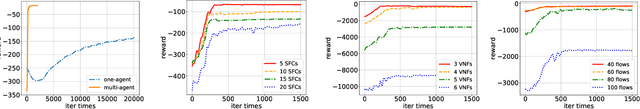

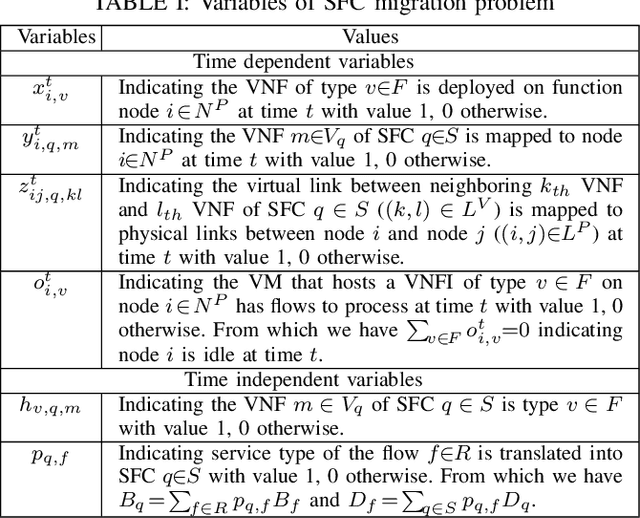

Abstract: Under dynamic traffic, service function chain (SFC) migration is considered an effective way to improve resource utilization. However, the lack of future network information leads to non-optimal solutions, which motivates us to study reinforcement learning based SFC migration from a long-term perspective. In this paper, we formulate SFC migration as the problem of minimizing total network operation cost subject to constraints on users' quality of service. We first design a deep Q-network based algorithm to solve the single-SFC migration problem, which can adjust the migration strategy online without knowing future information. Further, a novel multi-agent cooperative framework, called MSDF, is proposed to address the challenge of migrating multiple SFCs, building on the single-SFC solution. MSDF reduces complexity and thus accelerates convergence, especially in large-scale networks. Experimental results demonstrate that MSDF outperforms typical heuristic algorithms under various scenarios.
